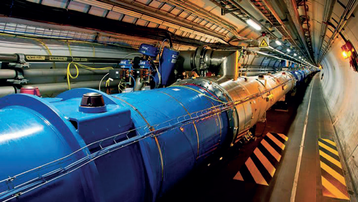

The Large Hadron Collider (LHC) sings. Its superconducting magnets hum and whistle as they fling particles at nearly light-speed around a 27km (17 mile) circular tunnel that straddles the Swiss/French border.

But next year, the world’s largest particle accelerator will go silent.

In 2012 the scientists at CERN, Europe's nuclear physics laboratory, used the LHC to find the Higgs boson - the elusive particle that explains why matter has mass. Then, after increasing the energy of the LHC, they began to probe the Higgs’ properties.

LHC

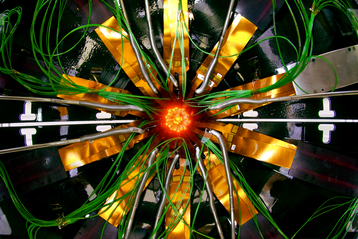

The LHC works by smashing particles together, as fast and as hard and as often as possible, by crossing two precisely focused beams rotating in opposite directions around the underground ring. Sifting the shrapnel from such collisions proved the existence of the Higgs boson, and there is more to discover.

In 2019, it’s time for a second upgrade. The “luminosity” of the LHC will be increased tenfold (see box), creating 15 million Higgs bosons per year, after an upgrade process which will shut the particle accelerator down twice. Two of the experiments spaced around the ring, called ALICE and LHCb, aren’t waiting for the new LHC. When the installation goes quiet next year, they will upgrade their equipment to prevent scientific data going to waste.

LHCb is looking at the b or “beauty” quark to try and solve a puzzle in physics: what happened in the Big Bang to skew the universe so that it contains mostly regular matter, with very little anti-matter (see Box: baryogenesis)?

The LHCb experiment will be updated to capture more data, said Niko Neufeld of the LHCb’s online team. It already captures information from a million collisions each second - a rate of 1MHz. That’s an almost unimaginable feat, but LHCb is missing most of the action. When the experiment is active, there are 40 million collisions each second. The detectors can quickly select a million likely collisions to capture. But what is the team missing in the other events?

“Right now we trigger in an analogue way at 1MHz,” Neufeld said. The detectors quickly decide whether to take a picture of specific events. But LHCb wants to collect all the data, and search it more carefully after the event. “We want to upgrade the sampling to 40MHz, and move the decision to a computer cluster,” he explained. Instead of making a selection of collision data at the sensor, all information will be captured and analyzed.
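To put the two rates side by side, here is a minimal sketch of the arithmetic, using only the figures Neufeld quotes. The function and variable names are illustrative, not anything from LHCb's software.

```python
# Figures quoted in the article.
COLLISION_RATE_HZ = 40_000_000   # collisions per second while LHCb is active
TRIGGER_RATE_HZ = 1_000_000      # collisions currently captured per second


def captured_fraction(captured_hz: int, total_hz: int) -> float:
    """Return the share of collisions that are recorded."""
    return captured_hz / total_hz


current = captured_fraction(TRIGGER_RATE_HZ, COLLISION_RATE_HZ)
upgraded = captured_fraction(COLLISION_RATE_HZ, COLLISION_RATE_HZ)

print(f"captured today: {current:.1%}")            # 2.5%
print(f"discarded today: {1 - current:.1%}")       # 97.5%
print(f"captured after upgrade: {upgraded:.0%}")   # 100%
```

In other words, the analogue trigger currently keeps one collision in 40, and the upgrade hands the remaining 39 to software.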

ALICE

ALICE, near the village of St Genis-Pouilly in France, is also doing fundamental work, recreating conditions shortly after the Big Bang by colliding lead ions to generate temperatures more than 100,000 times hotter than the center of the Sun, so that quarks and gluons can be observed. As with LHCb, ALICE is currently losing a lot of potentially useful data, so it will be getting a similar upgrade.

These upgrades create a fundamental data transport problem. The detectors digitize the data, but vast quantities must then be carried over fibers which gather readings from thousands of sensors. The data is encoded in a special protocol, virtually unique to CERN, designed to carry high bandwidth in a high-radiation environment. The transfers add up to 40 terabits per second (Tbps) carried over thousands of optical fibers - and Neufeld said the HL-LHC upgrade will increase this to 500Tbps in 2027.
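A rough sense of scale can be had by dividing the bandwidth by the collision rate. The sketch below assumes the 40Tbps figure corresponds to reading out all 40 million collisions per second after the upgrade; the article does not state this explicitly, so treat the per-collision size as an estimate rather than a CERN specification.

```python
# Figures quoted in the article.
BANDWIDTH_BPS = 40e12         # 40 terabits per second
HL_LHC_BANDWIDTH_BPS = 500e12  # 500 terabits per second, expected in 2027
COLLISION_RATE_HZ = 40e6      # 40 million collisions per second

# Assumption: the full 40Tbps is shared evenly across every collision.
bits_per_collision = BANDWIDTH_BPS / COLLISION_RATE_HZ
kilobytes_per_collision = bits_per_collision / 8 / 1000

# Growth factor implied by the HL-LHC figure.
growth = HL_LHC_BANDWIDTH_BPS / BANDWIDTH_BPS

print(f"data per collision: {kilobytes_per_collision:.0f} kB")  # 125 kB
print(f"HL-LHC growth factor: {growth}x")                       # 12.5x
```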

This kind of cable is fantastically expensive to run, so the experimenters have been forced to bring their IT equipment close to the detectors. “It is much cheaper to put them right on top of the experiment,” Neufeld explained. “It is not cost-efficient to transport more than 40Tbps over 3km!”

It’s not possible to fit servers below ground right next to the detectors, Neufeld said. Apart from the lack of room in CERN’s tunnels, there’s the problem of access. The environment around the LHC is sensitive, and access is fiercely guarded, so random IT staff can’t just show up to upgrade systems.

So the computing is hosted above ground in data center modules the size of shipping containers, specially built by Automation Datacenter Facilities of Belgium. There’s only a 300m length of fiber separating them from the experiments.

Two “event building” modules handle LHCb’s I/O, taking the data from the fiber cables and piecing together information from the myriad detectors to reconstruct the events detected by LHCb.

There are 36 racks in the I/O modules, all 800mm wide and loaded with specialist systems built for CERN by a French university, which translate the data from the CERN protocol to a regular form, and enable it to be collated into actual events.
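The idea behind event building can be sketched in a few lines: fragments from different detector sources arrive interleaved, and a collision is only complete once every source has reported for it. This is a toy illustration, not LHCb's implementation; the `Fragment` and `EventBuilder` names, the `event_id` and `source_id` fields and the three-source setup are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Fragment:
    """One detector source's data for one collision (hypothetical layout)."""
    event_id: int    # which collision the data belongs to
    source_id: int   # which detector source produced it
    payload: bytes


class EventBuilder:
    """Collects fragments until every source has reported for an event."""

    def __init__(self, n_sources: int):
        self.n_sources = n_sources
        self._pending = defaultdict(dict)  # event_id -> {source_id: payload}

    def add(self, frag: Fragment) -> Optional[List[bytes]]:
        """Store a fragment; return the full event once it is complete."""
        parts = self._pending[frag.event_id]
        parts[frag.source_id] = frag.payload
        if len(parts) == self.n_sources:
            del self._pending[frag.event_id]
            return [parts[s] for s in sorted(parts)]  # ordered by source
        return None


builder = EventBuilder(n_sources=3)
stream = [
    Fragment(7, 0, b"a"), Fragment(8, 1, b"x"), Fragment(7, 2, b"c"),
    Fragment(7, 1, b"b"), Fragment(8, 0, b"w"),
]
complete = []
for frag in stream:
    event = builder.add(frag)
    if event is not None:
        complete.append((frag.event_id, event))

print(complete)  # [(7, [b'a', b'b', b'c'])]
```

Event 8 stays pending here because its third fragment never arrives, which is the kind of bookkeeping a real event builder has to do at vastly higher rates.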

The event data is transferred to four modules which search for interesting patterns, using general-purpose GPUs for number crunching. These contain 96 of the more customary 600mm-wide racks.

All the racks in the modules are rated for 22kW, except for 16 of the compute racks (four in each module) which are rated up to 44kW. The total power drawn by all six modules is capped at 2.2MW.
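The rack ratings and the overall cap can be compared with some quick arithmetic. The sketch below assumes the 36 and 96 rack counts are totals across the two I/O modules and the four compute modules respectively, which is how the text reads but is not spelled out.

```python
# Figures quoted in the article.
RACK_KW_STANDARD = 22       # rating of most racks
RACK_KW_HIGH_DENSITY = 44   # rating of the 16 denser compute racks
POWER_CAP_KW = 2_200        # 2.2MW cap across all six modules

# Assumption: these counts are totals across the modules of each type.
io_racks = 36
compute_racks = 96
high_density_racks = 16     # four in each of the four compute modules

total_racks = io_racks + compute_racks
standard_racks = total_racks - high_density_racks

# Sum of the individual rack ratings if every rack ran flat out.
rated_kw = (standard_racks * RACK_KW_STANDARD
            + high_density_racks * RACK_KW_HIGH_DENSITY)

cap_share = POWER_CAP_KW / rated_kw
average_kw = POWER_CAP_KW / total_racks

print(f"racks: {total_racks}")                                # 132
print(f"sum of rack ratings: {rated_kw} kW")                  # 3256 kW
print(f"cap as share of ratings: {cap_share:.1%}")            # 67.6%
print(f"average per rack at the cap: {average_kw:.1f} kW")    # 16.7 kW
```

On these assumptions the racks could not all run at their rated load at once: the cap is roughly two thirds of the combined ratings.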

Meanwhile, the ALICE experiment will have four other modules for its own event filtering, with a similar total power draw.

The modules could be placed anywhere, said Juergen Cayman of Automation: the company's SAFE Multi-Unit Platform just needs a concrete base, power and water. At CERN, they sit between the cooling towers and the refrigerant systems for the LHC itself.

The modular facility has a reliable power supply, but Neufeld points out there is no need for fully redundant power: “We are providing a power module with A and B feeds. There is a small amount of UPS on the ALICE system, but it’s built with no redundancy. If the electricity drops, there are no particles.”

Removing heat efficiently is not a problem in the near-Alpine climate of CERN. The modular data centers are cooled by air using Stulz CyberHandler 2 chiller units, mounted on top of the Automation modules, with indirect heat exchangers supplemented by evaporative cooling, and no compressors. The whole system will have a power usage effectiveness (PUE) rating of below 1.075.
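PUE is the ratio of total facility power to the power that reaches the IT equipment, so a figure of 1.075 means cooling and other overheads add at most 7.5 percent on top of the IT load. As a worked sketch, the code below assumes the 2.2MW cap refers to total facility power; if the cap is instead the IT load alone, the numbers shift slightly.

```python
def it_and_overhead_kw(total_kw: float, pue: float) -> tuple:
    """Split total facility power into IT load and overhead for a given PUE.

    PUE = total facility power / IT power, so IT power = total / PUE.
    """
    it_kw = total_kw / pue
    return it_kw, total_kw - it_kw


# Assumption: the 2.2MW cap is total facility power, not IT load alone.
TOTAL_KW = 2_200.0
PUE_LIMIT = 1.075   # the article says the real figure will be below this

it_kw, overhead_kw = it_and_overhead_kw(TOTAL_KW, PUE_LIMIT)

# Because the actual PUE is below the limit, IT load is a lower bound
# and overhead is an upper bound.
print(f"IT load: at least {it_kw:.1f} kW")                  # 2046.5 kW
print(f"overhead: at most {overhead_kw:.1f} kW")            # 153.5 kW
print(f"overhead vs IT load: {overhead_kw / it_kw:.1%}")    # 7.5%
```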

Liquid cooling could appear in the future, but Neufeld isn’t ready to use it on the live LHCb systems. He’s evaluating a system from European liquid cooling specialist Submer (see box) but thinks the technology won’t be necessary during the available upgrade window: “You have to use the technology that is appropriate at the time,” he explained.

The technology has to be solid, because Neufeld gets just one shot at the upgrade. The first two modules arrive in September 2018, and the rest of the technology has to be delivered and deployed while the LHC is quiet. Before the experiments resume in 2021, the new fiber connections must be installed, and the IT systems must be built, tested and fully working.

Physicists will be waiting with great interest. When the LHC finds its voice again in 2021, LHCb and ALICE will be picking out more harmonies, making better use of the available data.

Even before the luminosity increases, this upgrade could shine an even brighter light on the structure of the universe.
