The digital twin for the data center is now ‘a thing’ insofar as the whole industry is talking about it. But it’s not ‘a thing’ in the sense of a single, agreed-upon version.

In fact, as the industry evolves to embrace the Internet of Things (IoT), and game changers such as Artificial Intelligence (AI) come to the fore, there’s a lot of scope for variation in what a digital twin really means and how it can empower data center design and operations.

In light of all this complexity, I believe it’s essential that we talk about the digital twin concept in its most complete guise.

It should be integrated within the information systems of the owner or operator so that it’s always accurate and can be relied upon to give a real account of what’s happening in the data center from an airflow, power, cooling and capacity perspective.

With this capability, data center professionals can explore and address almost any issue or opportunity they’re facing within a safe, digital model.

After all, data center outages are becoming more severe and more frequent. The share of data center operators reporting an outage jumped from 50 percent in 2019 to 78 percent in 2020, and 75 percent of those believe their downtime was preventable.

The digital twin can be, and in fact already is, the answer to this.

A powerful decision-making engine

Change is both a friend and a foe for those of us in the data center world. We’re naturally curious creatures who like to engineer new things and solve problems that don’t have obvious solutions. But we also want things to work flawlessly, and, in the data center, change can get in the way of this objective.

That’s why I believe the concept of a digital twin is so important to our industry today. If you have the right model, you can observe, understand, and tinker with your overall ecosystem, and then execute meaningful change in the real environment without risking live operations.

You can see the complexity of your estate, from plug to PDU, in a digitized, streamlined form. What’s more, you can improve the capacity planning process and validate your assumptions in the model, as well as test failure scenarios under a wide range of conditions without fear of the consequences.

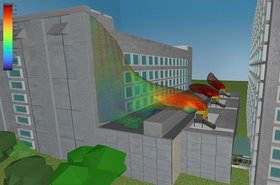

These capabilities all become available with digital twin modelling enabled by computational fluid dynamics (CFD), and they are the reason the concept is gaining such momentum.

It’s not all change

Legacy data centers are significant beneficiaries of this capability. While some firms are able to keep pace with digital proliferation through the acquisition of new space, others want to get the most from what they have.

Under commercial, geographical, and availability pressures, many data center leaders will ask how they can accommodate new applications within their existing architecture. This is exactly where the digital twin steps up.

Freeing up capacity trapped within an already excruciatingly expensive facility is a real win in the current climate.

It not only solves one of the key challenges of the digital economy – firms having the performance they need across all their applications and data management solutions – but is also good for corporate responsibility.

Freed capacity typically means buying less hardware, with its rare minerals and shipping footprint, while making better use of cooling and power within the facility and so improving the data center’s overall energy efficiency.

So it’s fair to say that for many firms the starting point with the digital twin will be about doing more with what they have. However, for those wanting to really push the boundaries, it’s pretty good at that too.

Evolving use cases in the data center

Industries with high demand for IoT – for example, healthcare and manufacturing – are increasingly exploring how far they can push the envelope within their facilities. But high-density deployments are difficult to manage from a thermal-load perspective.

Where margins are tight or, more critically, lives are at risk, near-zero tolerance for outages and downtime is non-negotiable. With a digital twin you can trial and test cooling, airflow, and heat behaviour on a completely risk-free basis, which will be critical when deploying these high-density configurations.

A step further along the scale, digital twins are already in use to accelerate the delivery of supercomputing capabilities.

NVIDIA built its Cambridge-1 supercomputer in under 20 weeks – a world record – showing how simulation can enhance and streamline the design of computing at the very highest level.

As edge computing evolves, high-density deployments become commonplace, supercomputing adoption accelerates, and operators continue striving to optimize their data centers and make them more environmentally friendly, the digital twin is going to take center stage.

Truly effective digital twin simulations depend on complex technology, such as the computational fluid dynamics modelling to which I’ve dedicated most of my career. But it’s clear that when a powerful digital twin is applied to complex problems, there’s only one winner.
