Vultr has added AMD's Instinct MI300X GPUs to its artificial intelligence (AI) cloud.

Alongside the AMD accelerators, customers can access the AMD ROCm open software for their AI deployments.

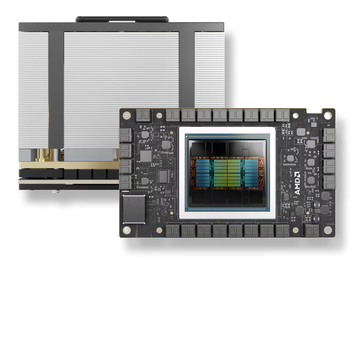

AMD Instinct MI300X GPUs are built on the AMD CDNA 3 architecture and are designed for AI, including generative AI, and high-performance computing (HPC) workloads.

The GPUs feature 19,456 stream processors and 1,216 matrix cores. The accelerators have a peak eight-bit precision (FP8) performance with structured sparsity (E5M2, E4M3) of 5.22 petaflops, and a peak double-precision (FP64) performance of 81.7 teraflops.
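Because the two peak figures are quoted in different units, a quick calculation helps put them on the same scale. The Python sketch below is illustrative only: it uses just the numbers quoted above, the variable names are our own, and the results are ratios of theoretical peaks, not measured performance.

```python
# Figures quoted in the article for the AMD Instinct MI300X.
STREAM_PROCESSORS = 19_456
MATRIX_CORES = 1_216
FP8_SPARSE_PFLOPS = 5.22   # peak FP8 with structured sparsity, in petaflops
FP64_TFLOPS = 81.7         # peak double precision, in teraflops

TFLOPS_PER_PFLOPS = 1_000  # 1 petaflops = 1,000 teraflops

# Convert the FP8 figure to teraflops so both peaks share a unit.
fp8_sparse_tflops = FP8_SPARSE_PFLOPS * TFLOPS_PER_PFLOPS

# Ratio of the two theoretical peaks (not a benchmark result).
fp8_to_fp64_ratio = fp8_sparse_tflops / FP64_TFLOPS

# How many stream processors there are for each matrix core.
stream_processors_per_matrix_core = STREAM_PROCESSORS / MATRIX_CORES

print(f"FP8 (sparse) peak: {fp8_sparse_tflops:,.0f} teraflops")
print(f"FP8 (sparse) to FP64 peak ratio: {fp8_to_fp64_ratio:.1f}x")
print(f"Stream processors per matrix core: {stream_processors_per_matrix_core:.0f}")
```

On these figures, the sparse FP8 peak is roughly 64 times the FP64 peak, and there are 16 stream processors for every matrix core.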

“Innovation thrives in an open ecosystem,” said J.J. Kardwell, CEO of Vultr.

“The future of enterprise AI workloads is in open environments that allow for flexibility, scalability, and security. AMD accelerators give our customers unparalleled cost-to-performance. The balance of high memory with low power requirements furthers sustainability efforts and gives our customers the capabilities to efficiently drive innovation and growth through AI.”

Negin Oliver, CVP of business development, data center GPU business unit, AMD, added: “We are proud of our close collaboration with Vultr, as its cloud platform is designed to manage high-performance AI training and inferencing tasks and provide improved overall efficiency.”

Vultr's GPU cloud also offers Nvidia H100 GPUs and GH200 chips. In April 2024, the company launched a new sovereign and private cloud offering. The company has a presence in 32 data centers across six continents.

Competitor Nscale offers a GPU cloud based on MI300X accelerators as well as AMD MI250 GPUs and Nvidia’s A100, H100, and V100 GPUs.

In August 2024, AMD launched ROCm 6.2, the latest version of its open-source software stack. First launched in 2016, ROCm consists of drivers, development tools, compilers, libraries, and APIs to support programming for generative AI and HPC applications on AMD GPUs. The latest version offers extended virtual large language model (vLLM) support, enhanced AI training and inferencing on AMD Instinct accelerators, and broader FP8 support.