Oracle Cloud Infrastructure (OCI) is now offering AMD Instinct MI300X GPUs with ROCm software.

The accelerators will power Oracle's newest OCI Compute Supercluster instance, BM.GPU.MI300X.8. The Supercluster will be able to support up to 16,384 GPUs in a single cluster.

The AMD MI300X GPUs will be able to use the same ultrafast network fabric technology used by other accelerators on OCI, and are designed for artificial intelligence (AI) workloads including large language model (LLM) inference and training.

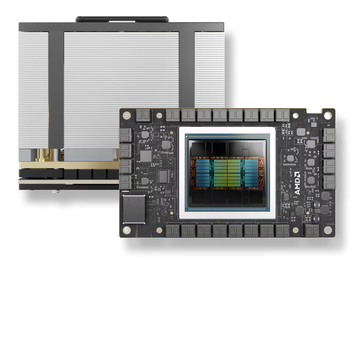

AMD Instinct MI300X GPUs are built on the AMD CDNA 3 architecture and are designed for AI, including generative AI, and high-performance computing (HPC) workloads.

Each GPU features 19,456 stream processors and 1,216 matrix cores. Each accelerator has a peak eight-bit precision (FP8) performance with structured sparsity (E5M2, E4M3) of 5.22 petaflops, and a peak double precision (FP64) performance of 81.7 teraflops.

“AMD Instinct MI300X and ROCm open software continue to gain momentum as trusted solutions for powering the most critical OCI AI workloads,” said Andrew Dieckmann, CVP and GM, Data Center GPU Business, AMD.

“As these solutions expand further into growing AI-intensive markets, the combination will benefit OCI customers with high performance, efficiency, and greater system design flexibility.”

“The inference capabilities of AMD Instinct MI300X accelerators add to OCI’s extensive selection of high-performance bare metal instances to remove the overhead of virtualized compute commonly used for AI infrastructure,” added Donald Lu, SVP, software development, OCI. “We are excited to offer more choice for customers seeking to accelerate AI workloads at a competitive price point.”

This week also saw Vultr add AMD's Instinct MI300X GPUs to its AI cloud.

Nscale also offers the accelerators via its GPU cloud, which is based on MI300X accelerators as well as AMD MI250 GPUs and Nvidia’s A100, H100, and V100 GPUs.

In August 2024, AMD launched ROCm 6.2, the latest version of its open source software stack. First launched in 2016, ROCm consists of drivers, development tools, compilers, libraries, and APIs to support programming for generative AI and HPC applications on AMD GPUs. The latest version offers extended support for the vLLM inference library, enhanced AI training and inferencing on AMD Instinct accelerators, and broader FP8 support.

Earlier this month, Oracle shared that its investment in chipmaker Ampere Computing could give it the option for future ownership. Ampere currently estimates that 95 percent of Oracle's services use Ampere chips.