Facebook is developing its own suite of machine learning chips to handle common workloads on its social media platforms.

The Information reports that one chip processes machine learning for tasks such as recommending content to users, while another focuses on handling video transcoding.

More than 100 people are thought to be working on the machine-learning chip projects.

The move should not come as a huge surprise: back in 2018, Facebook hired Shahriar Rabii, the founder of Google’s hardware technology engineering team and consumer silicon team, and appointed him as its new Head of Silicon.

The initiative also echoes similar steps by other hyperscalers, which are using their large scale and their understanding of their own workloads to develop workload-specific chips that are more efficient and that reduce their reliance on companies like Intel, AMD, and Nvidia.

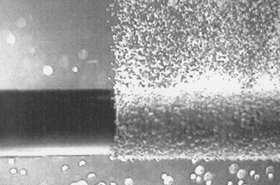

Earlier this year, Google revealed that it had developed a custom 'Argos' video-transcoding chip to provide "up to 20-33x improvements in compute efficiency compared" to its previous traditional server setup.

Google has also developed its own TPU family of AI processors and its Titan line of security chips, and this year hired Intel's Uri Frank to build a System on Chip.

Amazon Web Services, meanwhile, has its own Arm CPU line, Graviton, as well as AI training (Trainium) and inference (Inferentia) chips. Microsoft is also believed to be working on its own Arm chips.