Google has announced the latest version of its custom AI chip, the Tensor Processing Unit (TPU).

At its I/O developer conference this week, the company claimed that the fourth-generation TPU is twice as powerful as the previous iteration.

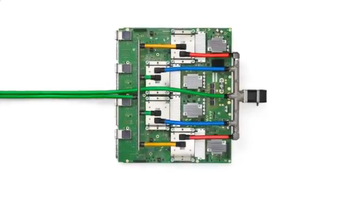

Google deploys the chips in its own data centers, combining them into pods of 4,096 TPUs. Each pod delivers more than one exaflop of computing power, the company claimed. Google did not disclose the benchmark it used, but the figure was likely measured at single precision or lower, rather than the double precision used to rank Top500 systems.

“This is the fastest system we’ve ever deployed at Google and a historic milestone for us,” CEO Sundar Pichai said.

“Previously, to get an exaflop, you needed to build a custom supercomputer, but we already have many of these deployed today and will soon have dozens of TPUv4 pods in our data centers, many of which will be operating at or near 90 percent carbon-free energy. And our TPUv4 pods will be available to our cloud customers later this year.”

The pods feature “10x interconnect bandwidth per chip at scale than any other networking technology,” Pichai claimed.

Google does not sell its TPUs, instead keeping them exclusive to its cloud service. Rival Amazon Web Services has developed its own Trainium and Inferentia chips with a similar aim.

Google also uses custom chips for video transcoding at YouTube and has created specialized security chips. Earlier this year, the company hired Intel's Uri Frank to lead the development of more ambitious systems-on-chip (SoCs).