At its annual GPU Technology Conference, Nvidia unveiled its latest DGX system, the DGX A100.

Updated to include the latest A100 GPU and NVSwitch interconnection tech, the system will cost $199,000. A similar HGX A100 will target cloud providers, while a lower-powered EGX A100 is for Edge use cases.

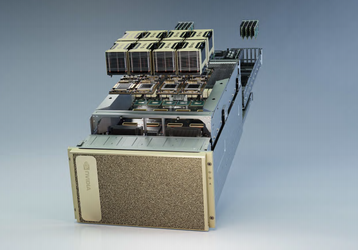

A beast in a box

The DGX A100 packs eight of the eponymous GPUs, along with six NVSwitches, 15TB of Gen 4 NVMe SSD storage, nine Mellanox ConnectX-6 VPI 200Gbps network interfaces, and two 64-core AMD Rome CPUs with 1TB of RAM.

This all adds up to five petaflops of FP16 performance, 2.5 petaflops of TF32, and 156 teraflops of FP64. With INT8, you're looking at 10 petaops.
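To put those system-level numbers in per-GPU terms, the short Python sketch below simply divides each headline figure by the eight GPUs in the box. It assumes throughput splits evenly across the GPUs and that the quoted totals are rounded, so the results are approximations rather than official per-chip specifications. The variable names are illustrative only.

```python
# Headline DGX A100 figures quoted above (whole system, eight GPUs).
GPUS_PER_DGX = 8
system_totals = {
    "FP16 (teraflops)": 5_000,   # five petaflops
    "TF32 (teraflops)": 2_500,   # 2.5 petaflops
    "FP64 (teraflops)": 156,
    "INT8 (teraops)": 10_000,    # 10 petaops
}

# Assumption: performance divides evenly across the GPUs.
per_gpu = {name: total / GPUS_PER_DGX for name, total in system_totals.items()}

for name, value in per_gpu.items():
    print(f"{name}: {value:g} per GPU")
```

On these assumptions, each A100 contributes roughly 625 teraflops of FP16, 312.5 teraflops of TF32, 19.5 teraflops of FP64, and 1,250 teraops of INT8.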

Early purchasers of the DGX include the US Department of Energy’s Argonne National Laboratory (which will use it in the fight against Covid-19), the University of Florida, and the German Research Center for Artificial Intelligence.

The four-GPU hyperscale variant, the HGX A100, will be used by Alibaba, AWS, Baidu, Google Cloud, Microsoft Azure, Oracle, and Tencent.

For those in need of a lot of compute power, Nvidia is bundling 140 DGX A100s together as a 'DGX SuperPOD,' capable of 700 petaflops of AI performance. Nvidia will also expand its own internal Saturn V supercomputer with four SuperPODs, adding 2.8 exaflops of AI computing power for a total of 4.6 exaflops. Note: 'AI computing power' is a more generous benchmark than those traditionally used to judge whether a supercomputer is exascale.
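The SuperPOD and Saturn V figures follow from simple multiplication, which the Python sketch below walks through. It assumes the "AI performance" of a single DGX A100 is the five-petaflop figure quoted earlier and that systems scale linearly with no interconnect overhead. The constant names are illustrative, and the "implied existing" value is a subtraction from the article's numbers, not a figure Nvidia has stated.

```python
# Figures taken from the article; all values in petaflops of "AI performance".
DGX_A100_AI_PETAFLOPS = 5          # assumed equal to the FP16 headline figure
DGX_PER_SUPERPOD = 140
NEW_SUPERPODS = 4
SATURN_V_TOTAL_PETAFLOPS = 4_600   # 4.6 exaflops after the expansion

# Assumption: performance scales linearly with the number of systems.
superpod_petaflops = DGX_PER_SUPERPOD * DGX_A100_AI_PETAFLOPS
added_petaflops = NEW_SUPERPODS * superpod_petaflops

# Derived by subtraction, not stated in the source.
implied_existing_petaflops = SATURN_V_TOTAL_PETAFLOPS - added_petaflops

print(f"One SuperPOD: {superpod_petaflops} petaflops")
print(f"Four SuperPODs: {added_petaflops / 1000} exaflops")
print(f"Implied existing Saturn V capacity: {implied_existing_petaflops / 1000} exaflops")
```

The numbers are consistent: 140 systems at five petaflops each give 700 petaflops, four SuperPODs give 2.8 exaflops, and the 4.6 exaflop total implies about 1.8 exaflops of AI computing power already in Saturn V.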

On the other end of the spectrum is the EGX A100, for the Edge. It has just one A100, and includes an Nvidia Mellanox ConnectX-6 SmartNIC for fast networking capabilities.