There’s no question that data center networks are conquering the world. But more data, more bandwidth and more network connectivity mean more complexity, and that calls for a new approach to network test and monitoring.

Historically, data center customers limited network testing to the copper and fiber cabling infrastructure and to packet-level testing, and only for the network inside the DC building.

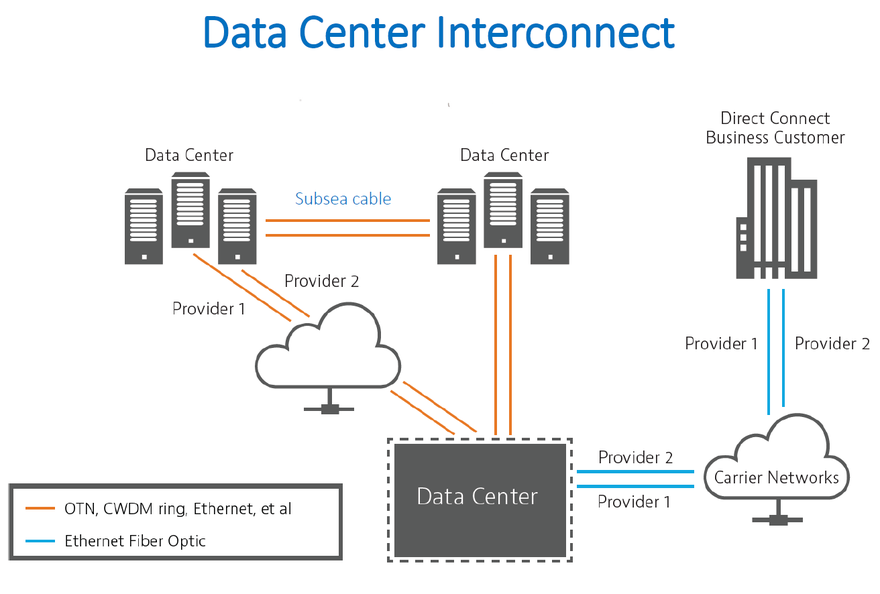

This approach has been valid for many years, but it is now challenged by the new era of “mesh” networks and Data Center Interconnects. More visibility is required, and monitoring critical parameters such as latency, optical link degradation and throughput is becoming part of the strategy of data center companies, as it has been for many years among network service providers.

In the middle of the digital transformation era, with millions of dollars being invested in network infrastructure, many organizations are still unclear on how to test, and what to test, in their new networks to avoid bottlenecks and downtime.

Overall, data center customers are looking for lower latency, higher resiliency and lower cost per bit. For the DCI (Data Center Interconnect) specifically, the technology used to carry data between sites is typically DWDM (Dense Wavelength Division Multiplexing), which transports multiple optical carrier signals over a single optical fiber by using different wavelengths.

We can classify DCIs according to the distance between the two data centers they connect:

- Campus DCI: Interconnects within a small geographical area, typically shorter than 5 km, as in a “campus” environment

- Metro DCI: Interconnects between nearby cities or across mid-size geographical areas, with distances up to 80 km (no amplification needed)

- Long haul DCI (terrestrial, aerial or submarine): Interconnects between cities, countries or even continents over long haul links that range from 80 km to thousands of km. Submarine networks are a good example: the optical signals need to be amplified by ILAs (In-Line Amplifiers) to reach the remote location.
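
As a rough illustration, the distance classes above can be written as a small lookup. This is only a sketch: the function name `classify_dci` is hypothetical, and the boundaries are simply the 5 km and 80 km figures quoted in the list, which real deployments will not follow this strictly.

```python
def classify_dci(distance_km: float) -> str:
    """Return the DCI class for a link of the given length.

    The thresholds are the approximate figures from the list above,
    not a formal standard.
    """
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    if distance_km < 5:
        return "campus"
    if distance_km <= 80:
        return "metro"
    return "long haul"


if __name__ == "__main__":
    for km in (2, 40, 6000):
        print(f"{km} km -> {classify_dci(km)}")
```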

In this scenario, the best network performance cannot be guaranteed by looking at the DC building alone. We need to consider the entire network link end-to-end. The new DC network looks more like a traditional telco backbone, with tight network dependencies and redundant, seamless connections that link data centers, building clusters and ultimately availability zones to guarantee resiliency for IoT and Cloud applications.

Now, what network parameters should one look at in the DCI? Below are five fundamental tests that should be done at installation, at turn-up and/or during the operational phase of the network:

Latency: The time that it takes for a packet to get from one point of the network to another. Typically measured in milliseconds (ms), it’s one of the main drivers for data center companies to build new sites geographically closer to their customers. High latency means a slower network.
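
To see why distance drives latency, here is a minimal sketch of the propagation delay that the fiber alone contributes. It assumes a group index of about 1.468 for standard single-mode fiber (roughly 4.9 microseconds per km), and it ignores equipment, queuing and protocol delays, so a real measurement will be higher. The function name is hypothetical.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458  # speed of light in vacuum
ASSUMED_GROUP_INDEX = 1.468            # assumption: typical single-mode fiber


def fiber_one_way_latency_ms(distance_km: float,
                             group_index: float = ASSUMED_GROUP_INDEX) -> float:
    """One-way propagation delay over the fiber, in milliseconds."""
    seconds = distance_km * group_index / SPEED_OF_LIGHT_KM_PER_S
    return seconds * 1000.0


if __name__ == "__main__":
    one_way = fiber_one_way_latency_ms(80)  # an 80 km metro link
    print(f"one-way: {one_way:.3f} ms, round trip: {2 * one_way:.3f} ms")
```

Under these assumptions, an 80 km link adds about 0.39 ms each way before any network equipment is involved.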

Throughput: The amount of data moved successfully from one point of the network to another in a given time period. It is typically measured in gigabits per second (Gbps), against line rates such as 10, 100 or 400 Gbps. It gives an idea of the “real” data being transmitted versus the theoretical speed of the connection.
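
The comparison between “real” and theoretical speed comes down to simple arithmetic. The sketch below uses hypothetical function names and made-up sample numbers; in practice the bit count and duration would come from a test set or traffic generator.

```python
def throughput_gbps(bits_received: float, duration_s: float) -> float:
    """Measured throughput in Gbps from a bit count and a test duration."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    return bits_received / duration_s / 1e9


def utilization(measured_gbps: float, line_rate_gbps: float) -> float:
    """Fraction of the theoretical line rate actually achieved."""
    return measured_gbps / line_rate_gbps


if __name__ == "__main__":
    # Illustrative values only: 9.4e11 bits received in 10 s on a 100 Gbps link.
    measured = throughput_gbps(9.4e11, 10)
    print(f"{measured:.1f} Gbps, {utilization(measured, 100):.0%} of line rate")
```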

BERT (Bit Error Rate Test): Measures the bit error rate (BER), the number of bit errors in a transmission between two network elements divided by the total number of bits transmitted. It is expressed as a percentage or, more commonly, as 10 to a negative power (e.g. 1 x 10^-9). A transmission link with a low BER provides a “cleaner” communication between transmitter and receiver.
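
A practical question with this test is how long it has to run. The sketch below computes the error ratio from counters and estimates the error-free run time needed to claim a target ratio, using the common zero-error rule that the required bit count is -ln(1 - confidence) divided by the target. The function names, the 95% confidence level and the sample values are assumptions for illustration.

```python
import math


def bit_error_ratio(errored_bits: int, total_bits: float) -> float:
    """Errored bits divided by total bits transmitted."""
    if total_bits <= 0:
        raise ValueError("total_bits must be positive")
    return errored_bits / total_bits


def error_free_test_seconds(target_ber: float, line_rate_bps: float,
                            confidence: float = 0.95) -> float:
    """Seconds of error-free traffic needed to support target_ber
    at the given confidence level (zero errors observed)."""
    bits_needed = -math.log(1.0 - confidence) / target_ber
    return bits_needed / line_rate_bps


if __name__ == "__main__":
    print(f"BER: {bit_error_ratio(3, 3e9):.1e}")
    # Illustrative: target of 1e-12 on a 10 Gbps link.
    print(f"test time: {error_free_test_seconds(1e-12, 10e9):.0f} s")
```

With these example values the test needs roughly five minutes of error-free traffic, and the time grows as the target gets stricter or the line rate gets lower.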

Optical signal degradation: Also known as link loss. It is the amount of optical power lost between the transmitter and receiver in the transmission link. It is expressed in dB (the attenuation of the fiber itself is specified in dB/km), and it includes the losses of all elements in the optical link associated with the infrastructure (cables, connectors, splices, etc.) as well as potential defects in the installation (e.g. macrobends). If the received optical signal is too low, the network equipment will struggle to decode the symbols and will consequently generate more errors at higher layers.
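
An expected loss figure can be estimated before anyone measures the link, by adding up the contribution of each element. The sketch below is a simplified loss budget. The default values (0.25 dB/km for fiber, 0.5 dB per connector, 0.1 dB per splice) are assumed typical figures rather than values from any specific datasheet, and the function name is hypothetical.

```python
def link_loss_db(length_km: float, connectors: int, splices: int,
                 fiber_db_per_km: float = 0.25,
                 connector_db: float = 0.5,
                 splice_db: float = 0.1) -> float:
    """Estimated end-to-end loss in dB: fiber + connectors + splices.

    All per-element defaults are assumptions; replace them with the
    values specified for the actual components.
    """
    return (length_km * fiber_db_per_km
            + connectors * connector_db
            + splices * splice_db)


if __name__ == "__main__":
    # Illustrative 40 km metro link with 4 connectors and 10 splices.
    expected = link_loss_db(40, connectors=4, splices=10)
    print(f"expected loss: {expected:.1f} dB")  # prints "expected loss: 13.0 dB"
```

A measured loss well above the estimate is a hint to look for defects such as macrobends or dirty connectors.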

OSNR (Optical Signal-to-Noise Ratio): The ratio between the power of the transmitted signal and the power of the noise that travels along the optical link. It is typically expressed in dB (decibels). A higher OSNR indicates better transmission quality and a lower error rate when decoding the symbols. In DWDM networks, we need to ensure high OSNR levels at all wavelengths in the transmission spectrum to guarantee the best optical channel performance.
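
The ratio converts to decibels as 10 x log10(signal power / noise power). The sketch below applies that conversion and flags DWDM channels that fall under a minimum level. The function names, the channel labels, the readings and the 18 dB threshold are all made up for illustration; the acceptable minimum depends on the transceiver and modulation in use.

```python
import math


def osnr_db(signal_mw: float, noise_mw: float) -> float:
    """OSNR in dB from signal and noise power in the same linear unit."""
    return 10.0 * math.log10(signal_mw / noise_mw)


def channels_below(osnr_by_channel: dict[str, float],
                   min_osnr_db: float) -> list[str]:
    """Names of channels whose OSNR is under the required minimum."""
    return [ch for ch, value in osnr_by_channel.items() if value < min_osnr_db]


if __name__ == "__main__":
    print(f"{osnr_db(1.0, 0.01):.1f} dB")
    # Hypothetical per-wavelength readings, e.g. from a spectrum analyzer.
    readings = {"ch21": 22.4, "ch22": 17.1, "ch23": 21.9}
    print(channels_below(readings, min_osnr_db=18.0))
```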

Although these metrics have existed for years and have long been used by telco network players, many data center operators are only starting to adopt them as standard in their test procedures. In my experience, many users don’t react to network faults until a problem happens, and by then it’s too late. High repair costs, the labor involved and loss of reputation can do greater damage to the organization. Testing proactively and anticipating potential network issues helps prevent higher costs, deployment delays and big headaches in the operational phase.

Conclusion:

Data center networks have become the cornerstone of modern communications. They require ultra-high bandwidth interconnections to maximize the amount of data transmitted. These interconnects face the same challenges that traditional telco networks have faced, so it’s fundamental to verify parameters like latency, throughput and optical link degradation in the Data Center Interconnects.

Designing an adequate network test and monitoring strategy for the DCI is fundamental to reducing risk for the organization and preventing network downtime.