Over the last 20 years, the storage industry has gone through several major technology transitions: RISC to CISC, bare metal to virtualization, on-premises to cloud and hybrid and, most recently, monolithic applications to scale-out containerized workloads (such as Kubernetes infrastructures).

Industry experts argue that Kubernetes-managed containerization can be a game-changer, but today’s available storage technology has inhibited the broad adoption of these infrastructures – until now.

The NVMe over Fabrics (NVMe-oF) protocol delivers the storage infrastructure breakthrough that stateful applications, such as databases, have needed in order to run well on Kubernetes-based infrastructures.

Container orchestration delivers infrastructure agility

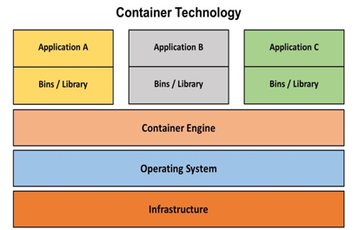

Container technology enables an application to run, with all of its dependencies, in an isolated state from other applications or processes.

Application containers typically run in virtual machines (VMs) or on bare-metal systems, enabling a host to support multiple operating systems and different container types – all sharing the same physical resources (Figure 1). Containers orchestrated by a Kubernetes platform deliver a highly flexible form of virtualization.

Direct-attached storage inhibits Kubernetes infrastructure adoption

Data centers have typically been built as general-purpose architectures that use a preconfigured allocation of compute and storage resources on each server to handle all application and workload demands.

As data-intensive applications scale, these general-purpose architectures fall short on performance, capacity and scalability. Databases are the epitome of data-intensive applications, and they have not translated well to Kubernetes-managed containerization because of a long-standing storage trade-off: local storage is fast, and remote storage is slow.

Keeping data local, however, means moving it whenever the application moves, and that is not practical. This performance trade-off and the inflexibility of data locality have made stateful workloads a challenge for container orchestration.

NVMe-oF to the rescue

The Non-Volatile Memory Express (NVMe) protocol is designed specifically for flash-based storage devices. Devices based on this specification currently deliver the industry’s highest bandwidth and IOPS performance, and connect to servers and storage through the PCIe interface bus.

This enables a 2.5-inch x4 NVMe SSD to move data at speeds approaching 4 gigabytes per second (GB/s) with PCIe 3.0 and 8 GB/s with PCIe 4.0. When processing huge amounts of data at large scale, the NVMe specification unlocks the performance of the underlying flash media by reducing CPU load and driver latency, so NVMe SSDs can handle these workloads much faster and more efficiently than drives on legacy interfaces such as SATA.
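As a rough check on these figures, the sketch below derives the raw x4 link bandwidth from the per-lane transfer rate and the 128b/130b line encoding used by PCIe 3.0 and 4.0. The function name and structure are illustrative only, and the result is an upper bound that ignores packet and protocol overhead, so real drives land somewhat below it.

```python
# Back-of-the-envelope PCIe link bandwidth for an x4 NVMe SSD.
# Assumption: raw link rate only; packet and protocol overhead are ignored.

GT_PER_S = {"PCIe 3.0": 8.0, "PCIe 4.0": 16.0}  # giga-transfers/s per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def link_bandwidth_gbs(generation: str, lanes: int = 4) -> float:
    """Return raw link bandwidth in GB/s (10^9 bytes per second)."""
    bits_per_second = GT_PER_S[generation] * 1e9 * ENCODING_EFFICIENCY * lanes
    return bits_per_second / 8 / 1e9


for gen in GT_PER_S:
    print(f"{gen} x4: {link_bandwidth_gbs(gen):.2f} GB/s")
```

This works out to roughly 3.94 GB/s for PCIe 3.0 and 7.88 GB/s for PCIe 4.0, which is why the drive speeds are quoted as approaching 4 GB/s and 8 GB/s.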

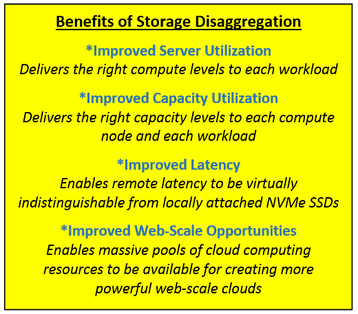

The NVMe standard has also been extended beyond locally attached devices with the advent of the NVMe-oF specification. This specification enables flash storage to be shared over standard networks while delivering performance and latency comparable to locally attached drives – removing the traditional performance penalty of remote storage. It also provides a new data center storage deployment option, called ‘storage disaggregation.’

In this configuration, NVMe drives are centralized into standard storage nodes with high-bandwidth network interfaces that serve up storage volumes to the vast majority of servers, which behave as simple compute nodes without local storage.

All the compute nodes access their storage volumes from these shared NVMe-oF storage nodes. With this approach, pooled storage can be accessed at much lower latencies than was previously possible, and at a lower cost. When enough network resources are available, there is virtually no limit on how many servers can share NVMe-oF storage or how many NVMe SSDs can be shared. The end result is that the right amount of storage and compute can be allocated for each application workload while delivering nearly the same high-performance, low-latency capabilities as locally attached NVMe SSDs.

By removing the performance trade-off between local and remote storage, the NVMe-oF specification adds a level of flexibility and scalability that is critical in a Kubernetes infrastructure.
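To give a feel for what "enough network resources" means, the sketch below estimates how many NVMe SSDs a storage node's network links can serve at full drive speed. The link speeds and the per-drive figure of about 3.94 GB/s (a PCIe 3.0 x4 ceiling) are assumptions chosen for illustration, and network protocol overhead is ignored.

```python
# Rough sizing: how many SSDs can a network link feed at full drive speed?
# All numbers below are illustrative assumptions, not measurements.


def full_rate_drives(link_gbit_per_s: float, drive_gbyte_per_s: float) -> int:
    """Number of drives whose combined throughput fits within the link."""
    link_gbyte_per_s = link_gbit_per_s / 8  # bits -> bytes
    return int(link_gbyte_per_s // drive_gbyte_per_s)


DRIVE_GBS = 3.94  # assumed per-drive throughput in GB/s

for link in (100, 200, 400):  # assumed aggregate link speeds in Gb/s
    print(f"{link} Gb/s of network: {full_rate_drives(link, DRIVE_GBS)} drives at full rate")
```

Under these assumptions a single 100 Gb/s link carries about three drives' worth of sequential throughput, so a storage node with many SSDs needs several fast links, or relies on the fact that drives are rarely all driven at peak simultaneously.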

How does the NVMe-oF specification assist Kubernetes infrastructures?

The NVMe-oF specification enables containers to scale data-intensive applications, such as databases, over disaggregated storage so the workloads can be orchestrated with the right level of resources (i.e., compute, memory, storage and network bandwidth). Centrally pooled NVMe SSDs are connected to orchestrated container frameworks, such as Kubernetes infrastructures, through the well-defined Container Storage Interface (CSI) standard. With CSI enabled, dynamic volume scaling can be delivered transparently: if the workload requires additional storage resources, storage management software can allocate them on demand. When the workload's storage requirement subsides or ends, the spare capacity can be returned to the shared resource pool.

The combination of NVMe-oF-native storage volume management software and a CSI driver enables data centers to manage and monitor their physical resources efficiently while providing a more agile, demand-driven environment. This effectively delivers NVMe flash as a service in a cloud infrastructure.
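The allocate, grow and release cycle described above can be sketched as a toy capacity pool. The `StoragePool` class and its methods are hypothetical names invented for this illustration; they loosely mirror the CSI controller operations CreateVolume, ControllerExpandVolume and DeleteVolume, but this is not a CSI driver and it models capacity accounting only.

```python
# Toy model of a shared NVMe-oF capacity pool (all names are hypothetical).
# It tracks capacity only; no real volumes or network targets are created.


class StoragePool:
    def __init__(self, capacity_gib: int) -> None:
        self.capacity_gib = capacity_gib
        self.volumes = {}  # volume name -> allocated size in GiB

    @property
    def free_gib(self) -> int:
        return self.capacity_gib - sum(self.volumes.values())

    def create_volume(self, name: str, size_gib: int) -> None:
        if name in self.volumes:
            raise ValueError(f"volume {name!r} already exists")
        if size_gib > self.free_gib:
            raise RuntimeError("not enough free capacity in the pool")
        self.volumes[name] = size_gib

    def expand_volume(self, name: str, new_size_gib: int) -> None:
        growth = new_size_gib - self.volumes[name]
        if growth < 0:
            raise ValueError("shrinking is not modeled in this sketch")
        if growth > self.free_gib:
            raise RuntimeError("not enough free capacity in the pool")
        self.volumes[name] = new_size_gib

    def delete_volume(self, name: str) -> None:
        # Capacity returns to the pool as soon as the volume is removed.
        del self.volumes[name]


pool = StoragePool(capacity_gib=10_000)
pool.create_volume("db-0", 500)     # initial claim for a database
pool.expand_volume("db-0", 2_000)   # workload grows, volume is resized
print(pool.free_gib)                # 8000
pool.delete_volume("db-0")          # workload ends
print(pool.free_gib)                # 10000
```

The point of the model is that capacity belongs to the pool rather than to any one server, so a volume can grow or disappear without stranding flash on a particular host.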

Storage abstraction is key

Storage volume management software that natively supports the NVMe-oF specification is key to realizing the benefits of orchestrated containerization. It provisions the resource pool to provide the right amount of storage or compute for each application workload, on each server within the cloud data center – all while preserving the high-performance and low-latency benefits of NVMe SSD devices.

Some software solutions available today include a direct interface to Kubernetes orchestration frameworks, enabling the resources to be monitored and managed in sync with Kubernetes infrastructures. When shopping for this type of cloud storage volume management software, make sure it includes an interface to a container orchestration framework, through an API or tool, that manages the resources and delivers dynamic scaling as needed.

Conclusion

Moving stateful applications, such as databases, from a direct-attached storage architecture to a disaggregated architecture based on an NVMe-oF shared storage model offers dramatic increases in resource utilization. It also helps to significantly reduce storage overprovisioning and the associated costs. The combination of storage volume management software, the NVMe-oF specification and the CSI standard enables containerized Kubernetes infrastructures to dynamically and efficiently scale data-intensive workloads on disaggregated storage.

With this solution, disaggregated storage becomes the preferred, cost-efficient storage choice for Kubernetes infrastructures and accelerates industry adoption of container-based orchestration. Thus, the NVMe-oF specification delivers the storage breakthrough that Kubernetes infrastructures have needed to increase their penetration within the cloud computing landscape.