Supercomputers have always been at the forefront of cooling technologies, with their high power densities demanding careful attention.

At DCD>San Francisco, we learned what it will take to keep up with the next great supercomputing challenge: Exascale.

Cooling El Capitan

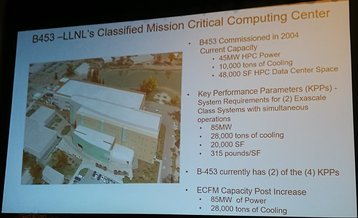

During a panel on different cooling solutions, Lawrence Livermore National Laboratory systems engineer Chris DePrater detailed the lab's plans for the B453 Simulation Facility, used to run nuclear weapons simulations as part of the NNSA's Stockpile Stewardship program.

"I've been in our facility since it was opened in 2004," DePrater said. The facility was originally known as the Terascale Simulation Facility, a title it quickly outgrew.

"At launch, it was primarily an air-cooled, 48,000 square foot data center, [but] since the inception of our building we've been in constant construction. We've been able to keep up with the vendors and actually modernize our facility along the way.

"When we commissioned our building, it was 12MW, 6,800 tons of cooling, but now we're at 45MW, 10,000 tons of cooling - still with 48,000 square feet of space."

Now, DePrater's team are upgrading the site for exascale supercomputers. "We polled the major vendors and we asked them about weights, we asked them about the cooling, we asked them about the power," he said.

"And to get to exascale with those vendor parameters, we need to be at 85MW, 28,000 tons of cooling, and we need approximately 20,000 square feet of space. Those are our key performance parameters to get there."
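To put the cooling figures on the same footing as the power figures, tons of refrigeration can be converted to megawatts of heat removal. The sketch below is a rough illustration that assumes the standard conversion of 1 ton = 12,000 BTU/h, or about 3.517 kW. The helper `tons_to_mw` is hypothetical, and the conversion says nothing about how LLNL divides the load between air and water loops.

```python
# Assumption: 1 ton of refrigeration = 12,000 BTU/h, roughly 3.517 kW of heat removal.
KW_PER_TON = 3.517


def tons_to_mw(tons: float) -> float:
    """Convert cooling capacity in tons of refrigeration to megawatts (thermal)."""
    return tons * KW_PER_TON / 1000.0


# (power in MW, cooling in tons) as quoted for B453.
stages = {
    "2004 commissioning": (12, 6_800),
    "today": (45, 10_000),
    "exascale target": (85, 28_000),
}

for label, (power_mw, cooling_tons) in stages.items():
    cooling_mw = tons_to_mw(cooling_tons)
    print(f"{label}: {power_mw} MW power, {cooling_tons:,} tons = {cooling_mw:.1f} MW thermal")
```

Under this assumption, the 28,000-ton target works out to roughly 98.5MW of heat removal.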

That 85MW is across two exascale supercomputers, with a 2017 Campus Capability plan noting that the 'Exascale Complex Facility Modernization (ECFM) project' will provide "infrastructure to site an exascale-class system in 2022, with full production initiating in 2023, and to site a subsequent exascale system in 2028."

That first system will be known as El Capitan. It will follow two other exascale systems due in 2021: the ~13MW, one exaflops Aurora at Argonne, and the ~40MW, 1.5 exaflops Frontier at Oak Ridge.

The plan adds that to get to 85MW a new substation will be installed, and that "tap changers will be provided on the transformer if the key performance parameter threshold power is exceeded as a means of inexpensive contingency planning."

To cool its supercomputers, LLNL has slowly been moving away from air: "The building started off with thirty air handlers, each capable of 88,000 cubic feet per minute (CFM) - we've removed four and we're removing four more. So we're needing less and less air, and we're utilizing that space and putting in power and water cooling infrastructure. We started around almost 2.7 million CFM. Now we're under 2 million CFM."
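DePrater's airflow numbers can be checked against the nameplate rating of the air handlers. This sketch assumes every handler is rated at the same 88,000 CFM and runs at that rating, which the quote does not state; `total_airflow_cfm` is a hypothetical helper.

```python
CFM_PER_HANDLER = 88_000  # rated airflow per air handler, as quoted


def total_airflow_cfm(handlers: int) -> int:
    """Total rated airflow for a number of identical air handlers."""
    return handlers * CFM_PER_HANDLER


ORIGINAL_HANDLERS = 30
REMOVED_SO_FAR = 4
PLANNED_REMOVALS = 4

print(f"{total_airflow_cfm(ORIGINAL_HANDLERS):,}")  # 2,640,000
print(f"{total_airflow_cfm(ORIGINAL_HANDLERS - REMOVED_SO_FAR):,}")  # 2,288,000
print(f"{total_airflow_cfm(ORIGINAL_HANDLERS - REMOVED_SO_FAR - PLANNED_REMOVALS):,}")  # 1,936,000
```

Thirty handlers give 2.64 million CFM, consistent with "almost 2.7 million". Removing the first four still leaves about 2.29 million CFM of rated capacity, so the "under 2 million" figure presumably counts the second round of removals or reflects handlers running below their rating; the quote does not say which.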

But, DePrater noted, "we are looking at not going completely away from air cooling, because it's still needed for 10 to 20 percent [of the cooling]."

For the water cooling, DePrater said LLNL plans to rely on W3 water, an ASHRAE liquid-cooling class for facility supply water at 2-32°C (35.6-89.6°F). "The existing building’s cooling capacity will be increased from 10,000 to 18,000 tons by installing a new low-conductivity water cooling tower to provide direct process loops for energy-efficient computer cooling," the campus capability plan states.

That water, however, won't go straight to the machines: "We have some systems that use facility water directly to the machines," DePrater said. "But I think what I have seen, the vendors... want to be in control of what water touches their stuff. So we're using more and more CDUs [cooling distribution units] instead of ... straight facility water."

Then there's the weight. DePrater's presentation included a slide listing a floor-loading requirement of 315 pounds per square foot for the exascale systems. A 2018 CORAL-2 procurement document reveals that B453 has a 48-inch raised floor with "a 250 lbs/sq ft loading with the ability to accommodate up to 500 lbs/sq ft through additional floor bracing."

With the facility set across two floors, "this design affords the capability of siting a machine with higher weight capacities on the slab on grade of the first level of the computer room."
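The floor-loading question reduces to checking one number against two ratings. The sketch below is a deliberately simplified, hypothetical check (`floor_option` is not an LLNL tool) that treats each figure as a uniformly distributed load; a real structural assessment would also consider point loads from rack feet and loads while racks are moved into place.

```python
# Floor-loading figures quoted in the article (pounds per square foot).
BASE_RATING_PSF = 250    # raised floor as built, per the CORAL-2 document
BRACED_RATING_PSF = 500  # with additional floor bracing
REQUIRED_PSF = 315       # exascale requirement from DePrater's slide


def floor_option(required_psf: float) -> str:
    """Compare a uniform load against the raised-floor ratings (simplified check)."""
    if required_psf <= BASE_RATING_PSF:
        return "raised floor as built"
    if required_psf <= BRACED_RATING_PSF:
        return "raised floor with additional bracing"
    return "exceeds both raised-floor ratings"


print(floor_option(REQUIRED_PSF))  # raised floor with additional bracing
print(f"{REQUIRED_PSF / BASE_RATING_PSF:.0%} of the unbraced rating")  # 126% of the unbraced rating
```

On these figures, the 315 lbs/sq ft requirement sits above the as-built rating but within the braced one, with the first-floor slab on grade as the other option.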

DePrater said that the current B453 facility has a power usage effectiveness (PUE, the ratio of the total energy used by a data center to the energy delivered to its computing equipment; the closer to 1.0, the better) of between 1.1 and 1.2.

For the exascale deployment, "we're going after 1.02," DePrater said. "That's what we're aiming for."
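To show what the move from 1.1-1.2 to 1.02 means in absolute terms, the sketch below computes PUE and the non-IT overhead it implies. It uses power in MW as a stand-in for energy, which only holds for a steady load, and the `IT_LOAD_MW` value is a hypothetical assumption that treats the full 85MW as IT load; the article does not say how that figure splits between IT and facility systems.

```python
def pue(total_facility_mw: float, it_mw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    return total_facility_mw / it_mw


def overhead_mw(it_mw: float, pue_value: float) -> float:
    """Non-IT power (cooling, power conversion, lighting) implied by a PUE."""
    return it_mw * (pue_value - 1.0)


IT_LOAD_MW = 85.0  # hypothetical: treats the full 85MW as IT load

for target in (1.2, 1.1, 1.02):
    print(f"PUE {target}: {overhead_mw(IT_LOAD_MW, target):.1f} MW overhead")
```

Under that assumption, the overhead falls from 8.5-17MW at today's PUE to about 1.7MW at 1.02.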