A new data center rack structure, Open19, has been launched at the DCD Webscale event by LinkedIn’s head of infrastructure architecture Yuval Bachar.

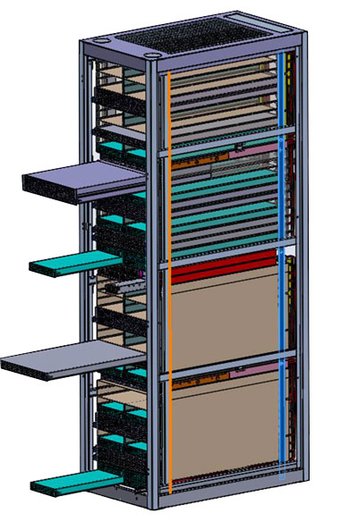

The Open19 rack fits a standard 19 inch rack, so it is compatible with existing equipment and spaces, but it introduces a much more efficient structure within that frame. It uses around half the equipment for common elements such as power distribution, eliminates waste and wiring, and is much quicker and easier to install. It is an alternative to the Open Compute Project’s 21 inch Open Rack.

Cage fight

“We looked at the 21 inch Open Rack, and we said no, this needs to fit any 19 inch rack,” Bachar told the DCD Webscale audience. “We wanted to create a cost reduction of 50 percent in common elements like the PDU, power and the rack itself. We actually achieved 65 percent.”

Bachar was at Facebook during the genesis of Open Rack, so he understands that system well. He was also involved in Facebook’s Open Compute switches, Sixpack and Wedge, and has launched the Pigeon top-of-rack switch since arriving at LinkedIn.

The rack is divided into cages, which hold standard modules, so the rack can be built and loaded in about one hour, instead of some six hours for a conventional rack, said Bachar. Power is distributed at 12V via clip-on connectors, so there are no power wires, and data wires are also eliminated.

The individual modules are attached with two or four screws.

While Open Rack is a great achievement, it’s only really suitable for customers buying vast numbers of servers, who can afford to set their own standards, Bachar contends. Open19 is aimed at smaller operators and enterprises, who need to combine the system with conventional racks, and buy servers from conventional players, not necessarily from white box makers, he explained.

More details are given in a LinkedIn blog post published today.

Bachar hopes an ecosystem will emerge around Open19: “We are not big enough to do this by ourselves. We want support from server, storage and component vendors,” he said, asserting that Open19 gives these vendors a better opportunity than other options.

Servers go into the rack’s cages in “brick” modules, which can be single height (1U) or double height (2U). They can also be half the width of the rack, or the whole width. LinkedIn contacted all the world’s server makers, he said, and ensured that their motherboards could simply be dropped into the bricks for installation. “We’ve created a blade system which does not rely on any single vendor’s chassis,” he said. “We’re not defining the servers, only the form factor.”

Because they don’t need their own power supplies, the servers can cost some 45 percent less than normal. The Open19 rack can also have a battery backup unit installed, instead of relying on a UPS for the whole data center.

The power shelf distributes power at 12V, he explained: “48V is not stable enough, and while 12V requires higher current, the losses are marginal and negligible.”

Network switches are installed in the same way. The rack suits LinkedIn’s own Open19 switch, but it can also take standard switches from other vendors. Each server gets a 50Gbps data path and 250W of power.
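To put Bachar’s 12V argument in numbers, here is a back-of-envelope sketch using the 250W per-server budget. It applies I = P/V and the conduction loss I²R. The path resistance is a hypothetical 0.5 milliohm chosen purely for illustration; it is not an Open19 figure, and real losses depend on the actual busbar and connector design.

```python
def current_amps(power_w: float, voltage_v: float) -> float:
    """Current drawn by a load at a given supply voltage: I = P / V."""
    return power_w / voltage_v


def resistive_loss_w(current_a: float, resistance_ohm: float) -> float:
    """Conduction loss in the distribution path: P_loss = I^2 * R."""
    return current_a ** 2 * resistance_ohm


SERVER_POWER_W = 250.0        # per-server power budget quoted for Open19
PATH_RESISTANCE_OHM = 0.0005  # HYPOTHETICAL 0.5 milliohm path, for illustration only

if __name__ == "__main__":
    for volts in (12.0, 48.0):
        amps = current_amps(SERVER_POWER_W, volts)
        loss = resistive_loss_w(amps, PATH_RESISTANCE_OHM)
        share = 100 * loss / SERVER_POWER_W
        print(f"{volts:.0f} V: {amps:.2f} A, loss {loss:.3f} W ({share:.3f}% of load)")
```

At 12V a server draws four times the current it would at 48V, so for the same conductor it sees 16 times the I²R loss. Over the very short distances inside a rack, though, that loss can remain a small fraction of the load, which is the trade-off Bachar describes.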

The rack may have its own management system, he said: “We’re exploring with Vapor IO to install management in the rack itself.”

Last month, it was announced that Microsoft is buying LinkedIn for $26.2 billion. Bachar was not ready to discuss any of the implications of this, but Microsoft has shown a lot of interest in the Open Compute specifications, and has contributed its own idea for distributed batteries to the group. It’s quite possible that Open19 could fit well with parts of Microsoft’s own infrastructure.