Google has become the first cloud operator to offer access to the Nvidia T4 GPU, two months after it was announced.

The T4, a cheaper alternative to the high performance computing (HPC)-focused V100, is also available in 57 separate server designs from computer manufacturers, Nvidia announced at SC18.

Google Processing Unit

“We have never before seen such rapid adoption of a data center processor,” Ian Buck, VP and GM of accelerated computing at Nvidia, said.

“Just 60 days after the T4’s launch, it’s now available in the cloud and is supported by a worldwide network of server makers. The T4 gives today’s public and private clouds the performance and efficiency needed for compute-intensive workloads at scale.”

"The existing CPU infrastructure is simply not keeping up with the needs of AI," Buck added in a briefing attended by DCD.

The 70W T4 GPU can fit into a standard server or any Open Compute Project hyperscale server design. Server designs range from a single T4 GPU to as many as 20 GPUs in a single node, with servers available from companies including Dell EMC, IBM, Lenovo and Supermicro.

The T4 offers 8.1 Tflops at FP32 and 65 Tflops at FP16, as well as 130 Tops at INT8 and 260 Tops at INT4.
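To put those figures in perspective, the short Python sketch below works out how each precision compares with FP32 and how much peak throughput the card delivers per watt. It uses only the numbers quoted in this article (the four peak rates and the 70W power figure); the function and variable names are illustrative, and the results are ratios of peak ratings, not measured application speedups.

```python
# Peak throughput figures quoted in the article, in tera-operations per second
# (Tflops for the floating-point formats, Tops for the integer formats).
T4_PEAK = {"FP32": 8.1, "FP16": 65.0, "INT8": 130.0, "INT4": 260.0}
T4_POWER_WATTS = 70  # power figure quoted in the article


def ratio_to_fp32(peaks):
    """Return each precision's peak rate as a multiple of the FP32 rate."""
    base = peaks["FP32"]
    return {name: rate / base for name, rate in peaks.items()}


def peak_per_watt(peaks, watts):
    """Return peak tera-operations per second per watt for each precision."""
    return {name: rate / watts for name, rate in peaks.items()}


ratios = ratio_to_fp32(T4_PEAK)
efficiency = peak_per_watt(T4_PEAK, T4_POWER_WATTS)

for name in T4_PEAK:
    print(f"{name}: {ratios[name]:.1f}x FP32 peak, "
          f"{efficiency[name]:.2f} tera-ops/s per watt")
```

On these ratings, FP16 comes out at roughly eight times the FP32 peak, and each halving of precision below that doubles the peak rate again. Real workloads will typically see smaller gains, since peak figures ignore memory bandwidth and other bottlenecks.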

"Many of you have also told us that you want a GPU that supports mixed-precision computation (both FP32 and FP16) for ML training with great price/performance," Google Cloud product manager Chris Kleban said. "The T4’s 65 TFLOPS of hybrid FP32/FP16 ML training performance and 16GB of GPU memory addresses this need."

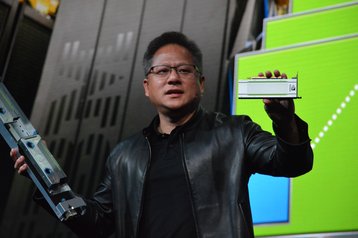

Google Cloud is offering the T4 in early access, starting today. "I am just blown away by how fast Google Cloud works, in 30 days from production it was deployed at scale on the cloud," Nvidia CEO Jensen Huang said at SC18.