Fans of science fiction will know that cryogenic freezing is a commonly used mode of transport for astronauts wishing to traverse the galaxy. From classic movies like 2001: A Space Odyssey and Alien to more modern tales such as Interstellar, sci-fi writers love nothing better than plunging their protagonists into deep freeze to allow them to travel millions of miles unscathed.

In the real world, you can’t freeze and unfreeze live humans (yet), but server chips are a different matter, and for data center operators, cryogenics could be moving out of the realm of science fiction and into that of science fact.

Research is emerging that suggests running complementary metal-oxide-semiconductor, or CMOS, chips at very low temperatures (the cryogenic temperature range is considered to be anything below 120 Kelvin, or -153°C) using liquid nitrogen cooling can lead to increased performance and power efficiency.
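
For readers who want to check the temperature figures quoted in this article, the conversion is simple: subtract 273.15 from the Kelvin value to get degrees Celsius. The short Python sketch below is illustrative only. The function names and the `CRYOGENIC_LIMIT_K` constant are hypothetical helpers rather than anything from the research, and the 120 Kelvin threshold is simply the definition given above.

```python
# Illustrative helpers; names are hypothetical and not taken from the research.
CRYOGENIC_LIMIT_K = 120.0  # threshold quoted in the article


def kelvin_to_celsius(kelvin: float) -> float:
    """Convert a temperature from Kelvin to degrees Celsius."""
    return kelvin - 273.15


def is_cryogenic(kelvin: float) -> bool:
    """True if the temperature falls below the article's cryogenic threshold."""
    return kelvin < CRYOGENIC_LIMIT_K


if __name__ == "__main__":
    # Room temperature, the threshold, liquid nitrogen and liquid helium ranges.
    for k in (300.0, 120.0, 77.0, 4.0):
        print(f"{k:g} K = {kelvin_to_celsius(k):.2f} °C, cryogenic: {is_cryogenic(k)}")
```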

Bringing the technology out of the laboratory and into commercial environments will be a challenge, but as vendors seek new efficient ways to cool their increasingly powerful components, this novel approach could reap rewards.

Colder is quicker

CMOS technology plays a vital role in integrated circuits (ICs), such as processors, memory chips, and microcontrollers, as part of switching devices that help regulate the flow of current through the IC, thus controlling the state of its transistors.

“Chips are made up of transistors which are either switched on or off,” explains Rakshith Saligram, a graduate research assistant at the Georgia Institute of Technology’s School of Electrical and Computer Engineering. “Switching devices are used to apply the minimum voltage required by these transistors to change from on to off. The amount of voltage you need to apply as part of that switching action determines how efficient the device is.”

Saligram is an electrical engineer who formerly worked for Intel (“I would describe myself as a circuit designer,” he says) and is currently conducting research “exploring different devices and looking at ways to make circuits better.” While evaluating different technologies, he came across cryogenic CMOS.

Most commercially available silicon chips are graded to run from a minimum temperature of 233 Kelvin (-40°C), right up to a maximum 373 Kelvin (100°C). Transistors will switch at what Saligram describes as a “reasonable speed” while operating at room temperature, but performance picks up considerably as temperatures get lower. In a paper published in March 2024, Saligram and his two co-authors, Georgia Tech colleagues Arijit Raychowdhury and Suman Datta, took a 14-nanometer FinFET CMOS device, and optimized and tested it using a cryogenic probe station, focusing on how the transistors performed at temperatures ranging from 300 Kelvin (26.85°C) to 4 Kelvin (-269°C).

“The minimum voltage difference you need to apply in order to take a transistor from on to off at room temperature is around 60 to 70 millivolts (0.06V-0.07V) in the bulk of the devices,” Saligram says. “But when you go to cryogenic temperature, this voltage difference can be as low as 15-20 millivolts. That’s a 4x reduction in the voltage you need to apply, which is a big difference.”
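
The figures Saligram quotes are close to what textbook device physics predicts, assuming he is describing what engineers call the subthreshold swing: the gate voltage needed to change a transistor's current by a factor of ten. That reading is an assumption, as the term does not appear in the interview. For an ideal transistor the swing cannot go below ln(10) × kT/q, where k is the Boltzmann constant, T the temperature in Kelvin, and q the charge of an electron, so it shrinks in proportion to temperature. The Python sketch below works through that arithmetic. The function name is hypothetical, and the result is an idealized limit, not a model of the 14-nanometer devices tested at Georgia Tech.

```python
import math

# Physical constants (exact values in the SI system).
BOLTZMANN_J_PER_K = 1.380649e-23
ELEMENTARY_CHARGE_C = 1.602176634e-19


def thermal_swing_limit_mv(temperature_k: float) -> float:
    """Ideal lower bound on subthreshold swing, in millivolts per decade.

    Assumes a perfect transistor; real devices sit above this line.
    """
    if temperature_k <= 0:
        raise ValueError("temperature must be a positive value in Kelvin")
    thermal_voltage = BOLTZMANN_J_PER_K * temperature_k / ELEMENTARY_CHARGE_C
    return math.log(10) * thermal_voltage * 1000.0


if __name__ == "__main__":
    room = thermal_swing_limit_mv(300.0)
    nitrogen = thermal_swing_limit_mv(77.0)
    print(f"300 K: {room:.1f} mV/decade")
    print(f" 77 K: {nitrogen:.1f} mV/decade")
    print(f"ratio: {room / nitrogen:.1f}x")
```

Under these assumptions the limit comes out at roughly 60 millivolts at 300 Kelvin and roughly 15 millivolts at 77 Kelvin, the boiling point of liquid nitrogen. That is a ratio of just under four, in line with the reduction Saligram describes. Real transistors tend to stop tracking this ideal line at the very lowest temperatures, so the formula should not be read as a prediction for operation at 4 Kelvin.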

While these are small values in absolute terms, the number of transistors on a single CPU can run into the billions, so the power savings soon add up, something which is likely to be welcomed by operators at a time when many data centers are becoming constrained by the amount of available energy from the grid.

Saligram says the research also shows that power leakage drops at lower temperatures. “When you're running a workload in a data center, not all devices need to be on at the same time,” he explains. “There’s always switching activity going on, and when a component is not performing any action, it is generally switched off. But during that period a small amount of electricity is still being used.

“That’s wasted power and we want to minimize that waste. And if we take these devices down to cryogenic temperatures we see a 4x reduction in those kinds of currents.”

Lessons from quantum

While Saligram and his colleagues have been looking at how standard components can be optimized to perform at low temperatures, over in the UK work is ongoing on semiconductor IP specifically designed to operate in cryogenic conditions.

The snappily titled “Development of CryoCMOS to Enable the Next Generation of Scalable Quantum Computers” is backed by UK government innovation agency Innovate UK and led by low-power chip specialist sureCore, with support of a host of other organizations including chip design specialists AgileAnalog, SemiWise, and Synopsys, as well as Oxford Instruments and quantum computing companies Universal Quantum and SEEQC.

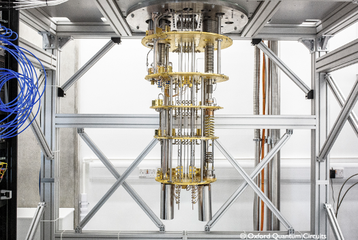

Quantum computers are a field where cryogenic temperatures are, by necessity, already in widespread use, with many of the types of early quantum machines requiring ultra cooling to operate effectively.

SureCore and its partners are aiming to drop this temperature further by incorporating more parts of the quantum computer inside the cryostat, the coldest part of the machine. “The big problem for quantum computers at the moment is that most of the control electronics get housed outside the cryostat,” says sureCore CEO Paul Wells. “You have a considerable amount of cabling coming out of the cryostat, and that not only introduces latency, but you’ve also potentially got thermal paths back into the cryostat. This has the effect of limiting the number of qubits.”

Qubits are the basic units of information in a quantum computer, and the number a machine contains is a common measure of its scale. The most advanced computer currently in operation has around 1,000. It is thought that quantum machines with hundreds of thousands, or even millions, of qubits will be required if the technology is to fulfill its potential and outperform classical computers, so more efficient hardware will be required.

To help solve this issue, the consortium has come up with new timing and power models for chips designed to operate at cryogenic temperatures. “People who are developing quantum control chips can just pick up the new models as part of their work and drop them into existing processes - the rest of the chip design flow is unchanged,” says Wells, who hopes the designs can form the basis of new quantum control and measurement ASICs.

In May, the project taped out its first chip, which will be used to validate this IP. “Assuming that works ok, our end goal is to offer a portfolio of cryogenic chips that can operate down to 4 Kelvin,” Wells says.

Cryogenics in the data center

Despite the progress made on the project, sureCore’s Wells is skeptical that widespread use of cryogenic cooling technology will be seen in the data center outside of specialized quantum environments.

This is because, he says, the processes needed to develop dedicated cryogenic hardware will be costly and complex to set up for chip manufacturers. “They’ve got thousands of very clever engineers, so I’m sure if they wanted to do it, they could,” he says. “But it will come down to economics, and if they were to do this it would not be cheap or straightforward.”

Victor Moroz also has reservations about the technology’s commercial viability, but for different reasons. Moroz is a fellow at semiconductor equipment maker Synopsys, and has published several papers on the potential of cryogenic cooling of CMOS chips, the most recent of which he presented at last year’s VLSI Symposium. This event, run by the Institute of Electrical and Electronics Engineers, is one of the most prestigious and long-running conferences on electronics and circuit design, and Moroz says this reflects that interest in the technology is running high.

The findings of Moroz’s research are broadly in line with those of Saligram and his team at Georgia Tech, but he says that while there is plenty of enthusiasm for the potential of cryogenic CMOS within the academic community, restrictions being placed on the technology by the US government are likely to hold its adoption back.

“I would say within the research community there is a lot of excitement [about cryogenic CMOS],” he says. “But once you get into industry there’s a huge pushback because once you associate your technology with cryogenic you can get put on the US export control list. All the foundries are ‘allergic’ to this technology because of that.”

Indeed, cryogenic storage is one of many technologies that have been put in the spotlight by the US trade war with China, which has seen exports of a host of semiconductor-related products to Beijing either banned or heavily restricted.

Cryogenic equipment is covered by restrictions on quantum technology, and though these are not as prescriptive as some of the controls on artificial intelligence chips, they still present a barrier to development, Moroz says.

“From a power perspective, cryogenic cooling totally makes sense, but this export control thing is a big problem,” he adds. “There is also the issue of cost, because in my research I did not do any cost analysis. But if the cost is ok then it simply becomes a matter of infrastructure and creating enough hardware.”

Saligram strikes a more optimistic note when it comes to adoption, pointing to an announcement from IBM last December that it had developed a CMOS transistor optimized to work at extremely low temperatures. Big Blue used nanosheets, a new generation technology that is set to replace FinFET and enable greater miniaturization of transistors (“Nanosheet device architecture enables us to fit 50 billion transistors in a space roughly the size of a fingernail,” Ruqiang Bao, a researcher at IBM, said at the time). The device performed twice as efficiently at 77 Kelvin (-196°C) as it did at room temperature, according to the IBM team.

US innovation agency DARPA, which has previously funded programs that led to the development of many of the foundational technologies used by businesses and consumers today, has also taken an interest, starting a research program called Low Temperature Logic Technology, through which some of Saligram’s research was conducted.

Data center operators themselves are also looking at how cryogenic temperatures can be utilized at their facilities, Saligram says. “At Georgia Tech we’re working with one of the leading data center companies, which is interested in pursuing this technology as part of their applications,” he says, declining to name the business involved. “They are really interested, and there have been multiple other instances where companies have experimented with low temperatures - Microsoft famously dunked an entire server onto the ocean bed to see how that would play out and saw some performance improvements.

“This DARPA project includes several industry players, including IBM, so there is definitely interest there and a desire to take this technology to the next level.

“We need to get more traction from the guys who build the chips, like Intel and AMD, who need to take this up so that they can take advantage of all the benefits it brings on a circuit and system level. The final stage is to work with mechanical engineers on the deployability of this technology, to ensure data centers are able to handle this. There is some work to do there.”

Indeed, installing a cryogenic cooling system is a costly business, particularly at a time when many data center companies are spending considerable sums switching from traditional air cooling systems to new and more efficient liquid cooling set-ups.

“Bringing the temperature down [to cryogenic levels] does involve a lot of cooling costs,” Saligram says. “But our argument is that data centers currently invest a lot in power and cooling, but don’t get anything back in terms of improved performance - the money just goes on keeping things running.

“If operators invest a little bit more [to move to lower temperatures] they may be able to get some performance back.”

He adds that it is not even necessary to aim for some of the more extreme temperatures investigated as part of the research project. “You don’t need to go all the way to that temperature, even going to 173 Kelvin (-100°C) enables you to get better performance, depending on the type of hardware you’re using and the workloads it is running,” Saligram says.

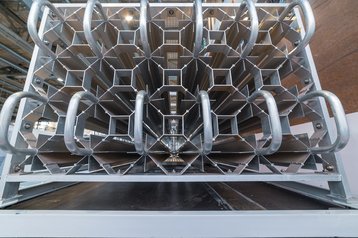

Elsewhere, there is work to do, he says, on handling the large amounts of liquid nitrogen used as coolant in cryogenic systems. “Liquid nitrogen production itself is energy-intensive,” he says. “And we need to look at ways we can effectively recycle the liquid nitrogen if it is going to be used, and how we can build infrastructure that is leak-proof and achieves the connectivity at node and rack level, as well as switch level.

“These are big questions that need to be answered if this technology is going to be deployed, but there are a lot of opportunities to make things happen, and as an engineer, it’s a very interesting area to be involved in.”

This feature first appeared in the DCD Cooling Supplement.