YouTuber bitluni likes building strange things. Head over to his channel and you can watch footage of him constructing a DIY sonar scanner and a multi-colored LED wall made of ping pong balls.

Recently, his 250,000 followers have also seen him create his own supercomputer. To do this, he stitched together 16 “superclusters,” each containing 16 RISC-V-based microcontrollers from Chinese vendor WCH, into one “megacluster.”

The resulting 256-core computer has a combined clock rate of 14.7GHz across its single-core microcontrollers (“not amazing but not too shabby either,” according to bitluni). On the face of it, this is not particularly super: Frontier, the world’s fastest supercomputer, has more than eight million processor cores. But the machine bitluni put together in his workshop demonstrates what might be possible on a grander scale using components based on RISC-V, the open-source chip architecture.

The open nature of RISC-V means there are no intellectual property (IP) licensing fees to be paid by vendors, so components can come cheap; the WCH microcontrollers cost less than ten cents each, a quarter of the price of comparable devices based on other architectures, meaning bitluni was able to procure the parts needed for his “megacluster” for less than $30.
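
The headline figures are easy to sanity-check. The short Python sketch below uses only the numbers quoted above, treating “less than ten cents” as an upper bound per chip and assuming one core per microcontroller: 16 × 16 chips gives 256 cores, 14.7GHz spread across them averages out at roughly 57MHz per core, and the microcontrollers alone come to at most about $25.60, within the sub-$30 budget.

```python
# Back-of-the-envelope check of the megacluster figures quoted in the article.
# Assumptions: one core per (single-core) microcontroller, and the per-chip
# price is an upper bound ("less than ten cents each").

SUPERCLUSTERS = 16
CHIPS_PER_SUPERCLUSTER = 16
COMBINED_CLOCK_HZ = 14.7e9      # "14.7GHz" combined clock rate
MAX_PRICE_PER_CHIP_USD = 0.10   # "less than ten cents each"

cores = SUPERCLUSTERS * CHIPS_PER_SUPERCLUSTER         # one core per chip
avg_clock_mhz = COMBINED_CLOCK_HZ / cores / 1e6        # average clock per core
max_chip_cost_usd = cores * MAX_PRICE_PER_CHIP_USD     # ceiling on chip spend

print(f"cores: {cores}")                                   # cores: 256
print(f"average clock per core: {avg_clock_mhz:.1f} MHz")  # 57.4 MHz
print(f"microcontrollers cost under ${max_chip_cost_usd:.2f}")
```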

But beyond providing a low-cost chip option for bedroom projects, can RISC-V have an impact in the data center? Semiconductors based on Intel’s x86 architecture still rule the roost, alongside a growing number of Arm-based devices being developed in-house by the cloud hyperscalers. Some vendors think RISC-V can make its mark, and are launching products they hope will become integral to the servers of the future, though they have their work cut out.

RISC management

RISC-V is a product of research carried out in the Parallel Computing Lab at UC Berkeley in California. First released in 2010, it is a modular instruction set architecture (ISA), a group of rules that govern how a piece of hardware interacts with software. As an open standard, RISC-V allows developers to build whatever they desire on top of the core ISA.

Non-profit organization RISC-V International manages the ISA, and has formed a community of just under 4,000 members including Google, Intel, and Nvidia. In 2022, RISC-V International claimed there were 10 billion chips based on its ISA in circulation around the world, and predicted that number would climb to 25 billion by 2027.

Many of these chips are basic microcontrollers featured in low-cost Edge devices and embedded systems for products such as wireless headphones, rather than advanced CPUs. But Mark Himelstein, CTO of RISC-V International, says the organization is thinking bigger. “Everything we’re doing in RISC-V is driven by the data center,” he says.

Last year RISC-V International introduced profiles: packages containing a base ISA coupled with extensions that work well together when building a specific type of chip. “We can give these to the compiler folks and operating system folks to say ‘target this,’” Himelstein says. “The first profile that came out is called RVA, which is for general-purpose computing applications like HPC and big, honking AI/ML workloads. It’s not really targeted at the earbud folks, though they can use it.”

Himelstein says this is to help accelerate RISC-V chip development and reflects a growing interest in server chips based on the ISA. “In the post-ChatGPT world, people are getting much more aggressive with how they integrate AI and machine learning into every application and solution,” he says. “You need good hardware to be able to go off and do that, and we’re seeing an increasing number of RISC-V server chips that can power the next generation of ‘pizza boxes.’”
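
The profile idea Himelstein describes can be sketched as a simple data structure: a base ISA plus a required set of extensions, against which a given chip either conforms or doesn’t. The profile below is hypothetical and deliberately tiny; the real RVA profiles list many more extensions. The “G” shorthand (M, A, F, D, Zicsr and Zifencei on top of the base integer ISA) and the V vector extension are real RISC-V names, while the profile and chip names are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    """A base ISA plus the extensions a conforming chip must implement."""
    name: str
    base: str
    required: frozenset[str]


@dataclass(frozen=True)
class Chip:
    """What a particular (hypothetical) processor actually implements."""
    name: str
    base: str
    extensions: frozenset[str]


def missing_extensions(chip: Chip, profile: Profile) -> set[str]:
    """Return the profile's required extensions that the chip lacks."""
    if chip.base != profile.base:
        raise ValueError(
            f"{chip.name} implements {chip.base}, but {profile.name} targets {profile.base}"
        )
    return set(profile.required - chip.extensions)


def conforms(chip: Chip, profile: Profile) -> bool:
    """A chip conforms if it shares the base ISA and lacks no required extension."""
    return chip.base == profile.base and not (profile.required - chip.extensions)


# "G" is standard RISC-V shorthand for IMAFD plus Zicsr and Zifencei.
G = frozenset({"M", "A", "F", "D", "Zicsr", "Zifencei"})

# Hypothetical profile for illustration only; NOT the ratified RVA profile.
EXAMPLE_SERVER = Profile("EXAMPLE-SERVER", "RV64I", G | {"C", "V"})

rv64gc = Chip("rv64gc-core", "RV64I", G | {"C"})
rv64gcv = Chip("rv64gcv-core", "RV64I", G | {"C", "V"})

print(missing_extensions(rv64gc, EXAMPLE_SERVER))  # {'V'}
print(conforms(rv64gcv, EXAMPLE_SERVER))           # True
```

Compiler and operating system developers can then target the profile rather than checking each chip’s extension list individually, which is the point Himelstein makes about “target this.”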

Hitting the market

Ventana Micro Systems is one of the companies targeting the server market with RISC-V hardware. Last year the vendor announced a second version of its Veyron processor, featuring 192 cores built in a chiplet design and ready for production on TSMC’s four-nanometer process.

The company was founded in 2018 by engineers who had previously worked on developing 64-bit processors on the Arm architecture and saw an opportunity to do something similarly transformative with RISC-V high-performance semiconductors.

“If you look at RISC-V today, it’s basically a bunch of microcontrollers,” says Travis Lanier, Ventana’s head of product. “In fact, I would expect RISC-V to completely take over the microcontroller market.

“But people will look at that and say ‘It’s not a serious ISA for high performance.’ So we have to prove it is by moving RISC-V along, and I think all the features are now there to compete with the other ISAs, it’s about putting them into a CPU.”

In terms of performance, Ventana says the Veyron V2 can outpace AMD’s Genoa and Bergamo Epyc server processors, though given that the V2 won’t be deployed until 2025, the comparison is likely to be somewhat dated by the time it ships. “We’re finishing up the design and expect the first deployments in data centers to be early next year,” Lanier says. “Those will be limited deployments, and we’ll look to scale up from there.”

Ventana plans to take advantage of the flexibility of RISC-V’s open architecture to give it the edge over its rivals. The Veyron V2 supports domain-specific acceleration (DSA), enabling customers to add bespoke accelerators. DSA could help data center operators boost performance for specific workloads to meet customer requirements, Lanier says.
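
The article doesn’t describe how Ventana’s DSA hooks work, and the company may attach accelerators quite differently. One mechanism the RISC-V specification itself offers for bespoke acceleration is reserved opcode space: the major opcode custom-0 (0b0001011) is set aside for non-standard instructions. The Python sketch below packs a hypothetical accelerator instruction into that space using the standard R-type field layout, and encodes the standard `add a0, a1, a2` the same way as a known-good reference; the accelerator instruction and its semantics are invented for illustration.

```python
# Major opcode the RISC-V spec reserves for custom (non-standard) instructions.
CUSTOM_0 = 0b0001011
# Standard OP major opcode (add, sub, ...), used here as a sanity check.
OP = 0b0110011


def encode_r_type(opcode: int, rd: int, funct3: int, rs1: int, rs2: int, funct7: int) -> int:
    """Pack fields into a 32-bit RISC-V R-type instruction word."""
    fields = (
        ("opcode", opcode, 7),
        ("rd", rd, 5),
        ("funct3", funct3, 3),
        ("rs1", rs1, 5),
        ("rs2", rs2, 5),
        ("funct7", funct7, 7),
    )
    for name, value, width in fields:
        if not 0 <= value < (1 << width):
            raise ValueError(f"{name}={value} does not fit in {width} bits")
    # Layout: funct7[31:25] rs2[24:20] rs1[19:15] funct3[14:12] rd[11:7] opcode[6:0]
    return (funct7 << 25) | (rs2 << 20) | (rs1 << 15) | (funct3 << 12) | (rd << 7) | opcode


# Standard "add a0, a1, a2" (a0 = x10, a1 = x11, a2 = x12) as a reference.
add_word = encode_r_type(OP, rd=10, funct3=0b000, rs1=11, rs2=12, funct7=0)

# Hypothetical accelerator op (say, "accumulate a1 * a2 into a0") placed in
# custom-0 space; only the opcode differs from the add above.
accel_word = encode_r_type(CUSTOM_0, rd=10, funct3=0b000, rs1=11, rs2=12, funct7=0)

print(f"add a0, a1, a2 -> 0x{add_word:08x}")    # 0x00c58533
print(f"custom-0 accel -> 0x{accel_word:08x}")  # 0x00c5850b
```

Because standard toolchains and cores never emit instructions in the custom opcodes, a vendor can define them freely without clashing with the ratified ISA.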

Many vendors are thinking along similar lines. SiFive was an early proponent of RISC-V high-performance chips, and in 2022 was valued at $2.5bn following a $175m funding round. The company’s hardware can reportedly be found in Google data centers, where its chips help manage AI workloads, and it was awarded a $50m contract by NASA to provide CPUs for the US space agency’s High-Performance Spaceflight Computer. However, its progress seems to have stalled recently, and last November it was reported that it was laying off 20 percent of its workforce, including most of its high-performance processor design team.

Another startup, Tenstorrent, is building its own RISC-V CPU, as well as an AI accelerator which it hopes will be able to compete with Nvidia’s all-conquering AI GPUs. It is headed up by Jim Keller, a former lead architect at Intel who is credited with an instrumental role in the design of Apple’s A4 and A5 processors, as well as Tesla's custom self-driving car silicon.

Big tech names are also getting in on the act. Samsung is setting up an R&D lab in Silicon Valley dedicated to RISC-V chip development, and Alibaba, which has long held an interest in RISC-V, claimed in March that it was on track to launch a new advanced server chip based on the ISA at some point this year.

Alibaba already has a RISC-V server chip on the market in the form of the C910, which was made available on French data center company Scaleway’s cloud servers in March. Scaleway claimed that this was the first deployment of RISC-V servers in the cloud, and added that it expects the architecture to become dominant in the market as countries “seek to regain sovereignty over semiconductor production.”

Sébastien Luttringer, R&D director at Scaleway, said at the time: "The launch of RISC-V servers is a concrete and direct statement by Scaleway to boost an ecosystem where technological sovereignty is open to all, from the lowest level upwards. This bold, visionary initiative in an emerging market opens up new prospects for all players.”

Trust the process

As commercial RISC-V chips gather momentum, the architecture is also underpinning efforts to develop open-source silicon which could find its way into data centers.

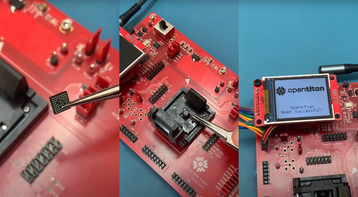

Earlier this year, the OpenTitan coalition claimed a milestone when it taped out what it said was the first open-source silicon project to reach commercial availability.

The chip, which features a RISC-V processor core, is a silicon root of trust: a dedicated security chip that protects a machine at the hardware level by detecting changes made to it by cyberattackers and disabling the affected hardware.
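
How a root of trust “detects changes” can be illustrated with a deliberately simplified Python sketch: measure (hash) a firmware image before it runs, compare the result with a known-good value provisioned in advance, and refuse to boot on a mismatch. This is not OpenTitan’s implementation; real roots of trust generally verify cryptographic signatures over each boot stage rather than a single stored hash, and the firmware bytes and provisioning step here are hypothetical.

```python
import hashlib
import hmac


def measure(image: bytes) -> bytes:
    """Return the SHA-256 digest ("measurement") of a firmware image."""
    return hashlib.sha256(image).digest()


def boot(image: bytes, expected_digest: bytes) -> str:
    """Allow boot only if the image matches the provisioned measurement.

    hmac.compare_digest is used so the comparison time does not reveal
    how many leading bytes matched.
    """
    if hmac.compare_digest(measure(image), expected_digest):
        return "booting"
    # A real root of trust might hold the platform in reset or disable the
    # affected component; this sketch just reports the refusal.
    return "halted: firmware does not match known-good measurement"


# Hypothetical firmware image and a digest assumed to be provisioned at manufacture.
good_firmware = b"example firmware v1"
known_good = measure(good_firmware)

# An attacker appends code to the image; its measurement no longer matches.
tampered_firmware = good_firmware + b" + implant"

print(boot(good_firmware, known_good))      # booting
print(boot(tampered_firmware, known_good))  # halted: firmware does not match ...
```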

OpenTitan was founded by Google in 2018 with the aim of developing an open-source root-of-trust chip, and the project has come to fruition with the help of lowRISC, a community interest company dedicated to the development of open silicon, and a host of other partners from industry and academia.

“Open source software is in every project now,” says Gavin Ferris, CEO of lowRISC. “It’s a clear success, but the challenge for us was how to do that with hardware, because there are huge advantages to sharing foundational IP.

“It makes economic sense to share and amortize the cost of foundation IP blocks so that you can focus on the stuff that is new and innovative and you can cut time to market.”

LowRISC spun out of Cambridge University’s computer lab in 2014, and was looking for a first use case to drive open-source hardware when it happened upon OpenTitan. Following years of work, a chip based on IP developed by the project was made earlier this year, and the coalition says it is the first open-source semiconductor to have been built with commercial-grade design verification and top-level testing.

The IP is now available to use, and Ferris says he is confident it will be adopted by chip makers as part of their devices. “It’s commoditizing something that isn’t the ‘secret sauce,’ and I think there’s a growing recognition that security is not an area where people want to differentiate,” he says. “There’s a lot of engineering in any SoC, and it doesn’t make sense to do it all yourself, the smart thing to do is leverage open-source and concentrate on the product features higher up the stack that you can sell.

“That doesn’t mean you don’t do proprietary things with [open-source], it just means there are a whole layer of tools you can just access and use. We’ve seen this movie before with software and we know how it ends, we just need to get to a good baseline to get the machine started, because once it starts, it doesn’t stop. That’s what we’ve got with OpenTitan.”

Challenges ahead

While enthusiasm for RISC-V within the open-source community is high, whether this is shared in the wider data center market is another matter.

Server chips have long been the domain of Intel and its x86 architecture, though recent years have seen AMD, which designs its own x86 CPUs, eat into that dominance. Mercury Research’s latest report on the CPU market, released in May, shows AMD now has a 23 percent share of the server market, up from 18 percent a year ago, thanks to the success of its Epyc range.

Intel and AMD also have to contend with the rise of Arm devices in the data center. Previous attempts to introduce the UK chip designer’s low-power architecture into data halls have not been a success, but improvements to its technology, combined with the desire of the cloud hyperscalers to develop their own hardware in-house, have driven a paradigm shift over the last three years.

Amazon offers Arm-based Graviton chips in its data centers, while Microsoft launched its Arm-based Cobalt 100 CPU, alongside its Maia AI accelerator, before Christmas. Apple also has its own Arm-based consumer silicon, having begun moving its Macs away from Intel in 2020, while Nvidia has an Arm CPU, Grace, which can be deployed in conjunction with its GPUs or used as a standalone product.

Elsewhere, vendors such as Ampere are building dedicated Arm-based data center chips which are also gaining traction with the hyperscalers.

All this leaves limited space for RISC-V CPUs in the data center, argues chip industry analyst Dylan Patel of SemiAnalysis. “Arm is doing really well at locking in the big guys by providing them a lot of value,” he says.

“They’re not just providing CPU cores, but also the network-on-chip that connects the CPU to the memory controllers and PCIe controllers. They’re even doing things like physically laying out the transistors, so they’re doing a lot to maintain and grow their market.”

Because of this, Patel says, “I don’t think RISC-V as the central CPU in the data center is going to happen any time soon.”

Patel also points out that the high-profile problems at SiFive, and the delays Ventana has experienced in getting a product to market (the original version of the Veyron chip never made it to production), have not helped adoption. “I think the RISC-V hype peaked in 2022,” he says. “Since then SiFive has laid off its high-performance CPU core team because they weren’t getting the traction. I think they’ll claim they’re still making one, but it’s at a much slower cadence.

“Ventana never got their first chip out and have had a few issues. They're not down and out but there’s definitely been a slowdown, and if you put those two together it shows the difficulty of finding a solid commercial case for RISC-V.”

He does, however, see a supporting role for RISC-V chips in the data center, through devices such as the OpenTitan root of trust chip.

“If you’re making a new accelerator, it will need to have standard instructions,” he says. “That stuff is being standardized by RISC-V, and then you can go out and attach everything else yourself. That’s something a handful of folks are doing with custom accelerators for data centers, whether they’re related to storage or AI workloads.”

Meta is the most interesting example of this, Patel says. Facebook’s parent company has designed its own AI accelerators by linking together RISC-V CPU cores built using IP from another vendor, Andes Technology, and intends to continue using the architecture in its future silicon efforts. Patel says this is “probably the biggest positive” for those hoping for greater RISC-V adoption in servers.

Speaking at the RISC-V summit in November, Prahlad Venkatapuram, senior director of engineering at Meta, said: “We’ve identified that RISC-V is the way to go for us moving forward for all the products we have in the roadmap. That includes not just next-generation video transcoders but also next-generation inference accelerators and training chips.”

If Meta’s engineers need any tips on what to do next, they could always put in a call to bitluni.

This feature appeared in Issue 53 of the DCD Magazine. Read it for free today.