As the data center industry enters a new phase, every operator has been forced to reckon with two unknowns: How big will the AI wave be, and what kind of densities will we face?

Some have jumped all in and are building liquid-cooled data centers, while others hope to ride out the current moment and wait until the future is clearer.

For Meta, which has embraced AI across its business, this inflection point has meant scrapping a number of in-development data center projects around the world, as DCD exclusively reported late last year.

It canceled projects that already had construction workers on site as it redesigned its facilities with GPUs and other accelerators in mind.

This feature appeared in Issue 50 of the DCD Magazine.

Now, with the company breaking ground on the first of its next-generation data centers in Temple, Texas, we spoke to the man behind the new design.

"We saw the writing on the wall for the potential scale of this technology about two years ago," Alan Duong, Meta's global director of data center engineering, said.

Meta previously bet on CPUs and its own in-house chips to handle both traditional workloads and AI ones. But as AI usage boomed, CPUs were unable to keep up, while Meta's chip effort initially fizzled.

It has now relaunched the project, with the 7nm Meta Training and Inference Accelerator (MTIA) expected to be deployed in the new data center alongside thousands of GPUs.

Those GPUs require more power, and therefore more cooling, and also need to be closely networked to ensure no excess latency when training giant models.

That required an entirely new data center.

The cooling

The new facilities will be partially liquid-cooled, with Meta deploying direct-to-chip cooling for the GPUs, whilst sticking to air cooling for its traditional servers. “During that two-year journey, we did consider doing AI-dedicated data centers, and we decided to move towards more of a blend, because we do know there's going to be this transition,” Duong said.

“95 percent of our infrastructure today supports more traditional x86, storage readers, front end services - that's not going to go away. Who knows where that will evolve, years and years from now? And so we know that we need that.”

The AI systems will also require access to data storage, “so while you could optimize data centers for high-density AI, you're still going to need to colocate these services with data, because that's how you do your training.”

Having the hybrid setup allows Meta to expand with the AI market, but not over-provision for something that is still unpredictable, Duong said.

"We can't predict what's going to happen and so that flexibility in our design allows us to do that. What if AI doesn't move into the densities that we all predicted?"

That flexibility comes with a trade-off, Duong admitted. "We're going to be spending a little more capital to provide this flexibility," he said.

The company has settled on 30°C (86°F) for the water it supplies to the hardware, and hopes to get the temperature more widely adopted through the Open Compute Project (OCP).

Exactly which medium it will use in the pipes to the chip is still being researched, Duong revealed. "We're still sorting through what the correct medium is for us to leverage. We have years - I wouldn't say multiple years - to develop that actual solution as we start to deploy liquid-to-chip. We're still developing the hardware associated, so we haven't specifically landed on what we're going to use yet."

The company has, however, settled on the fact that it won't use immersion cooling, at least for the foreseeable future. "We have investigated it," Duong said. "Is it something that is scalable and operationalized for our usage and our scale? Not at the moment.

"When you imagine the complications of immersion cooling for operations, it's a major challenge that we would have to overcome and solve for if we were ever to deploy anything like that at scale."

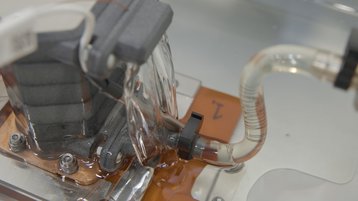

Another approach that will not go forward is a cooling system briefly shown in an image earlier this year of a fluid cascading down onto a cold plate like a waterfall (pictured). "These are experiments, right? I would say that that's generally not a solution that is going to be scalable for us right now.

"And so what you're going to see a year or two from now is more traditional direct-to-chip technology without any of the fancy waterfalls."

While the facility-level design is fully finalized, some of the rack-level technology is still being worked on, making exact density predictions hard. "Compared to my row densities today, I would say we will be anywhere from two times more dense at a minimum to between eight to nine times more dense at a maximum."

Meta "hasn't quite landed yet, but we're looking at a potential maximum row capacity of 400-500 kilowatts," Duong said.
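Taken together, the quoted multipliers and the quoted maximum row capacity imply a rough range for Meta's current row densities, a figure the company did not give. The short Python sketch below works through that arithmetic. It rests on two assumptions that are ours, not Duong's: that the quoted maximum capacity means 400 to 500 kilowatts per row, and that this maximum corresponds to the "eight to nine times" case. The function names are illustrative only.

```python
# Back-of-the-envelope arithmetic, not figures supplied by Meta.

MAX_ROW_KW = (400.0, 500.0)   # quoted potential maximum row capacity (assumed to mean 400-500 kW)
MAX_MULTIPLIER = (8.0, 9.0)   # quoted "eight to nine times more dense at a maximum"
MIN_MULTIPLIER = 2.0          # quoted "two times more dense at a minimum"


def implied_current_row_kw(max_row_kw, max_multiplier):
    """Return the (low, high) range of today's row density implied by the quotes."""
    low = min(max_row_kw) / max(max_multiplier)    # smallest capacity / largest multiplier
    high = max(max_row_kw) / min(max_multiplier)   # largest capacity / smallest multiplier
    return low, high


def minimum_new_row_kw(current_range, min_multiplier):
    """Scale the implied current range by the quoted minimum multiplier."""
    low, high = current_range
    return low * min_multiplier, high * min_multiplier


current = implied_current_row_kw(MAX_ROW_KW, MAX_MULTIPLIER)
new_minimum = minimum_new_row_kw(current, MIN_MULTIPLIER)

print(f"Implied current row density: {current[0]:.1f} to {current[1]:.1f} kW")
print(f"Implied minimum for new rows: {new_minimum[0]:.1f} to {new_minimum[1]:.1f} kW")
```

Under those assumptions, today's rows would sit somewhere around 44 to 63 kW, and the low end of the new design at roughly 89 to 125 kW. The result is only as good as the reading of the quotes behind it.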

"We're definitely more confident at the facility level," Duong added. "We've now gone to market with our design, and the sort of response we've gotten back has given us confidence that our projections are coming to fruition."

Switching things up

Alongside cooling changes, the company simplified its power distribution design.

"The more equipment you have, the more complicated it is," Duong said. "You have extra layers of failure, you have more equipment to maintain."

The company reviewed which equipment it could remove, without requiring new, more complex equipment.

"And so we have a lot of equipment in our current distribution channel, whether it's switch gear, switchboard, multiple breakers, multiple transition schemes from A to B, etc., and I said 'can I just get rid of all that and just go directly from the source of where power is converted directly to the row?'"

This new design also allowed Meta "to scale from a very low rack density to a much larger rack density without stranding or overloading the busway, breaker, or the switchgear," he said.

Going directly from the transformer to the rack itself allowed Meta "to not only eliminate equipment but to build a little bit faster and cheaper, as well as reduce complexity and controls, but it also allows us to increase our capacity."
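Duong's point that more equipment means "extra layers of failure" can be illustrated with simple series-availability arithmetic: when every device in a chain must work for power to reach the row, the chain's availability is the product of the individual availabilities. The Python sketch below compares a longer chain with a shorter one. Every number and component list in it is hypothetical and chosen only for illustration; none comes from Meta or from vendor data. The model also ignores redundancy such as A/B feeds.

```python
from math import prod

HOURS_PER_YEAR = 8760

# Hypothetical per-component availabilities (assumed values, not Meta's).
LEGACY_CHAIN = {
    "transformer": 0.9999,
    "switchgear": 0.9999,
    "switchboard": 0.9999,
    "breaker": 0.9999,
    "busway": 0.9999,
}

# Hypothetical shortened chain: the power source feeding the row more directly.
SIMPLIFIED_CHAIN = {
    "transformer": 0.9999,
    "row_feed": 0.9999,
}


def series_availability(chain):
    """All components must work, so multiply their availabilities."""
    return prod(chain.values())


def expected_downtime_hours(chain):
    """Expected unavailable hours per year for the whole chain."""
    return (1.0 - series_availability(chain)) * HOURS_PER_YEAR


for name, chain in (("legacy", LEGACY_CHAIN), ("simplified", SIMPLIFIED_CHAIN)):
    print(
        f"{name}: availability {series_availability(chain):.6f}, "
        f"about {expected_downtime_hours(chain):.2f} hours of downtime per year"
    )
```

With identical components, the shorter chain always comes out ahead in this model, which is the intuition behind removing layers. Real designs add redundancy and differ per component, so the sketch shows the direction of the effect rather than its size.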

Faster, cheaper

Perhaps the most startling claim Meta has made with its new design is that it will be 31 percent cheaper and take half the time to build (from groundbreaking to being live) than the previous design.

"The current projections that we're seeing from our partners is that we can build it within the times that we have estimated," Duong said.

"We might even show up a little bit better than what we initially anticipated."

Of course, the company will first have to build the data centers to truly know if its projections are correct, but it hopes that the speed will make up for the canceled data center projects.

"There is no catching up from that perspective," Duong said. "You may see us landing a capacity around the same time as we planned originally."

That was critical in being able to make the drastic pivot, he said. "We bought ourselves those few extra months, that was part of the consideration."

How long will they last?

The first Meta (then Facebook) data centers launched 14 years ago. "And they're not going anywhere, it's not like we're going to scrap them," Duong said.

"We're going to have to figure out a way to continue to leverage these buildings until the end of their lifetime."

With the new facilities, he hopes to surpass that timeframe, without requiring major modernization or upgrades for at least the next 15 years.

"But these are 20-30 plus year facilities, and we try to include retrofittability into their design," he said. "We have to create this concept where, if we need to modernize this design, we can."

Looking back to when the project began two years ago, Duong remains confident that the design was the right bet for the years ahead. "As a team that is always trying to predict the future a little bit, there's a lot of misses," he said.

"We have designs that are potentially more future-facing, but we're just not going to need it. We prepared ourselves for AI before this explosion, and when AI became a big push [for Meta] it just required us to insert the technologies that we've been evaluating for years into that design."