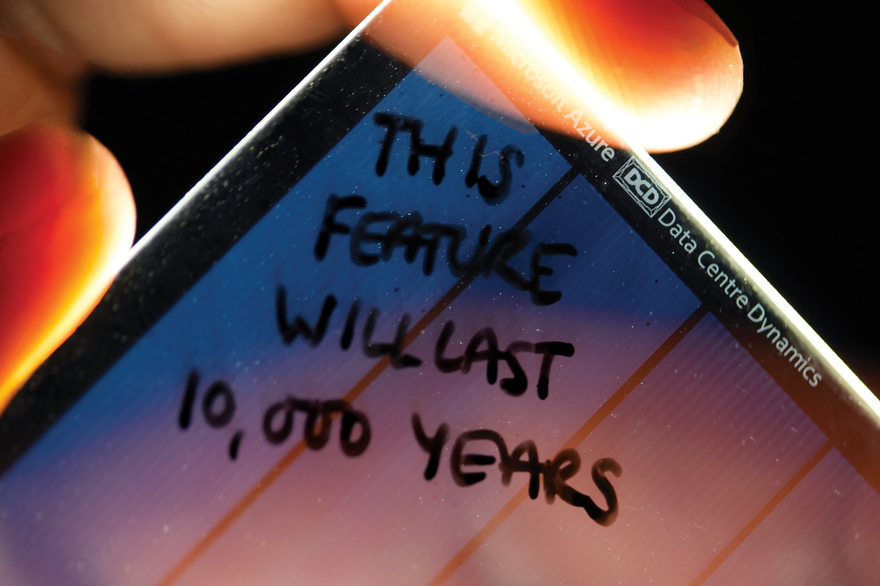

This feature will last 10,000 years. To the people of the year 12024, we hope more has remained of our time than a single article on data centers.

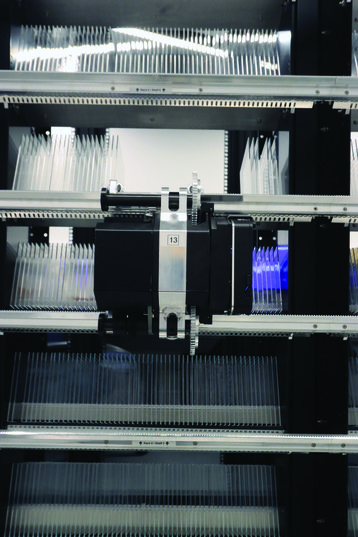

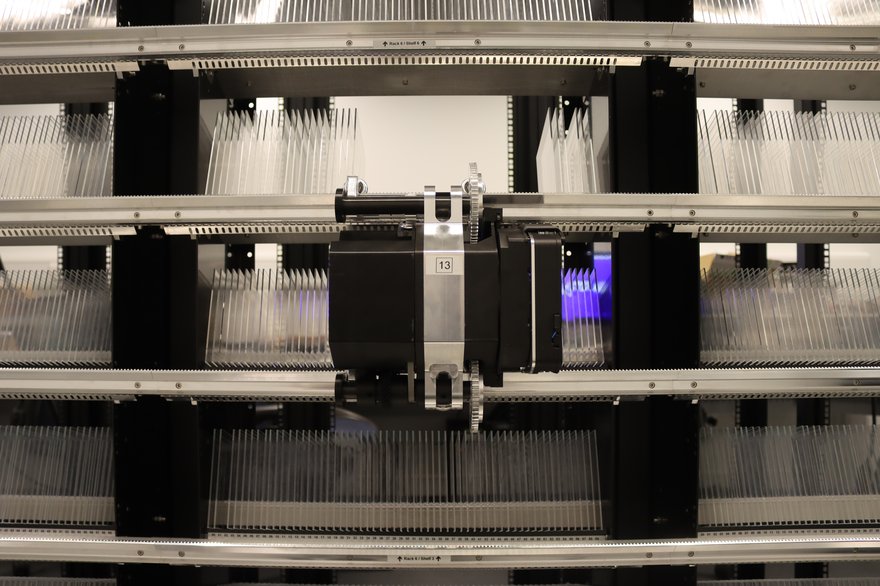

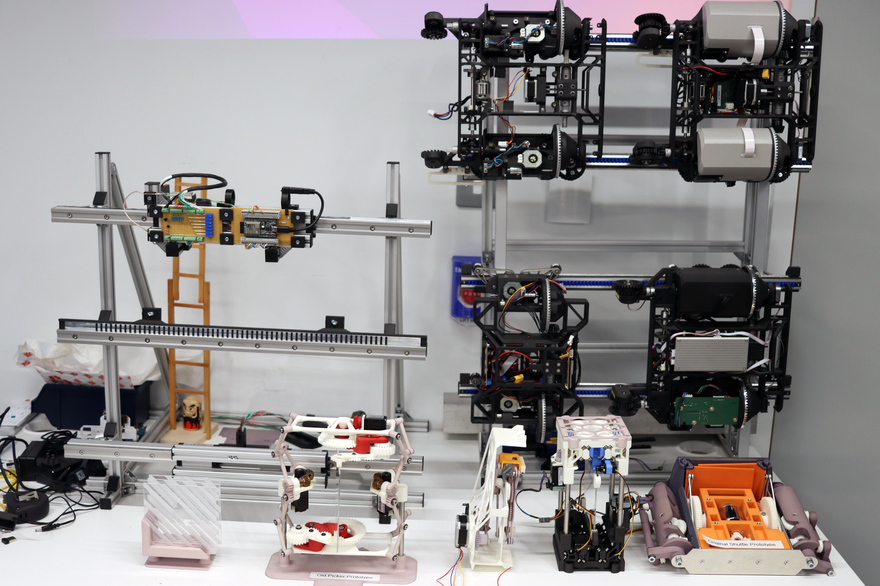

The future of the past starts in a basement in Cambridge, UK. Crab-like robots scoot along rails at high speeds, stopping suddenly to carefully pick up small platters of laser-inscribed knowledge, ready to ferry them back to AI-assisted cameras.

But, for all the modern machinery, at the core of this library is a technology first discovered some 3,500 years ago when craftsmen on the banks of the Tigris began mixing sand, soda, and lime: glass.

Over the centuries, glass has become a medium for artistic expression, has brought light into people's homes through windows and bulbs and, most recently, has formed the backbone of the Internet as optical fiber.

Now, it could have another use: to store the world's knowledge.

At a time when we are producing more data than ever, the planet’s data centers are struggling to keep up. Even if we could manufacture enough hard drives, flash drives, and tape to store everything, we’d soon need to move the data once again as the equipment ages and begins to fail.

HDDs generally live three to five years, SSDs are lucky to reach 10, and tape is sold with promises of 15-30 years - but only as long as temperature and humidity are carefully controlled.

In this, our most recorded age, data is set to be lost as companies and governments either choose not to store it due to cost, or simply fail to migrate it when their devices age.

This feature appeared on the cover of Issue 53 of the DCD Magazine.

In search of captured time

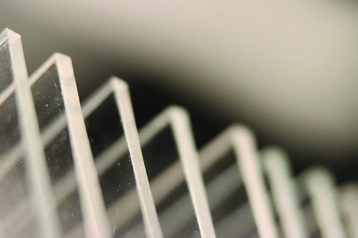

Over a decade ago, and about a hundred miles southwest of the glass basement, researchers at Southampton University discovered a previously unknown property of glass: femtosecond-length laser pulses could leave precise deformations in it, and changing the laser's polarization would change the orientation of these imprints.

These imprints could then be ‘read’ with a microscope, with the scars in the glass interpreted as data. The university first demonstrated a 300KB glass storage system in 2013, and has since postulated a potential 360TB disc that would last billions of years.

When the glass deformation research first came out, Richard Black was trying to improve hard drive storage. "We were working on this system called Pelican, basically trying to get the lowest cost hard drive storage you could possibly imagine," he recalls over lunch in Microsoft’s Cambridge cafeteria. "At the time, there were about 24 HDDs in a 4U deployment, and we managed to pack 1,152 3.5-inch HDDs in 52U."

Black's team focused on keeping costs low, including energy usage - "We'd keep the drives spun down as much as possible, and we only had one fan to cool the whole system,” he says.

“It was a fun project and had some impact, but we realized that the medium was actually where the problem was.”

HDDs cost too much, their lifetime was too short, “and an annual failure rate of 3-5 percent means that your hard drives are failing at the rate of one a week just in that single rack,” he says.
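Black's figures hold up to some back-of-the-envelope arithmetic. The sketch below assumes failures are spread evenly across the year, which real drive populations don't strictly follow. On that assumption, a 3-5 percent annual failure rate across 1,152 drives works out to between roughly two-thirds of a drive and just over one drive a week, so "one a week" sits at the upper end of the range.

```python
def failures_per_week(drives: int, annual_failure_rate: float) -> float:
    """Expected drive failures per week, assuming failures are spread evenly over a year."""
    return drives * annual_failure_rate / 52


def drives_per_u(drives: int, rack_units: int) -> float:
    """Average number of drives packed into each rack unit (U)."""
    return drives / rack_units


# Pelican figures quoted above: 1,152 drives in 52U versus about 24 drives in 4U.
pelican_density = drives_per_u(1152, 52)   # roughly 22 drives per U
typical_density = drives_per_u(24, 4)      # 6 drives per U
low = failures_per_week(1152, 0.03)        # about 0.66 failures a week
high = failures_per_week(1152, 0.05)       # about 1.1 failures a week
```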

“We just realized that, in the archival space, we needed a better medium. And then, pretty much simultaneously, Southampton published this paper going ‘modified glass crystal is immutable.’”

Microsoft partnered with Southampton for the first iteration of what would come to be known as Project Silica, but has since forged ahead on its own.

After years in the lab, the company is in the early stages of thinking about rolling out the product through its Azure cloud, in a move that could have a profound impact on archival storage, cold storage data center design, and how we choose what to keep for the future.

The librarian

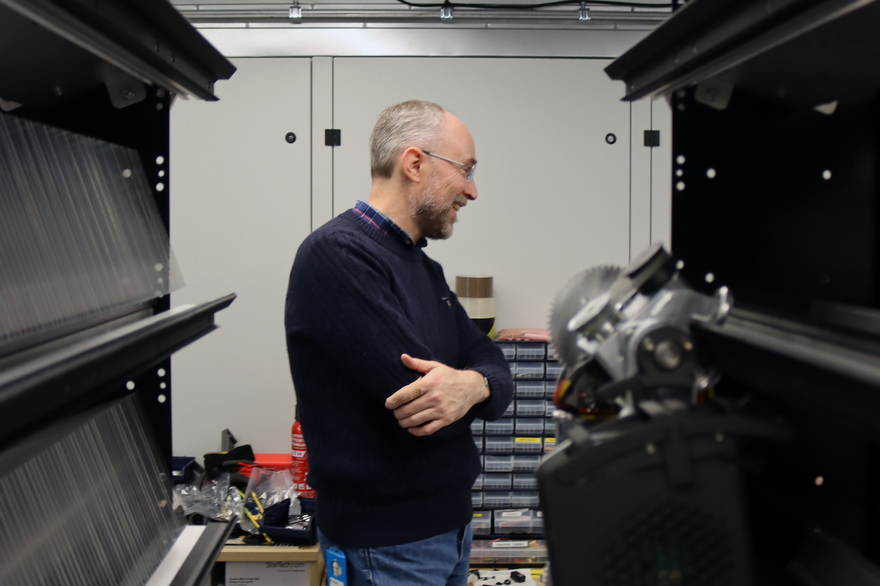

Black won’t stop talking. We’re behind schedule for our tour of the Project Silica research lab as he jumps from discussing optics and Rayleigh scattering to RAID storage to the costs of femtosecond lasers.

"It's always exciting to talk about the tech," Black says, gently holding an older iteration of the quartz glass Silica in his hands, which was itself holding a complete copy of Microsoft Flight Simulator.

But, for all the transparency of the medium and the tour, Black was coy about some crucial details of the latest version of Silica, including its density, current read and write speeds, and even its exact final size. "We've had a number of breakthroughs in the last few years that we don't want people to know about just yet," he teases.

Microsoft agreed to store this article on Silica (along with the rest of DCD's historical text and magazine output), but again has done so on an older version of the technology. The company does not have copy approval over this feature.

Black gave us about 100GB to play with - Microsoft has since confirmed 4TB and 7TB versions. But asking how high they can go is the wrong question, he says.

"We pushed the density a lot. Eventually, the Azure business asked us to stop," he says.

Everybody focuses on density for three reasons, he believes: 1) when people have to carry a device around, they think about how much they can fit in their pockets, which isn't a concern for archives; 2) media eventually dies, and when people replace it they want a bigger one; and 3) cost.

That third point is a critical part of the sales pitch behind the Silica research effort. With HDDs, flash, or even tape, the medium is expensive - and even more costly over a long time period where it has to be replaced repeatedly.

Glass, on the other hand, is incredibly cheap and durable. Silica can happily survive being baked in an oven, microwaved, flooded, scoured, demagnetized, or exposed to moisture, and will do so for at least 10,000 years. Over those longer timescales, this means it can outlast many possible threats.

More immediately, it also means that it doesn't need any energy-intensive and costly air conditioning or dehumidifiers to keep it from rotting away. Once the data has been inscribed, Silica's cost is "basically warehouse space," Black says.

"We're competitive with Linear Tape-Open (LTO) on density, and that's why Azure said to us: 'stop pushing density, push other aspects of the project.'"

Like tape, but unlike HDDs and SSDs, Silica is also true cold storage. It requires no power to maintain it in its rest state. “Eventually, when the time comes, we know how to recycle glass,” Black says.

The main cost remains the femtosecond lasers, which must fire pulses lasting on the order of 10⁻¹⁵ seconds, creating plasma-induced nano-explosions that leave microscopic bubbles in the glass. Microsoft is hopeful that the technology will follow the trajectory of nanosecond and picosecond lasers and drop in price as it matures and grows in use.

Even if cost doesn’t drop dramatically, the cloud’s economics favor Silica. Black notes that Azure currently does not sell storage by technology type but by ‘tier.’

The company could simply sell an archival tier, and Microsoft would have “complete control over when we actually move it from [more traditional storage] to glass,” Black says, which would allow the company “to schedule that in a way that lets us run our writers flat out,” maximizing the use of the expensive lasers.

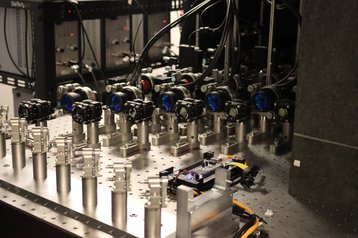

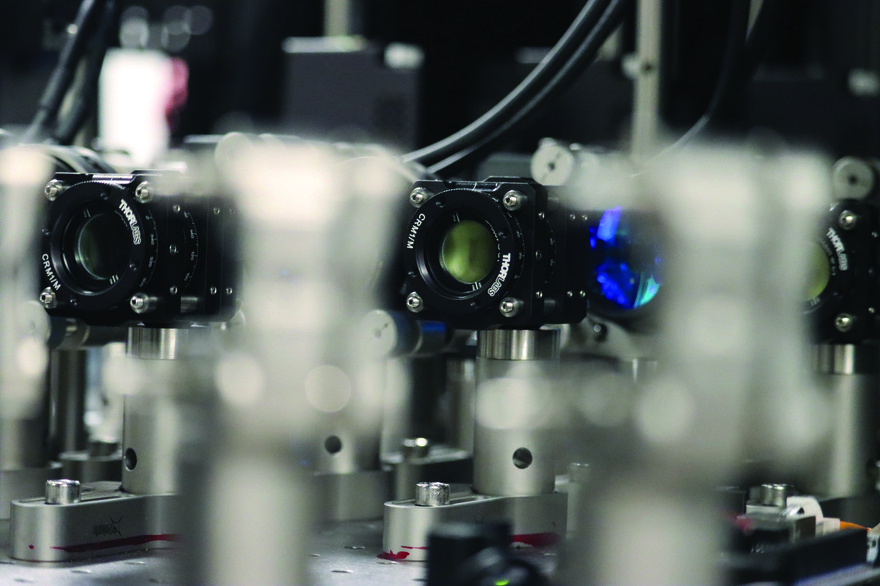

Black takes us to see one of the laser-based data-writing stations. It sprawls across a large table; lenses and mirrors protrude at different angles, while cameras and sensors abound. For safety, the laser is not operating as Black prods at different components (it is also why the technology will never be available for consumers).

The production version will be much smaller. Many of the sensors are only for research, and the system has been designed to be quickly upgraded and changed on the fly.

“When I first saw HoloLens, it was much larger than this,” Black says, referencing the company’s mixed reality headset effort. “Microsoft has a business unit that is comfortable with optics,” he adds, suggesting that the HoloLens team may help with the commercialization of Silica’s equipment.

Were Black to switch the laser on, the beam would be split into seven parts (the number differs on the version we were not able to see), which would simultaneously imprint data on the glass.

“We've got this thing here called a polygon,” he says. “Rather than trying to spin a piece of glass, what we actually do is spin the light across it, a bit like a barcode scanner at a supermarket checkout.”

The process starts from the base of the platter “so that you're always writing through pristine glass, you're not picking up any noise,” Black says. “It’s like pouring layers of cement that fill up layer by layer on the way to the top.”

Each little bubble is a voxel that represents data, with the laser able to form voids at any orientation across a 180-degree range. “If you can differentiate between four symbols, then you can store two bits in one symbol,” Black says. “If you can differentiate between eight different symbols, you can store three bits in one symbol.”

Southampton got all the way up to seven bits in a single voxel, “but that takes a huge number of pulses from the laser and leaves a big flipping shiner in the glass,” which slows down writing speeds and limits how many voids you can fit on the platter.

“Around two to three bits is where you want to be, where we've got these nice little gentle bruises,” he says. “It’s only a small amount of energy to make each one, and you can use all that other energy for doing hundreds of them simultaneously, packing them in.”
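The relationship Black describes between symbols and bits is a base-2 logarithm: a voxel with N distinguishable states carries log2(N) bits. The sketch below illustrates it with a hypothetical scheme in which each symbol is a void orientation spread evenly across 180 degrees, and decoding snaps a measured angle to the nearest ideal one. It is not Microsoft's encoding, which the company has not published; it ignores every other property of a voxel and, for simplicity, only handles symbol widths that divide a byte evenly (1, 2, 4, or 8 bits).

```python
import math


def bits_per_symbol(num_symbols: int) -> float:
    """Bits carried by one voxel that can take `num_symbols` distinguishable states."""
    return math.log2(num_symbols)


def orientation_angles(bits: int) -> list[float]:
    """Spread 2**bits orientations evenly across 180 degrees (hypothetical scheme)."""
    n = 2 ** bits
    return [i * 180.0 / n for i in range(n)]


def encode(data: bytes, bits: int = 2) -> list[float]:
    """Split each byte into `bits`-wide symbols and map each symbol to an angle."""
    assert 8 % bits == 0, "this sketch assumes symbols divide a byte evenly"
    angles = orientation_angles(bits)
    mask = (1 << bits) - 1
    out = []
    for byte in data:
        # Walk from the most significant symbol to the least significant one.
        for shift in range(8 - bits, -1, -bits):
            out.append(angles[(byte >> shift) & mask])
    return out


def decode(measured: list[float], bits: int = 2) -> bytes:
    """Snap each measured angle to the nearest ideal orientation and repack into bytes."""
    angles = orientation_angles(bits)
    per_byte = 8 // bits
    symbols = []
    for a in measured:
        # Orientation wraps around at 180 degrees, so measure distance on that circle.
        dist = [min(abs(a - x) % 180, 180 - abs(a - x) % 180) for x in angles]
        symbols.append(dist.index(min(dist)))
    out = bytearray()
    for i in range(0, len(symbols), per_byte):
        byte = 0
        for s in symbols[i:i + per_byte]:
            byte = (byte << bits) | s
        out.append(byte)
    return bytes(out)
```

In this toy model, two bits per voxel puts neighboring orientations 45 degrees apart, so a reading can drift by less than 22.5 degrees and still decode correctly; at seven bits (128 orientations), that margin shrinks to under a degree.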

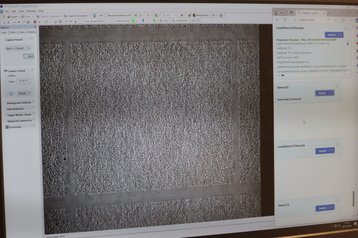

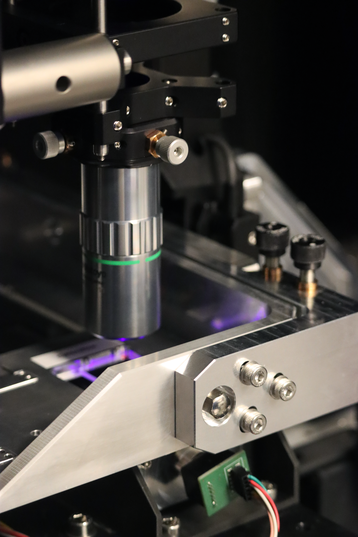

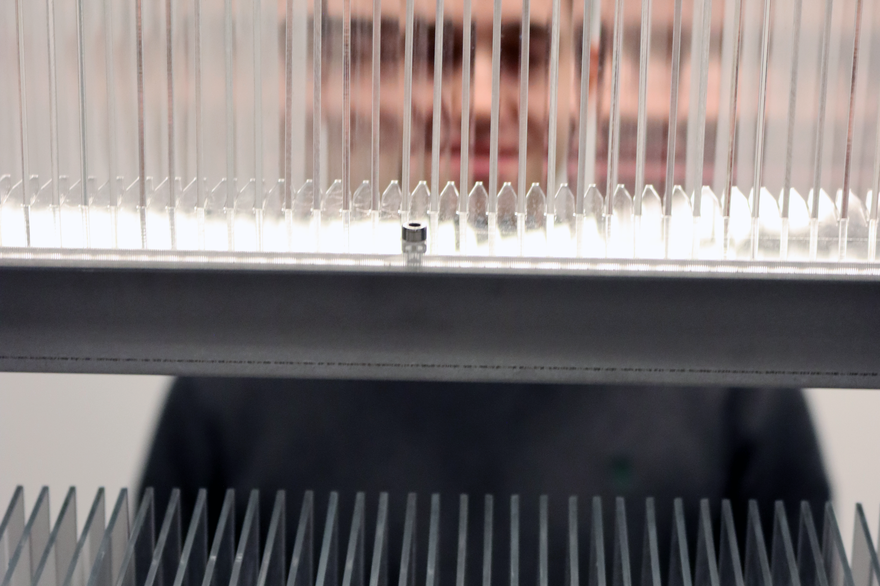

In an adjacent room, we get to see those voxels up close. It’s another large table brimming with lenses, wires, and exotic apparatuses. In the middle sits a square of glass, watched closely by a camera at the end of a series of lenses and mirrors.

“You have hundreds of layers of data in that two millimeters of glass,” Black says. “What we do is we sweep the glass through the focal plane of the microscope. So, as it comes into focus, you snap the data.”

The demonstration is slowed down so that our human eyes can keep up. The platter is moved so that the right sector of data is in the camera’s view, and then it takes photo after photo of the different layers of voxels.

Understanding Silica

Those images are the first step in converting the analog marks in the glass back into data that digital systems can use.

“Since the beginning of the project, we saw an opportunity to leverage deep learning in image understanding and signal processing,” Ioan Stefanovici, principal research manager in the cloud infrastructure group, tells us.

“We found that machine learning consistently outperformed all of the traditional signal processing approaches, and it was quicker to get results.”

Stefanovici adds that using artificial intelligence will speed up the final system and allow for faster iteration during research. Most crucially, it will help the company fit more on a single platter “because you need to write less error correction as your error rate will be lower.”
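Stefanovici's point about error correction can be made concrete with a simplified model. The sketch below swaps in a Reed-Solomon-style code, which can correct up to t faulty symbols in a block by spending 2t symbols on parity, and assumes symbol errors are independent. The block size of 255 and the one-in-10¹⁵ failure target are illustrative choices, not Silica's parameters, which Microsoft has not disclosed. A lower raw error rate lets the code get away with fewer parity symbols, leaving more of each block for data.

```python
import math


def tail_prob(n: int, p: float, t: int) -> float:
    """P(more than t of n symbols are wrong), assuming independent errors at rate p."""
    return sum(math.comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(t + 1, n + 1))


def payload_fraction(p: float, n: int = 255, target: float = 1e-15) -> float:
    """Share of a Reed-Solomon-style block left for user data.

    Such a code corrects up to t symbol errors using 2t parity symbols, so pick
    the smallest t whose chance of being overwhelmed is at most `target`.
    """
    for t in range(n // 2 + 1):
        if tail_prob(n, p, t) <= target:
            return (n - 2 * t) / n
    return 0.0  # no affordable amount of parity meets the target
```

Calling `payload_fraction` with error rates of 1e-2, 1e-3, and 1e-4 shows the share of each block available for data rising as the error rate falls.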

Training the AI was, to some extent, easy. While larger models today are pushing the limits of recorded knowledge to build their systems, the Silica team had the enviable position of being able to create data whenever they needed it.

If they wanted more images of voxels in glass, they’d simply laser another platter and put it in the microscope. “We're in the unique position that we can create as much training data as we want,” Stefanovici says.

It has yet to be decided where that AI will run and whether it’ll be on a custom ASIC or something else. But the team wants to ensure that any future AI can survive as long as the glass itself.

“With every piece of glass we write, we put enough training data in to be able to rebuild the model if needed,” Black says. “It takes a tiny amount of extra data. And it means that, just from that piece of glass, you could rebuild your model and do a decode.”

Stefanovici adds: “Every piece of glass is completely self-describing, so you can bootstrap from scratch and recover your data regardless.”
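What "self-describing" might look like in practice can be sketched as a container format. In the hypothetical layout below (the `SLCA` marker, the field order, and the JSON metadata are all assumptions, not Microsoft's format), a fixed header records how long each section is, so a reader that knows nothing else can locate the encoding parameters, the embedded training samples for rebuilding the decoding model, and the payload itself.

```python
import json
import struct

MAGIC = b"SLCA"  # hypothetical 4-byte marker identifying a platter image


def pack_platter(payload: bytes, training: bytes, encoding: dict) -> bytes:
    """Lay out a hypothetical self-describing platter image."""
    meta = json.dumps(encoding, sort_keys=True).encode("utf-8")
    # Header: marker, then three big-endian uint32 lengths for the sections that follow.
    header = MAGIC + struct.pack(">III", len(meta), len(training), len(payload))
    return header + meta + training + payload


def unpack_platter(blob: bytes) -> tuple[dict, bytes, bytes]:
    """Recover (encoding metadata, training samples, payload) using only the header."""
    if blob[:4] != MAGIC:
        raise ValueError("not a platter image")
    meta_len, train_len, payload_len = struct.unpack_from(">III", blob, 4)
    pos = 4 + struct.calcsize(">III")
    meta = json.loads(blob[pos:pos + meta_len])
    pos += meta_len
    training = blob[pos:pos + train_len]
    pos += train_len
    payload = blob[pos:pos + payload_len]
    return meta, training, payload
```

The design choice is that nothing outside the image is needed: the header says where everything is, and the metadata says how to interpret it.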

As Stefanovici leaves to continue iterating on the AI, Black is keen to note that they are not the only creators of this technology. The company, he says, has leaned on a number of different divisions and specialisms to bring Silica to this point.

Perhaps nothing better testifies to the different disciplines at work than the robots in the basement.

The first thing you notice about them is their speed. The second thing you notice is their shape.

There is a joke among evolutionary biologists that, given enough time, everything turns into crabs. English zoologist Lancelot Alexander Borradaile was the first to notice the process of carcinization, coining the term in 1916.

In at least five different instances, distinct species have separately evolved into crab-like creatures. With these robots, we have a sixth case.

Bots for bits

There are several of them.

They race along rails, hooked legs hanging onto a rail above and below. One stops, a grabber on its right side carefully extending to softly retrieve a Silica platter for carrying to a microscope-based read drive.

Then it lets go of the rail above it, its wheeled feet reaching out into thin air. The whole system flips around. It has dropped a rung, with what were its bottom feet now at its top.

This allows for a small number of robots to serve rows and rows of Silica platters, clambering up and down racks in search of the right piece of glass. Should one robot fail, another could go around it with relative ease.

As with traditional media, Microsoft uses erasure codes, of the kind also used in RAID (redundant array of independent disks), to spread redundant data around the library, so data isn’t stranded behind any broken robots. This differs from a tape library, which is often serviced by one large robotic arm that can disrupt the whole system if it breaks.
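The simplest erasure code, the single XOR parity used in RAID 5, shows how a library can route around a lost platter. In the sketch below, each hypothetical stripe stores data blocks on different platters plus one parity block, and any one missing block can be recomputed from the survivors. Microsoft has not detailed the codes it uses for Silica, and production systems generally use schemes such as Reed-Solomon that tolerate several simultaneous losses.

```python
from functools import reduce
from typing import Optional


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def add_parity(data_blocks: list[bytes]) -> list[bytes]:
    """Append one parity block so any single block in the stripe can be rebuilt."""
    return data_blocks + [xor_blocks(data_blocks)]


def rebuild(stripe: list[Optional[bytes]]) -> list[bytes]:
    """Recover at most one missing block (marked None) by XOR-ing the survivors."""
    missing = [i for i, block in enumerate(stripe) if block is None]
    if len(missing) > 1:
        raise ValueError("single parity can only rebuild one lost block")
    restored = list(stripe)
    if missing:
        survivors = [block for block in stripe if block is not None]
        restored[missing[0]] = xor_blocks(survivors)
    return restored
```

For example, if the platter holding the second block sits behind a stalled robot, `rebuild` recovers it from the other two data blocks and the parity block.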

Each robot is battery-powered and standalone, a critical design choice, Black says. “We absolutely wanted the shuttle robots to be untethered, and wanted the storage racks to be completely unpowered. If you attach power, then you have all these electronics that have a lifetime and need monitoring.”

All of that equipment has a finite lifespan that could render the project obsolete before the death of the media itself. In the case of Silica, the shelf life of the system is the life of the shelf itself - or of the building it is stored in.

“So basically, the shelving, the glass, the building - which wears out first?” Black says. “Probably the building - once you're at that point, whether it's 1,000 years or 10,000 years, it's a bit moot.”

The robots may also have a more immediate impact on the data center sector, with researcher Andromachi Chatzieleftheriou confirming that Microsoft Research is “starting to think about how we can use robots for data center operations,” as she checked a cloth covering non-Silica robot prototypes that we weren’t allowed to see.

Last October, DCD exclusively reported that the company was hiring a team to research data center automation and robotics. On LinkedIn, those hired say that they are working on "zero-touch data centers."

Microsoft also has a broader robotics effort, one led by former DARPA program manager Dr. Timothy Chung, who previously led the OFFensive Swarm-Enabled Tactics program and the DARPA Subterranean (SubT) Challenge.

For these crab robots, speed is an important part of reducing the time it takes to access Silica data: the sooner they can shuttle a platter back to a camera, the sooner processing can begin. Reading is where things slow down, although Microsoft won’t say just how long it expects that to take.

“It's definitely still targeting the archival space,” Black says. “In that space today, the standard is 15 hours.” Reading from tape is faster, but only once you’ve reached the right part of the tape: in reality, the system has to spool through a kilometer of tape to find the data, and then wind it back up.

“If you want milliseconds, use a hard drive,” Black says. “You’re definitely not online.”

For archives, that is part of the selling point. The tech is ‘write once, read many’ (WORM), so it cannot be altered after the fact by those looking to rewrite history, nor is it at risk from ransomware attacks.

Back up a bit

To understand what a technology like Silica could mean for archivists, we turned to John Sheridan at the National Archives, the UK government’s official archive.

He wants to talk about sheep.

"Medieval England had an economy based on sheep - just look at the wool churches in the east," he says, referencing the vast churches funded by pious wool magnates that dot the nation.

"Because there were a lot of sheep, it was easy to lay your hands on sheepskin parchment," Sheridan continues, growing animated. "And, consequently, medieval England is particularly well documented because parchment has amazing preservation properties."

In the 17th century, sheepskin had another revival. Lawyers discovered that the layers of flesh acted as an immutable record, "so if you try and alter the record, it shows on the skin."

Sheridan adds: "There's a long-standing relationship around the transaction cost of recording information and the cost of keeping information that has a really big bearing on what gets recorded and what gets kept. Long term storage is not new; we have a building full of parchment."

What is new is the level of density that a modern technology like Silica could provide. All the world’s 1.2 billion sheep could record but a fraction of what could fit in a single Silica warehouse.

Our understanding of the past is intrinsically linked to the choices of contemporary individuals and the medium they used. “Most civilizations wrote on perishable degradable organic materials: wood, bamboo slips, textiles, paper, parchment,” Curtis Runnels, professor of archaeology, anthropology, and classical studies at Boston University, tells us.

“This means that the lion's share of all written texts are gone forever.”

We don’t know how much we’ve lost - the best we can do is make guesses from what remains. “The ancient Mesopotamians and the Hittites wrote on clay tablets which they fired to preserve; the Hittite archive runs to more than 25,000 such tablets,” says Prof. Runnels.

“That is a very rare exception. When you consider that the Library of Congress or the British Library each have in excess of 100 million texts, you get an idea of the scale of the loss from ancient empires.

“Almost all the thoughts of humans who have ever existed are lost. A tiny bit of human thought and experience has slipped through the memory hole. How would we measure the loss if the ancient scriptures (like the Torah or New Testament or Bhagavad Gita), poems (Iliad, Odyssey, Aeneid, Mahabharata), philosophy (Lao Tzu, Buddha, Plato, Aristotle), and science (Archimedes, Euclid) [were lost]? Yet they are only 0.001 percent of the accumulated store of human knowledge.”

He adds: “But what we have is priceless.”

We ask Runnels, who was not aware of Silica, about what we could do to ensure that the texts of today would not be lost. “Inscribe the text in multiple scripts and a key for decipherment (like a chart/sound correspondence code/microchip) inside of some material like glass, say in a glass block,” he says.

“Then make many copies and distribute them around the world (several hundred thousand per continent) each block marked with huge structures or monuments like towers. One might get through to the future.”

A message through time

Time is on Jonathon Keats' mind. The conceptual artist and experimental philosopher has long created projects focused on exploring our epoch and what comes next.

His latest work centers around two cameras: one that lasts 100 years, and another that lasts 1,000. The first, the Century Camera, is an inexpensive pinhole system developed with UNESCO, and people are encouraged to hide them by the hundreds across the world.

"Most of them are going to fail, but it's cheap enough that we can have redundancy,” he says.

The Millennium Camera is more expensive, and is only being installed in a few specific locations, including upcoming deployments in Los Angeles and the Swiss Alps.

As we speak, the sun is shining on Tumamoc Hill, overlooking Tucson. A few photons of sunlight make their way through a tiny pinhole in a thin sheet of 24-karat gold housed in a small copper cylinder perched on top of a steel pole.

True to its temporal nature, the technology behind this camera is old. “It’s a concept from about 500 years ago,” Keats says. “Basically, you rub the copper with pumice, and then you rub it with garlic - nobody even knows why you rub it with garlic, but it helps to bind the oil.”

That oil, itself a technology traced back to at least the ancient Egyptians, is then glazed to leave a millennium-long exposure on the back of the copper cylinder. “There are myriad reasons why this is likely to fail,” Keats says.

“First and foremost, we're in beta, nobody's ever done it before, I have no real way in which to be able to iterate, given that I don't have 1,000 years to live to be able to create my first prototype.”

Therein lies one of the fundamental challenges of any signal to the future. With the Internet’s Transmission Control Protocol, or TCP, an acknowledgment - or return signal - is a core part of any communication that is sent from one system to another.
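The acknowledgment loop can be illustrated with a stop-and-wait protocol, a much simpler relative of TCP, which adds sliding windows, cumulative acknowledgments, and adaptive timers. In the sketch below, the lossy channel is simulated with a random number generator and the loss rate is a hypothetical parameter. The sender keeps retransmitting each message until an acknowledgment comes back, and sequence numbers let the receiver ignore duplicates caused by lost acknowledgments.

```python
import random


def send_with_ack(messages: list[str], loss_rate: float, rng: random.Random,
                  max_tries: int = 100) -> tuple[list[str], int]:
    """Stop-and-wait delivery: resend each message until its acknowledgment arrives."""
    received: dict[int, str] = {}  # receiver side: sequence number -> message
    attempts = 0
    for seq, msg in enumerate(messages):
        for _ in range(max_tries):
            attempts += 1
            if rng.random() < loss_rate:
                continue                   # message lost in transit, so no ACK comes back
            received.setdefault(seq, msg)  # a duplicate after a lost ACK is ignored
            if rng.random() >= loss_rate:
                break                      # ACK arrived: move on to the next message
        else:
            raise TimeoutError(f"no acknowledgment for message {seq}")
    return [received[s] for s in range(len(messages))], attempts
```

On a perfect channel, every message needs exactly one attempt; as losses rise, the sender simply tries again, something a message sent to the future can never do.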

“Here's the rub,” archivist Sheridan says. “If you're sending messages through time, the future can't send you the acknowledgment 'message received.' You’ve got to spread your bets, you’ve got to have redundancy.”
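Sheridan's advice, like Keats' cheap cameras and Runnels' hundreds of thousands of glass blocks, rests on simple probability. If each copy has some chance of surviving, the odds that at least one gets through are one minus the odds that every copy fails. The sketch below assumes copies fail independently, which is optimistic: a shared disaster, or a format nobody can read, can take out many copies at once, which is one reason to scatter them widely.

```python
import math


def survival_probability(p_survive: float, copies: int) -> float:
    """Chance that at least one of `copies` independent copies survives."""
    return 1 - (1 - p_survive) ** copies


def copies_needed(p_survive: float, target: float) -> int:
    """Smallest number of independent copies giving at least `target` odds of one surviving."""
    if not (0 < p_survive < 1 and 0 < target < 1):
        raise ValueError("probabilities must lie strictly between 0 and 1")
    return math.ceil(math.log(1 - target) / math.log(1 - p_survive))
```

For instance, if each copy had a hypothetical 10 percent chance of lasting, 44 copies would give better than 99 percent odds that at least one does.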

Symbols without meaning

Should our message survive through unknown millennia, it is also not clear that our glass-encased words will even be understood.

"It is not a possibility but rather a certainty that any given language will have considerably evolved 10,000 years from now," Filippo Batisti, postdoc in philosophy of language and mind at the Catholic University of Portugal in Braga and co-founder of the Cognition, Language, Action, and Sensibility – Venetian Seminar (CLAVeS) in Italy, says.

"Small changes happen all the time in front of our eyes and their sum over decades amounts to grandchildren talking and writing somewhat differently than their grandparents."

Over a couple of generations, mutual intelligibility can be mostly preserved - but as time stretches on, that link begins to fray. "Linguistic intercomprehension will become the first concern," warns Batisti.

"Gutenberg and computer writing are separated by a mere five-and-a-half century interval: Here, we're talking seventeen times this difference in a world where technological progress is much faster!"

It is not inconceivable that a future civilization would turn to AI to help decipher any discovered texts - or that the civilization could simply be an AI.

Already, we can see how our primitive technology can resurrect dead and forgotten languages. This past year alone, there have been “two marvelous breakthroughs, one with the Kushan Script and the other the Herculaneum papyrus rolls,” archaeologist Runnels says, with AI helping unlock new understandings of life in Central Asia and insight into a city destroyed by Mount Vesuvius’ eruption.

AI “has proved to be very good at deciphering unknown scripts and, when combined with CT-scanning and other technologies, to be able to read charred or otherwise ‘unreadable’ fragments of ancient books. I think that we will see all the undeciphered scripts (and there are many) broken in the next five years at the most.”

Of course, we still suffer from the lack of contextual background that would help us fully understand the texts. “I can have all the AI translations of my words but our lack of comprehension is not only linguistic, they are not understanding the conceptual/cultural framing that makes me have that particular need,” Batisti says.

“This is more of an interpretative problem, rather than one of mere linguistic translation. AI-assisted translation will hardly be of help alone. This, incidentally, is also a very good reason to defend the value and the usefulness of the humanities against the ever-trending hyper-scientistic utopias.”

Lost in translation

Even if a future civilization relies on a language somewhat similar to our own, and is able to translate texts for their time, "the problem might be that entire sets of single words would turn out to have empty referents: entire concepts, taken in isolation, would then be lost," Batisti says.

"The longer the distance, the more the pieces of life (like material culture, or social norms, or modes of knowledge and beliefs) attached to words change. By then, our comprehension of physics or even medicine will be different and its social significance as well. Even words or concepts referring to our own body and biological features could be construed quite differently. That already happens today in different cultures around the world."

The archaeologists of today do not just rely on texts - they look for dwellings, statues, lost cities, and other civilizational detritus to help paint a picture of the past. Luckily for future historians, but unfortunately for everyone else, we are leaving a far greater message to our descendants.

Climate change, biodiversity loss, and plastic waste are but a few of the anthropogenic scars we will leave on our timeline. With or without Silica’s recordings, a successor civilization would be able to decipher our values and priorities from our actions.

Artist Keats hopes that his work, and that of others, will help people think at longer time scales to understand the compounding effects of loss and change.

“It is essential that we be able to situate ourselves in relation to the deep past and to the future,” Keats says. “By virtue of the fact that what we do will persist for a long time, we are responsible to the future.”

That said, he warns that such a view can also be coopted to justify anything in the now. “There are many more generations that will live after us than that have lived before us,” he says. “And if we think about suffering, and we take a utilitarian way of considering suffering, then the far future as a whole is far more important than the present,” a concept that can allow for dangerous moral assertions.

“We are in the present and we need to be living fully in the present in order to be able to make the kinds of decisions that are actually going to have a positive impact on the future. It’s a delicate balance.”

That present is one increasingly dominated by AI.

Generative AI, in particular, has become the technology of our age - or, at least, has been hyped up to be so. The largest hyperscale cloud companies, including Microsoft and its competitors, have announced record investments in data centers and servers as they gear up for a profound jump in the computational capabilities of our species.

This moment also represents another delicate balance for archivists and others interested in recording the world, one of great opportunity and even greater risk.

An AI audience

Generative models are hungry. Current approaches have seen companies circumvent copyright laws to ingest most of the Internet and a huge number of the world’s texts to help the models grow dramatically smarter over the past year.

This feature, once published, will soon be scraped and added to the great slop of data that is being pumped into the next wave of models. But it’s not enough. To keep growing, the models need ever more data, and they are running out.

One way to circumvent this has been for companies to feed their models synthetic data (knowingly or unknowingly), allowing them to create the data they need. But this can lead to model collapse, as the models drift ever further from baseline reality.
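Model collapse can be seen in a common toy setting, far removed from how any production model is trained. In the sketch below, each "generation" fits a Gaussian to a small sample drawn from the previous generation's fit and never sees the original data again. Because every fit sees only a finite sample, rare values in the tails tend to go missing and the estimated spread tends to drift downward, so the distribution narrows over generations. The sample size and the number of generations are arbitrary choices.

```python
import random
import statistics


def collapse(generations: int, n: int, seed: int = 0) -> list[float]:
    """Repeatedly refit a Gaussian to samples drawn from the previous generation's fit."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: the "real" data distribution
    history = [sigma]
    for _ in range(generations):
        samples = [rng.gauss(mu, sigma) for _ in range(n)]
        mu = statistics.fmean(samples)
        # Population (maximum-likelihood) estimate, which is biased low for small n.
        sigma = statistics.pstdev(samples)
        history.append(sigma)
    return history
```

Running `collapse(200, 10)` and inspecting the last few values shows how little of the original spread survives once each generation learns only from the one before it.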

Another approach could be to digitize the treasure trove of data we have from the past, something that becomes more feasible as Silica or other technologies lower the cost of storage.

"I wonder whether it opens up new opportunities and new models for digitizing analog collections, because we've got hundreds of kilometers of records of humanity and less than 10 percent of that has been digitized," Sheridan, head of the National Archives' digitization efforts, says.

"I've been working at digitizing stuff really hard for a long time. But maybe the economics of digitizing the analog records of humanity - which are pretty extensive - shifts in a really profound and interesting way."

Signal through the noise

The models that we are building by mining our archives also risk polluting them.

“What we have now is a past that never existed,” says Andrew Hoskins, interdisciplinary research professor at the University of Glasgow and founding Editor-in-Chief of the journal Memory Studies.

“Large language models are regurgitating something that never was.”

There is no way to prove that this feature was written by a human. Its length and hopefully its clarity offer some hints, while - were one to invest the time to fact-check it - the lack of hallucinations and fabricated quotes offers another clue.

But that is hard enough today. For a future historian, perhaps sifting through countless records of multimedia content generated by AI models from the next decade, unable to call up sources, and lacking the contextual clues that might speak of human origin, what could they make of this text?

We are creating a great deal of noise that could deafen recorded reality, leaving mirages and illusions of ourselves alongside real videos and text.

The recorded self

Even without generative AI, the amount of data we produce is rising at a dramatic rate.

At the turn of the century, as the power of digital technology to record our lives became clear, "there was this obsession over total memory," Hoskins recalls.

Companies at the time pitched products that could record your life in full: “It was this bizarre advertising like, ‘you'll never miss your first kiss, you can go back and see it at any time.’” The technology was expensive, impractical, and clunky, and it never really took off.

“And then in the past two years, it's started to become a reality.”

As a culture, recording and sharing more and more of our lives has become the norm. Even if you try to limit your own sharing, interacting with modern society means your data will inevitably be stored.

Earlier this year, a report commissioned by the US Director of National Intelligence said that intelligence community (IC) member agencies "expect to maintain amounts of data at a scale comparable to that of a large corporation like Meta or Amazon," and raised concerns about their ability to hold all of this surveillance data.

The "IC has the potential to be one of the largest customers for cold data storage because of its wide-ranging need for information," the report states, laying out the problems of short-lived storage platforms: HDD density growth is slowing, SSDs don't last long enough, and tape will likely hit the superparamagnetic limit by the end of the decade, capping density improvements.

The report found that Microsoft’s Silica and rival Cerabyte’s ceramic storage were the only two technologies expected to be capable of storing the coming wave of IC data in the near term.

What we should forget

While the intelligence community will argue that widespread surveillance is key to national security, it continues to be an ethical morass that democracies have failed to fully debate or address.

The scale of the records we provide of ourselves, and that corporations and governments keep, is unlike anything we have ever maintained. An entry-level employee in a quiet backwater town will have more records kept about them than kings and emperors of the distant past.

Sometimes there is a value in losing data, argues Hoskins.

“Forgetting is not always a bad thing; societies need to forget enough to be able to move on,” he says. “My teenage years were not recorded; all the crappy things I did, no one knows about.”

Beyond his own youthful foibles, Hoskins wonders what else should be relegated to the dustbin of history. “Traditionally, the media that carries memory - paper, photographs, etc. - they yellow and fade and decompose in a natural way. That’s how societies forget; it’s a decay time.

“It's a natural thing for memories to disappear. The digital era, of course, just totally messes that up.”

What we might accidentally forget

At the same time, modern recordings suffer from a troubling flaw - they often require complex technologies to interpret them, including layers of proprietary software or always-online servers.

As an example, in “a pervasive software like Microsoft Word - what is encoded in the file and what Word computes when you open the file is not obvious, including to most users of Word,” archivist Sheridan says.

“Our systems have become so complex - we have no idea how anything works, because it has many, many layers of interconnected software and complexity.”

This presents a real “challenge for long term preservation as the systems that we need to use to render information over time become more complex, and the ability to preserve whole infrastructures doesn't look economic.”

Even if the economics are solved, closed source software and intellectual property issues can limit what archivists are able to keep.

“We think that having institutions whose job this is to solve is a really important thing,” Sheridan says. “It’s all the more important, because digital stuff doesn't keep itself, unlike parchment.”

The world’s library

With Silica, Microsoft could be set to store all these disparate worlds within its halls - the representations of real ones, the digital ones, the intentionally fake ones, and the hallucinated ones.

Beyond copyright laws and the broad guidelines of Microsoft Azure’s terms of service, it will not be Microsoft’s job to police and maintain what goes into the glass. Nor would we necessarily want that responsibility to lie with the world’s most valuable public company.

The company will own the technology (although others are working on alternative approaches, see box) and will probably offer Silica only as a cloud service. But it likely won’t be an aggressive gatekeeper over what gets stored on the glass, beyond checking who is willing to pay and who won’t break the law.

Instead, it will be down to all of us to decide what we create and record. Archivists will be on the front line of the fight to pass on knowledge to future generations.

Lowering the cost of storage, and the risk of loss, is just the beginning of what it means to store the world’s data. “Commoditized, low-cost long term storage gives digital archives the opportunity to put more of their effort into the stuff that is less well solved,” Sheridan says.

“If this is what this is, that's fantastic, because it means we can put more of our effort and energy into all the other parts of the jigsaw.”

Cost savings over the long term will be the thing “that will drive societal change,” Microsoft’s Black believes. “Whether it be hospitals keeping medical data or extractive industries keeping accurate details on what they did to the ground, when you move it to Silica that incremental cost just goes away.”

He expects a shift in how people treat data. He also expects regulations to extend how long different sectors must hold onto information, now that there are no technological or temporal limits to indefinite storage.

“If people internalize what it actually means, I think it's going to be a complete step change in how people think about data preservation,” colleague Stefanovici adds, pointing to how much scientific data from experiments currently goes unstored, and how much historical data simply no longer exists.

“We don’t need to have so much loss.”