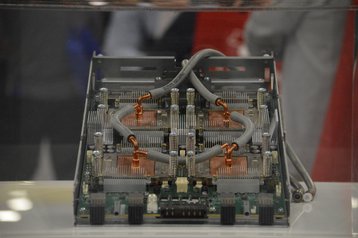

Liquid cooling, once the tool of extreme high performance computing and obsessed overclockers, continues to go mainstream with virtually every hardware vendor supporting and encouraging it to some degree.

The question is at what point do you need it, and how should you deploy it? Is it only for new data centers, or are retrofits possible?

At the rack and server level, virtually all conventional data centers rely on airflow for cooling. The traditional method of air cooling is a computer room air conditioner (CRAC) to cool the air as it enters the computer room, but in the last few years, the IT industry has gotten hip to free cooling, where just outside air is used and the chiller is eschewed. This makes the data center slightly warmer but still well within the tolerance of the hardware.

But even the chilliest of air conditioners will run up against the limits of physics. Eventually, there is simply too much heat for the fans to dissipate. That’s where water, or some other liquid, comes in. Air conditioners can move around 570 liters (20 cubic feet) of air per minute. A decent liquid cooling system can move up to 300 liters of water in that time but, since water has 3,000 times more heat capacity than air, the liquid cooled system is shifting about 1,500 times as much heat as its air-cooled rival.
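
The comparison above can be checked with a few lines of arithmetic. This is a minimal sketch, not a thermal model: it takes the article's round figures at face value, and it assumes the 3,000x heat capacity figure is per unit volume and that both fluids warm by the same number of degrees. The function and constant names are made up for illustration.

```python
# Figures quoted in the article (round numbers, not measurements).
AIR_FLOW_L_PER_MIN = 570       # air moved by an air conditioner per minute
WATER_FLOW_L_PER_MIN = 300     # water moved by a liquid cooling loop per minute
WATER_TO_AIR_CAPACITY = 3000   # assumed: heat capacity ratio per unit volume


def heat_transport_ratio(air_flow, water_flow, capacity_ratio):
    """Return how many times more heat the water loop carries than the air stream.

    Assumes both fluids see the same temperature rise, so heat moved is
    proportional to (volume flow) x (heat capacity per unit volume).
    """
    return (water_flow / air_flow) * capacity_ratio


ratio = heat_transport_ratio(
    AIR_FLOW_L_PER_MIN, WATER_FLOW_L_PER_MIN, WATER_TO_AIR_CAPACITY
)
print(f"Water moves roughly {ratio:.0f} times as much heat as air")
```

With these inputs the ratio lands a little under 1,600, which is consistent with the "about 1,500 times" figure quoted above.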

This feature appeared in the March issue of DCD Magazine.

Despite the hype, liquid cooling is still somewhat nascent, and data center providers rarely pitch it.

“We don’t actively recommend it,” says John Dumler, director of energy management for data center provider Digital Realty. “At this point it’s really a retrofit market. A customer can ask for a data center retrofit, while others are saying they would like to bring in GPUs, the data center has got the pipes and we want you to connect them.”

Brett Korn, project executive for DPR Construction, which builds data centers, isn’t seeing much activity either: “I see one project every year. It’s not done frequently,” he says.

So what’s the dividing line? It comes down to heat and economics.

Consideration #1: Old vs new

Don’t waste your money retrofitting old hardware. It’s not going to make much difference in terms of heat and the investment won’t pay for itself, says Jason Zeiler, product marketing manager with CoolIT, maker of enterprise cooling solutions.

“It’s pretty rare to see an existing data center retrofit any machinery,” he says. “The cost is just too prohibitive to take machines down and retrofit them to be worth the time and money. Early in CoolIT’s history we were involved with retrofits but they proved to not be beneficial for anybody involved.”

DPR’s Korn said his firm never takes the initiative in pushing liquid cooled upgrades. “The economics aren’t there to put a water cooling system if it was not client-driven, and I don’t see the economics that would allow you to do it in a pre-existing data center,” he says.

If you have a 250 square meter site, at what point do you say let me scrap the site and start over? It depends on the age of the site, said Korn. “If a lot of equipment is old you would have to ask why put good money into an old site. If a site is five or six years old and has a lot of life, then you might do an upgrade,” he says.

Consideration #2: Know your density

For Jason Clark, director of R&D for Digital Realty Trust, the most basic question is what the ultimate density will be and how many cabinets will be placed in close proximity.

“If a customer has one 25kW cabinet, that’s different from six cabinets as low as 18kW,” he says. “You tend to run out of runway [for air cooling] at 18kW racks. It’s very situational but where things get challenging is above 18kW.”

The density consideration applies not just to compute gear but to networking equipment as well. If it’s packed in tight with the servers, that might also be a good reason for using liquid cooling, says Clark. “With machine learning or AI boxes, they need to be very close together for high speed networking. That increases heat density,” he says.

Zeiler said anything over 30kW is where CoolIT draws the line. “Any time we see a customer planning a new data center with a density over 30kW per rack is a strong indicator for liquid cooling to optimize performance. Anything above that starts to overload regular air systems,” he says.
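
The two thresholds quoted in this section can be written down as a rough first-pass check. This is only a sketch under stated assumptions: the 18kW and 30kW figures are the ones Clark and Zeiler give above, while the three labels, the middle "evaluate" band, and the function name are hypothetical and not either company's policy. As Clark notes, the real answer is very situational.

```python
# Per-rack thresholds taken from the quotes above.
AIR_RUNWAY_KW = 18        # Clark: air cooling gets challenging above this
LIQUID_INDICATOR_KW = 30  # Zeiler: strong indicator for liquid cooling above this


def cooling_hint(rack_kw: float) -> str:
    """Return a rough cooling hint for a rack of the given power density.

    The labels are illustrative only; cabinet count, proximity and site age
    all change the answer in practice.
    """
    if rack_kw > LIQUID_INDICATOR_KW:
        return "liquid"    # above the point where regular air systems overload
    if rack_kw > AIR_RUNWAY_KW:
        return "evaluate"  # air is running out of runway; situational
    return "air"


for kw in (10, 25, 50):
    print(f"{kw}kW rack -> {cooling_hint(kw)}")
```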

Consideration #3: Operating and capital expenses

Water vs air cooling is an opex vs capex argument. With water cooling there is greater upfront expense than for air cooling, thanks to its novel hardware. Since air cooling has been around longer, there is a greater choice and the products are mature.

But with water cooling, operating expenses over time are much lower, says Zeiler. “Pumping water requires a lot less electricity than running air. With liquid cooling, you can significantly reduce the number of fans so cost over time is substantially lower,” he says.

In a 42U cabinet filled with 2U rack-mounted servers, each server would hold four compute nodes, and each node would have three fans. That makes 12 fans per 2U server and, with 21 servers in the cabinet, 252 fans per cabinet. Each fan draws only a couple of watts, but that could add up to 500W for the fans in the cabinet. With liquid cooling, most of the fans can be removed outright, with just a small number left to do ambient cooling of the memory and other motherboard components, which makes for a substantial saving.
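
The fan count above follows from simple multiplication, sketched here so the numbers can be changed for a different cabinet layout. The server, node and fan counts are the article's; the 2W per fan is an assumption standing in for "a couple of watts", and the function name is made up for illustration.

```python
CABINET_HEIGHT_U = 42
SERVER_HEIGHT_U = 2
NODES_PER_SERVER = 4
FANS_PER_NODE = 3
WATTS_PER_FAN = 2.0  # assumed value for "a couple of watts"


def fan_budget(cabinet_u, server_u, nodes_per_server, fans_per_node, watts_per_fan):
    """Return (servers, fans, fan_watts) for a cabinet fully populated with servers."""
    servers = cabinet_u // server_u                      # whole servers that fit
    fans = servers * nodes_per_server * fans_per_node    # fans across the cabinet
    return servers, fans, fans * watts_per_fan


servers, fans, fan_watts = fan_budget(
    CABINET_HEIGHT_U, SERVER_HEIGHT_U, NODES_PER_SERVER, FANS_PER_NODE, WATTS_PER_FAN
)
print(f"{servers} servers, {fans} fans, about {fan_watts:.0f}W of fan power")
```

At 2W per fan the cabinet total comes to 504W, in line with the roughly 500W figure above.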

It’s much quieter, too, since the servers can get by with few or no fans. With hundreds of fans screaming in each cabinet, data centers can hit 80 decibels. That’s not quite the level of a Motörhead concert but enough that some data center workers are advised to wear ear plugs.

Consideration #4: Use cases

For basic enterprise apps, like database apps, ERP, CRM, and line of business (assuming you haven’t moved to Salesforce or a competitor yet), you really don’t need water cooling because the overhead is not all that severe. Even a high utilization application like data warehousing has gotten by just fine on air cooling.

But artificial intelligence and all of its branches - machine learning, deep learning, neural networks - do require liquid cooling, because the servers are extremely dense and many AI processors, particularly GPUs, run extremely hot.

However, how much of your data center is running AI applications? Probably not a lot, just a handful of racks or cabinets at most. So you can get by with a small amount of liquid cooling.

Korn spoke of one client, a pharmaceutical firm, that was doing AI simulations in genetic analysis. The workload was 50kW, so it used a chilled water rack blowing cold air.

Korn said the company may use a bigger rack as AI picks up steam but right now, “everyone talks AI but only a handful of apps have real world application.”