"When we started, the first slide in our pitch to startup accelerator Y Combinator was ‘What is a GPU?’,” Paperspace founder Dillon Erb recalls.

This is not a question likely to be posed by anyone in 2024, but when Paperspace was founded a decade ago, the cloud market was still in its infancy. While the hyperscalers had all launched, only Amazon’s AWS had GPUs on offer - it would take Microsoft Azure until 2016 to launch GPUs on its cloud, and Google until 2017.

“Amazon had an instance, but I think we were the first fully GPU cloud,” Erb says. “It’s obvious now, but we thought that GPUs would be a big part of cloud computing.”

As a provider of a dedicated GPU cloud, Paperspace was early on the scene. But with artificial intelligence now all the rage, the company is faced with having to prove that it wasn’t too early, and that it can keep up with relative newcomers like CoreWeave and Lambda.

To do so, it became a part of mid-tier cloud provider DigitalOcean (DO) in a $111 million deal in 2023. “They really pioneered having a developer focus, going after developers and startups in the lower mid-market,” Erb says. “Once you get really big, then there are bigger clouds to go to.”

This strategy has proved somewhat successful for DO, with a market cap of $3 billion at the time of writing - but it has also limited growth. By focusing on the smaller deals and companies, it has served as a stepping stone to the hyperscalers.

Despite launching its business way back in 2011, DO has mostly failed to ride the enterprise cloud migration wave that boosted hyperscaler revenues. After listing on the New York Stock Exchange in 2021 at a valuation of $4.48 billion and enjoying an initial year of investor support, it has struggled to convince the market that it can grow.

“The business they've grown has been really successful at targeting that scale of customer,” Erb counters. “We have bigger ambitions now because the GPU or AI revolution means that smaller companies can do a lot more. We are pushing to hopefully be able to grow with customers as they grow.”

He adds: “There's a massive growth opportunity here. The question everyone internally is asking is ‘how do we turn this from a company that's worth three or four billion to being worth like 10× that?’ And, of course, there are concerns that we’re going to have a supply-demand mismatch eventually as everyone builds out compute.

"But my strong feeling on that, just having watched this space, is that demand for compute is nearly infinite. We have invented these really cool AI softwares that can just eat it all up.”

Such bullish commentary is in line with much of the tech industry’s wild optimism for the future of AI, which has others worried that reality will eventually catch up.

"I'm in the bubble," Erb says with a laugh. "But demand for compute will continue to outstrip supply for the next few years. There's an insane amount of momentum around it and we feel like we're the center of this universe that a lot of people still don't realize exists."

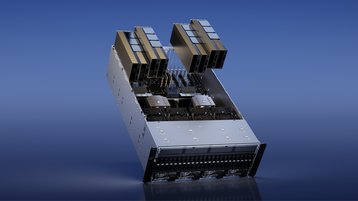

With the excitement around AI at fever pitch, it is hard to truly gauge how Paperspace and DO stack up. “Right now, the reality is that demand for compute is so high that, if you can rack and stack these pizza boxes, people will come and use them,” Erb says. “That will last for a little while.”

The GPU shortage is beginning to fade, although the data center industry still cannot build out and power data centers fast enough to keep up with demand, causing customers to compromise on who they do business with.

When that starts to improve and companies can be more discerning with their choice of provider, Erb is hopeful that developer experience will come to the fore.

“That’s what DO is best at, we have hundreds of thousands of customers. The whole thing has always been about simplicity, in the face of like massively complex hyperscalers.”

Similarly, he believes that other AI clouds will struggle to catch up with DO’s cloud software stack, even as they rush ahead with more GPU deployments. “What does a CoreWeave or a Lambda have to do in the future to become a cloud computing company?

"That's actually not easy to do - you need to be globally distributed, you need firewalls, you need load balancers, etc. I think DO is kind of a sleeper here. I think we're well positioned to become one of the better, if not the best in this market.”

Paperspace operates out of three data centers, adding to DO’s footprint of 15 data centers. It’s not yet clear how many of the sites will gain significant GPU capacity - “we're not trying to retrofit old data centers to become new ones” - but Erb hopes to lean on the company’s global reach to differentiate it from other AI clouds. “Everyone in the world is trying to do AI, not just the US.”

As with the rest of the industry, the pace of its expansion depends in part on how quickly it can secure the latest GPUs, and in what quantity. But with so many of its customers being smaller, there are still opportunities to be found in older gear.

“For our customer base, there's a lot of folks who say ‘I don't actually need the newest B100 or B200,’” Erb says. “They don’t need to train the models in four days, they’re okay doing it in two weeks for a quarter of the cost. We actually still have Maxwell-generation GPUs [first released in 2014] that are running in production. That said, we are investing heavily in the next generation.”

Echoing the struggle of many in the AI sector, Erb believes that different generations of Nvidia GPUs represent the full extent of fragmentation in AI compute. “I'm hopeful that there are more options [beyond Nvidia],” he says.

Paperspace was the first place that people could try out Graphcore’s IPU, but the chip designer teetered on the edge of bankruptcy for some time after failing to compete with Nvidia, and was purchased by SoftBank last week.

"Our premise early on was that we would be more agnostic and are working closely with Cerebras, SambaNova, and Groq," Erb says. "I've seen a bunch of transformer-specific hardware coming out. But I'm not sure if I would bet against the [Nvidia] machine."

For training, "it's Nvidia 99 percent of the time because you can't convince people to learn a new stack," Erb says. But the Paperspace founder sees an opportunity for new architectures: "As we move to inference, if you can swap out Groq behind the scenes, and its price-for-performance is better, I think you will see some downward pressure."

Operating with a global footprint and a broad compute stack, Erb hopes that DO will be able to exert its own downward pressure on the cloud industry. “DigitalOcean has just been not present in this ecosystem,” he says. “And so, if we can move quickly and bring the best of what we did and what they're doing, I think it's super compelling.”