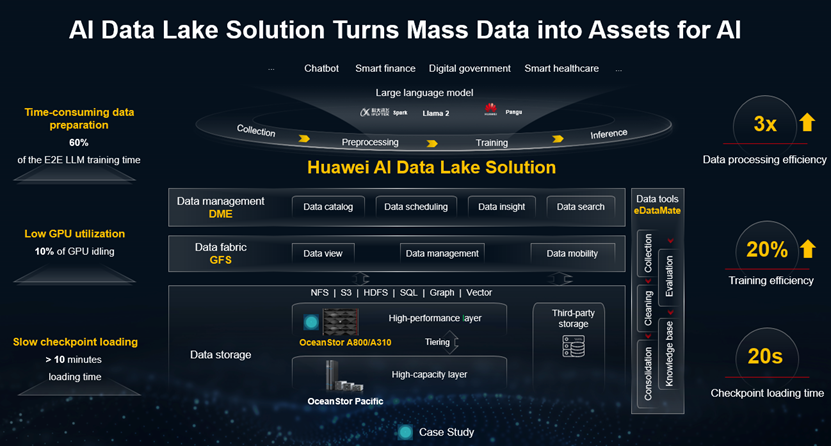

At this year’s Mobile World Congress (MWC) in Barcelona, Spain, Chinese ICT giant Huawei announced its AI Data Lake Solution, which it describes as one of its “solutions aimed at helping enterprises worldwide build leading data infrastructure in the AI era.”

There are a number of challenges that must be overcome when improving AI model quality.

Firstly, data preparation is a bottleneck, typically accounting for 60 percent of end-to-end model training time. Two factors hinder high-quality data preparation: the need to access diverse data sources, and the exabyte (EB) scale of the raw data in sample sets. These are tough nuts to crack in model training.

Secondly, GPUs are the most critical component for this type of training, and improving GPU utilization typically translates into significant cost reductions. In model training, storage latency often leaves GPUs idle, and this idle time can account for up to 10 percent of total GPU execution time.

Training itself is a complex process that can extend to several months in certain cases, and it is compounded by interruptions caused by low cluster computing efficiency, frequent faults, and slow troubleshooting. These problems prevent model training from resuming quickly, reduce success rates, and drive up training costs. To limit the impact of downtime or faults, checkpoints are saved periodically to record training state, which can later be reloaded. Even with this safeguard, loading a checkpoint can take well over ten minutes, which is too slow for LLM (large language model) training.
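The checkpoint mechanism described above can be illustrated with a minimal Python sketch. It is a toy, not how any particular training framework or Huawei product works: `pickle` stands in for a real checkpoint format, and the function names, the `step`/`loss` fields, and the checkpoint interval are all hypothetical.

```python
import os
import pickle
import tempfile

def save_checkpoint(path: str, state: dict) -> None:
    """Write to a temp file, then rename, so a crash mid-write
    does not corrupt the previous checkpoint."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "wb") as f:
        pickle.dump(state, f)
    os.replace(tmp_path, path)

def load_checkpoint(path: str, default: dict) -> dict:
    """Return the last saved state, or default if no checkpoint exists."""
    if not os.path.exists(path):
        return default
    with open(path, "rb") as f:
        return pickle.load(f)

def train(path: str, total_steps: int, checkpoint_every: int) -> dict:
    """Resume from the last checkpoint and run up to total_steps."""
    state = load_checkpoint(path, {"step": 0, "loss": None})
    for step in range(state["step"] + 1, total_steps + 1):
        # Stand-in for a real training step.
        state = {"step": step, "loss": 1.0 / step}
        if step % checkpoint_every == 0:
            save_checkpoint(path, state)
    return state

with tempfile.TemporaryDirectory() as workdir:
    ckpt = os.path.join(workdir, "ckpt.pkl")
    train(ckpt, total_steps=25, checkpoint_every=10)
    # After a simulated fault, only progress up to step 20 survives.
    print(load_checkpoint(ckpt, {})["step"])  # 20
```

The gap between the last checkpoint and the point of failure is lost work, and every resume pays the checkpoint load time, which is why both checkpoint frequency and storage read speed matter.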

DCD spoke to Michael Qiu, president of data storage, global marketing & solution sales dept at Huawei, to learn more about what’s on offer. We begin by asking Qiu about the choice to refer to an “AI Data Lake” solution when the offering extends to Data Warehouse and Data Lakehouse functionality.

He told us: “DataLake/LakeHouse are concepts of the Big Data era. Most of these solutions are part of a build with off-the-shelf software and general-purpose hardware, but as we enter the AI/LLM era, we need an AI-ready data infrastructure to support both Big Data and AI mixed workloads, where data infrastructure plays an important role. That’s why we planned the solution and named it the ‘AI Data Lake’, including both software and hardware layer innovations.”

Visible, manageable, available

Huawei’s “AI Data Lake” solution aims at ensuring visible, manageable, and available data, transforming mass data into valuable assets, and accelerating the entire AI service process. Here’s how it works:

A unified data lake storage resource pool is equipped with both a high-performance tier and a high-capacity tier. The high-capacity tier can be flexibly expanded to store mass data, while the high-performance tier provides 100 million IOPS and 10 terabytes per second of bandwidth, improving LLM training efficiency.

An intelligent data tiering feature is used to store the hot, warm, and cold data in appropriate storage tiers and ensure optimal total cost of ownership, or TCO.
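As a rough illustration of the idea, tiering can be thought of as classifying data by how recently it was accessed. The Python sketch below is an assumption-laden toy: the article does not describe Huawei's actual policy, and the seven-day and 90-day thresholds and the `choose_tier` function are hypothetical.

```python
from datetime import datetime, timedelta

# Hypothetical thresholds; real policies are product- and workload-specific.
HOT_WINDOW = timedelta(days=7)
WARM_WINDOW = timedelta(days=90)

def choose_tier(last_access: datetime, now: datetime) -> str:
    """Classify data as hot, warm, or cold by time since last access."""
    age = now - last_access
    if age <= HOT_WINDOW:
        return "hot"
    if age <= WARM_WINDOW:
        return "warm"
    return "cold"

now = datetime(2024, 3, 1)
print(choose_tier(datetime(2024, 2, 28), now))  # hot
print(choose_tier(datetime(2024, 1, 1), now))   # warm
print(choose_tier(datetime(2023, 6, 1), now))   # cold
```

A production system would likely weigh access frequency, file size, and cost per tier as well, but the principle is the same: keep frequently used data on fast media and move the rest to cheaper capacity.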

Data fabric is used to efficiently collect mass data across vendors, regions, and systems, achieving on-demand and secure data mobility. A data toolchain is provided to implement a one-stop automatic conversion of data into knowledge. This process involves data cleansing and enhancement, such as the automatic generation of high-quality QA pairs and the automatic conversion of data into a vector knowledge base.

All this comes together with a data management platform to implement global data asset management and control, including global data asset lists, data collection and analysis, and data sharing management.

The AI advantage

Qiu explains some of the innovations that the ‘baked-in’ artificial intelligence can bring to the efficiency of AI workloads:

“OceanStor A series products are a design which is dedicated to AI workloads for many reasons. They offer extremely high performance for mixed I/O models because, during AI processes like data collection, cleaning, training, and inference, the storage should provide high bandwidth for big files (checkpoint, videos, big images) and high IOPS for small files (text, pictures, audio). It also requires different I/O behavior, including sequential read/write and random read/write, which is quite different compared to the requirements for processing big data.”

He also emphasizes EB-level expansion capabilities with single namespace access: “LLM training clusters have been entering trillions of parameters and require EB-level volumes of original data. If we build AI data infrastructure across several regions, moving data across different storage clusters will cost over 60 percent of the entire AI training process. Additionally, single namespace access can make upper-layer AI workflows easier, with no need to manage the distribution of data in storage.”

In addition to these two points, the solution integrates a vector knowledge base and supports multiple access methods at the same time, including NFS, S3, and a parallel file system.

OceanStor at the storage core

At the heart of Huawei’s offering is the OceanStor A800, a new type of storage offering blisteringly fast speeds. With an innovative architecture that separates the data and control planes, OceanStor A800 allows data to be transferred directly from interface modules to disks, bypassing bottlenecks created by CPUs and memory.

This means OceanStor A800 can deliver 10 times higher performance than traditional storage and 24 million IOPS per controller enclosure. In addition, its training set loading is four times faster than its nearest competitors.

OceanFS, Huawei’s high-performance parallel file system, lets OceanStor A800 achieve a bandwidth of 500 GB/s per controller enclosure. This means that when training a trillion-parameter model, a 5 TB–level checkpoint read can be completed in just 10 seconds, and training can resume from checkpoints three times faster than with its nearest competitor.
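The 10-second figure follows from simple arithmetic: transfer time is data size divided by sustained bandwidth. A minimal Python sketch, assuming decimal units (1 TB = 1,000 GB) and that the quoted 500 GB/s is sustained for the whole read with no other overheads; the function name is illustrative only:

```python
def transfer_seconds(size_gb: float, bandwidth_gb_per_s: float) -> float:
    """Idealized time to move size_gb at a sustained bandwidth (no overheads)."""
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return size_gb / bandwidth_gb_per_s

# Figures quoted in the article: a 5 TB checkpoint at 500 GB/s.
checkpoint_gb = 5 * 1000
print(transfer_seconds(checkpoint_gb, 500))  # 10.0
```

Real-world times depend on how many enclosures serve the read, network limits, and how the checkpoint is sharded, so this is best read as a lower bound.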

OceanStor A800 supports flexible expansion to form a hypercluster. It can scale out to 512 controllers and supports EB-level capacity for LLM clusters with trillions – even surpassing ten trillion – parameters. It can also support capabilities such as embedded data fabric, vector engine, and data resilience. These capabilities can be loaded based on customer requirements.

Sustainability matters

Finally, with sustainability a word on everyone’s lips, we wanted to explore the power consumption credentials of Huawei’s AI Data Lake Solution. Qiu explains: “Power efficiency is an important factor for AI infrastructure and our product matches this requirement well.

“We adopt higher performance and capacity density in our AI data lake solutions, like 1TB/Watts (the energy used to read/write 1TB of data in 1s only costs 1 joule), 500GB/s and 24 million IOPS per 8U space for our performance tier, 64 disks in our 8U space for our capacity tier. This is bolstered by the fact that using our solution can reduce footprint, helping customers sharply reduce rack space and related cooling requirements.”

The Huawei AI Data Lake solution realizes the efficient collection of diversified mass data from multiple vendors, regions, and systems, using data fabric. On-demand and secure data mobility is complemented by globally visible and manageable data, to achieve three times higher cross-domain data scheduling efficiency.

The solution also provides up to 500 GB/s and 24 million IOPS for LLM training, which speeds up checkpoint writes, recovery, and initial loading. It cuts GPU idle time and improves GPU utilization, which together improve training efficiency by 20 percent on average.
