I flicked through the pages of the magazine to the article on dark matter as London City Airport disappeared beneath me. I was en route to CERN, one of the foremost particle physics research centers in the world, to find out more about how CERN’s big data challenges could shape the technologies of tomorrow.

About 12 hours later, faced with my own big data challenge after spending a day at the campus on the Franco-Swiss border, I realized how similar the big data challenge actually is to the search for dark matter. Dark matter is mysterious. It behaves differently to normal matter and is difficult to spot, but scientists believe it makes up about 80% of all matter in the universe. They are constantly upgrading experiments for greater power and detection to gain a better understanding of it.

CERN’s computing teams are doing a very similar thing when it comes to big data. For them data moves in ways that computing teams can rarely predict. Offering a service, their work is often governed by increasing scientific demand. Committed to being at the forefront of compute technology for research, they are also on their own path of discovery – to find better ways of understanding and dealing with rising amounts of information through new technologies, and delivering this in a way that allows big data, and henceforth dark matter, to finally take on a usable form.

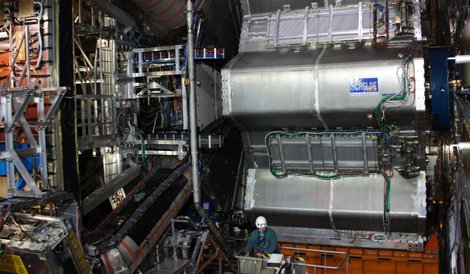

One of CERN’s biggest experiments, ATLAS, has been running since 2009. It is just one of four experiments sitting along the Large Hadron Collider (LHC), which circles 27km, 100m underground. ATLAS is in search of the Higgs boson – the particles thought to have been created by the ‘Big Bang’ that are now believed to act like a glue for the galaxies of the universe.

The ATLAS experiment at CERN

During my visit late in March, the place was abuzz with the news that a Higgs boson had been discovered. ATLAS had just been shut down for two years of upgrade work. Scientists, however, were still sifting through three years’ worth of data collected by the experiment. This is how they found a particle consistent with the makeup of a Higgs boson.

Just before my visit, CERN announced it had hit the 100 petabyte mark for the amount of data stored in its mass storage systems. Those working behind the scenes, managing CERN’s massive data center and network, say this will only grow once the LHC experiments are turned on again.

To handle this growing demand, CERN relies on relationships with a number of vendors formed through its openlab project, which has been designed to develop and test new technologies.

Technology companies use their relationship with CERN to test new innovations, the results of which are often fed back through commercial technology roadmaps.

CERN also offers advisory services for government bodies in the EU and research organizations around the world, and contributes heavily to a number of leading open source projects. This means that CERN is more than just a research center for nuclear physicists. It also plays an integral role in the development of the big data technologies and processes that could find their way into commercial development – from infrastructure to software.

The evolution of big data

To understand how CERN contributes to big data innovation it is important to understand big data at CERN. Scientists use the LHC to provide collisions at the highest energies ever created by man. These particles are manipulated and collected for observation at the experiment points. The 100 petabytes CERN stores is only a portion of what its IT systems have to work with before items are even earmarked for storage.

The sheer size of the ATLAS experiment is overwhelming – half that of Paris’ Notre Dame Cathedral. It stands nine stories high, has a 25m diameter, is 46m long and weighs 7,000 tonnes. Its aim is to prove Higgs Boson particles – which have a mass 126 times bigger than a proton. Physicists found the first Higgs by measuring head-on particle collisions created in the center of ATLAS. Both experiments measure these collisions using Calorimeters (for measuring energy) and the Muon System (which identifies and measures the momenta of Muons – unstable subatomic particles). Their movement tells CERN scientists a lot about what type of particles they are.

What a particle collission in ATLAS looks like

Many of these recorded instances – the LHC produces about 600 million collisions per second – produce invalid results. The success of the LHC experiments depends on what is a valid result, and there are many layers to determining this. Essentially, searching for the Higgs Boson is like playing a giant game of Guess Who.

100,000 CDs a second

If all the data that occurred in ATLAS was recorded it would fill up 100,000 CDs per second. But it isn’t. Data collected by ATLAS, which uses 3,000km of cables, is fed through to hardware set up on the experiment site, which filters 99% of this data. With information coming in at a fraction of a second, detailed analysis cannot be carried out. So this onsite hardware is equipped with algorithms that can detect ‘regions of interest’.

A tiny fraction of the cabling inside the ATLAS experiment

The head of CERN openlab Bob Jones says this is essentially pixelated activity, and scientists have created an algorithm embedded in the hardware that can show regions they will most likely want to delve into further. “Imagine if you had only a few pixels of an image but had to make a decision on whether you wanted to keep that full picture or not?” Jones says.

The hardware beside the the ATLAS experiment - the first point of sorting for experiment data

Once the onsite hardware has done its job, the data makes its way to the data center, where two or three layers of sophisticated algorithms written by scientists carry out further sorting before a final piece of software makes the final decision on whether that data should be stored or not. “That’s when we go from what we call the online world to the offline world – we now store it so it won’t be lost. Anything else is thrown away forever,” Jones says. “The question, then, is how many assets we have to process it, and how many times can we process it? This is when we start using the LHC computing grid and many other distributed environments.”

At ATLAS alone, more than 3,000 scientists carry out work using the data collected. Some are onsite, while others are in research institutions elsewhere around the world. CERN provides only 15% of the computing capacity required for the LHC. Networks, set up as a tiered infrastructure, connect CERN’s compute with other data centers around the world, which are continually processing information from the LHC for data experiments.

Fed by electrical power systems in Switzerland and France, CERN’s data center operations are the core – Tier 0. Its main computing center comprises three computing rooms – one 1,400 sq m, another 1,200 and a third, which contains about 90 racks running critical systems for the internal running of the particle physics facility. The data center is capable of switching 6Tbps and uses more than 600 HP switches. CERN also uses a small hosting setup in Geneva.

CERN’s main computing center

The LHC grid is owned by a number of different organizations and has been set up to ensure remote access for computers, software and data outside of CERN. All applications that run on the grid can run on any connected machine. The grid uses middleware to organize and integrate disparate computational resources and is used not only by CERN scientists working remotely but also by scientists from other connected organizations.

It also provides more compute power for CERN by integrating hundreds of thousands of data centers and computers and storage systems worldwide. “Essentially, we can bring into force 130 to 140 data centers from around the world using dedicated high-speed links to 11 major data centers,” Jones says, referring to these as Tier 1 facilities.

This network was put in place using GEANT, the pan-European data network for research and education, one of the largest research networks in the world. Tier 1 centers have stringent service requirements in terms of the network capacity and storage. “Because of them, we can ensure there is at least another copy of the data collected by CERN somewhere else in the world,” Jones says. These Tier 1 data centers then connect to Tier 2 data centers, which offer about 30 or 40 more facilities in each region that can serve CERN. But this tier structure is changing quickly, and this has greatly affected the direction in which Jones is taking the openlab team.

“Our network is becoming much less hierarchical and more democratic, in the sense that it doesn’t only have to go strictly Tier 0, Tier 1 and Tier 2 and beyond anymore. It can now be point-to-point, peer-to-peer, and that is having an impact on how we look at technology for the future,” Jones says.

The distributed computing environment run by CERN uses a distributed data management component, and a distributed production and analysis system called PANDA. But the enormous amount of data being processed by CERN has led to the HelixNebula, also known as the ‘Science Cloud’. This project is focused on the evaluation of cloud technologies, the integration of cloud resources using software and services, and the introduction of an internal cloud model.

But Jones believes the big data challenges will lead CERN to go a step further than this. “There is a lot of focus now on ensuring we can process the amount of data that will come from these experiments in the future,” Jones says. “We have upgraded our own data center and are moving to a sort of internal cloud-based model, and we are doing a lot of work with OpenStack as part of this.

“What we are looking at doing now is not only owning, operating and managing our own data center here, but looking at how CERN itself can use the data center as a service. We are thinking of procuring the computing services as cloud services from commercial providers to satisfy the scientific programme of the future in the most cost-effective manner.”

Jones says CERN is investigating ways of using the public cloud to do this, which could free up its own internal resources.

“But given the scale and style of the different services we will require for this, we don’t believe there is a single company at the moment that can offer us all the elements we need. It is also difficult to imagine in any procurement process that large public organizations will procure from a single source. So we will need to put in place a federated cloud service, where there are multiple suppliers working together in unison to offer the services we require. What we will need to do is build a new market, and we think this could serve the whole public sector, initially in Europe, but there is no reason this could not go further.”

Today, CERN provides cloud services internally using a private cloud. The cloud allows for easier reallocation of services through the use of virtualization. “It allows us to provision on a more elastic basis,” Jones says.

For a private company, such cloud projects could provide massive challenges around security. CERN, however, has a mandate for open access. This means the networking team has to tread a careful line between security and open policies.

CERN group leader for the IT department’s communications systems group, Jean-Michel Jouanigot, says the organization’s network is so open that its main concern is an incident where one of the commercial partners or governments it works with are attacked via the CERN network.

“We have a few gateways and WDB servers (a database system used for secure real-time archiving) and client connects here, but we cannot go much further. We have thousands of web servers at CERN and billions of files that people want to read, and our mandate is really to provide the service so they can do that,” Jouanigot says.

This is only one area where CERN, as a scientific organization, differs from those operating in the commercial market. The networking team has to run everything as a service for its scientists, who are constantly creating and updating applications used for their work. “We have some very real big data challenges with our network. Much of this for us has to do with the fact that we aren’t trained in the applications we run, so we don’t know what the optimum big data infrastructure is. Even if we did know, it could change tomorrow. We just provide the services on top of these applications,” Jouanigot says.

Read the second part of the CERN article, which appeared in FOCUS 29 – out this month - online here. You can also read the article in full in our digital edition here.