Oak Ridge National Laboratory’s (ORNL) Frontier exascale supercomputer is experiencing daily hardware failures during its testing phase.

First announced in 2019, Frontier is built on Cray’s Shasta architecture (now sold as HPE Cray EX) and Slingshot interconnect, and uses AMD Epyc CPUs and AMD Instinct GPUs. The system is expected to provide 1.5 exaflops of performance once it is fully operational and available to researchers.

Frontier is officially the world's fastest supercomputer and the first on the Top500 list to break the exascale barrier, though China is thought to be running several exascale systems that it has not submitted to the list.

InsideHPC reports that Frontier’s current problems center on the system’s stability when running highly demanding workloads. Some of the issues involve AMD’s Instinct GPU accelerators, which handle most of the system’s processing and are paired with AMD Epyc CPUs in the system’s blades.

InsideHPC has previously reported problems with Frontier’s HPE Cray Slingshot fabric that lasted from late last year into the spring of this year.

Justin Whitt, program director for the Oak Ridge Leadership Computing Facility (OLCF), said the issues are typical of those previously seen during the testing and tuning of supercomputers at the lab.

“We are working through issues in hardware and making sure that we understand (what they are) because you’re going to have failures at this scale,” he said. “Mean time between failure on a system this size is hours, it’s not days, so you need to make sure you understand what those failures are and that there’s no pattern to those failures that you need to be concerned with.”

“It’s mostly issues of scale coupled with the breadth of applications, so the issues we’re encountering mostly relate to running very, very large jobs using the entire system … and getting all the hardware to work in concert to do that,” Whitt added. “That’s kind of the final exam for supercomputers. It’s the hardest part to reach.”

A day-long run without a system failure “would be outstanding,” Whitt said. The goal, he explained, is “still hours” between failures, but longer than Frontier’s current interval. “We’re not super far off our goal,” he added. “The issues span lots of different categories, the GPUs are just one.”

“I don’t think that at this point that we have a lot of concern over the AMD products,” he said. “We’re dealing with a lot of the early-life kind of things we’ve seen with other machines that we’ve deployed, so it’s nothing too out of the ordinary.”

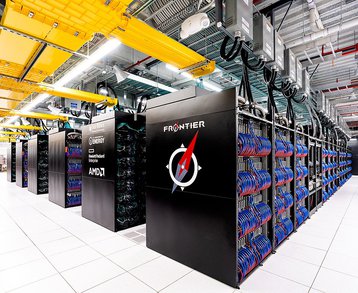

The supercomputer consists of 74 cabinets, each weighing 8,000 pounds. Together they house 9,408 HPE Cray EX nodes, each with a single AMD 'Trento' 7A53 Epyc CPU and four AMD Instinct MI250X GPUs, for a total of 37,632 GPUs. Across the system there are 8,730,112 cores. The supercomputer occupies 372 square meters (4,004 sq ft) and consumes 40 MW of power at peak.
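Whitt’s point that mean time between failure at this scale is measured in hours, not days, follows from simple arithmetic on the node count. The Python sketch below assumes node failures are independent and occur at a constant rate, so per-node failure rates add across the machine. The five-year per-node MTBF is a hypothetical figure chosen for illustration, not an ORNL or HPE number, and the function names are likewise illustrative.

```python
import math

HOURS_PER_YEAR = 24 * 365

# Node and GPU counts are taken from the article; the per-node MTBF is a
# hypothetical value chosen only to illustrate how failures scale.
NODES = 9_408
GPUS_PER_NODE = 4
ASSUMED_NODE_MTBF_HOURS = 5 * HOURS_PER_YEAR  # assumption: one failure per node every 5 years


def system_mtbf_hours(node_mtbf_hours: float, node_count: int) -> float:
    """Expected hours between failures anywhere in the machine.

    Assumes independent, constant-rate (exponential) node failures, so the
    per-node failure rates add and the system MTBF is node MTBF / node count.
    """
    if node_mtbf_hours <= 0 or node_count <= 0:
        raise ValueError("MTBF and node count must be positive")
    return node_mtbf_hours / node_count


def prob_no_failure(window_hours: float, mtbf_hours: float) -> float:
    """Probability that a run of window_hours sees no failure under the same
    exponential model: P = exp(-t / MTBF)."""
    return math.exp(-window_hours / mtbf_hours)


if __name__ == "__main__":
    mtbf = system_mtbf_hours(ASSUMED_NODE_MTBF_HOURS, NODES)
    print(f"Total GPUs: {NODES * GPUS_PER_NODE:,}")
    print(f"System MTBF: {mtbf:.1f} hours")
    print(f"Chance of a failure-free 24-hour run: {prob_no_failure(24, mtbf):.1%}")
```

With these assumed inputs, the system-level MTBF comes out under five hours, and the chance of a 24-hour run finishing without any node failure is below 1 percent, which shows why a failure-free day “would be outstanding.” Real failure rates differ by component and are not constant during a machine’s early life, so this is only an order-of-magnitude illustration.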

Frontier will be followed by Aurora, which began installation late last year. Built around Intel's Ponte Vecchio GPU, Aurora is expected to be capable of 2 exaflops. The AMD-powered El Capitan supercomputer, also expected to deliver 2 exaflops, is due next year.