Researchers at Oak Ridge National Laboratory have published a research paper detailing how they trained a one trillion parameter LLM on the Frontier supercomputer using only 3,072 of its 37,888 GPUs.

The team also detailed how it was able to train a 175 billion parameter LLM using only 1,024 of the supercomputer’s GPUs. A one trillion parameter LLM is roughly on the scale reported for OpenAI’s GPT-4 model, although OpenAI has not officially disclosed GPT-4's parameter count.

Training LLMs with billions of parameters poses a number of challenges, including the considerable compute and memory resources required. To address these, the researchers investigated distributed training techniques, namely tensor parallelism, pipeline parallelism and sharded data parallelism, and their effect on memory footprint, communication latency and GPU computational efficiency. They then used “hyperparameter tuning” to find the most efficient combination of these strategies for training models of this size.
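
To give a sense of the memory problem, the sketch below makes a back-of-the-envelope estimate of the training state for the two model sizes. It assumes the commonly cited accounting for mixed-precision training with the Adam optimizer (16 bytes per parameter, as described in Microsoft's ZeRO work) and ignores activations and communication buffers. It is an illustration only, not the ORNL team's method, and the function names are hypothetical.

```python
# Assumed per-parameter memory for mixed-precision training with Adam
# (ZeRO-style accounting; activations are NOT included):
#   2 bytes fp16 weights + 2 bytes fp16 gradients
#   + 4 bytes fp32 master weights + 4 bytes Adam momentum + 4 bytes Adam variance
BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4  # = 16


def training_state_bytes(num_params: int) -> int:
    """Weights, gradients and optimizer state for the whole model, in bytes."""
    return num_params * BYTES_PER_PARAM


def per_gpu_bytes_fully_sharded(num_params: int, num_gpus: int) -> float:
    """Per-GPU share if all training state is split evenly across GPUs."""
    return training_state_bytes(num_params) / num_gpus


if __name__ == "__main__":
    # GPU counts are the ones quoted in the article for each model.
    for name, params, gpus in [("175B", 175_000_000_000, 1024),
                               ("1T", 1_000_000_000_000, 3072)]:
        total_tb = training_state_bytes(params) / 1e12
        per_gpu_gb = per_gpu_bytes_fully_sharded(params, gpus) / 1e9
        print(f"{name}: {total_tb:.1f} TB total, "
              f"{per_gpu_gb:.1f} GB per GPU if fully sharded")
```

Under these assumptions the script prints about 2.8 TB in total (2.7 GB per GPU) for the 175 billion parameter model and 16.0 TB (5.2 GB per GPU) for the one trillion parameter model. No single GPU could hold the full state, which is why the state has to be split across devices; activations, which this estimate leaves out, add substantially to the real per-GPU requirement.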

The researchers reported GPU throughputs of 31.96 percent of peak for the one trillion parameter model and 36.14 percent for the 175bn parameter model. For both models, they also achieved 100 percent weak scaling efficiency, along with strong scaling efficiencies of 89 percent for the 175bn parameter model and 87 percent for the one trillion parameter model.
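
For readers unfamiliar with the terms, the sketch below encodes the standard textbook definitions of strong and weak scaling efficiency and of throughput as a fraction of peak. These definitions are assumed for illustration rather than taken from the paper, and the function names and timings in the example are hypothetical, not ORNL measurements.

```python
def strong_scaling_efficiency(base_gpus: int, base_time: float,
                              gpus: int, time: float) -> float:
    """Fixed total workload: ideally, time shrinks in proportion to base_gpus / gpus."""
    return (base_time * base_gpus) / (time * gpus)


def weak_scaling_efficiency(base_time: float, time: float) -> float:
    """Fixed workload per GPU: ideally, time stays constant as GPUs are added."""
    return base_time / time


def fraction_of_peak(achieved_flops: float, peak_flops: float) -> float:
    """Share of the hardware's theoretical peak FLOP/s actually sustained."""
    return achieved_flops / peak_flops


if __name__ == "__main__":
    # Hypothetical timings: a fixed job takes 100 s per step on 128 GPUs
    # and 14 s per step on 1,024 GPUs (ideal would be 12.5 s).
    print(f"{strong_scaling_efficiency(128, 100.0, 1024, 14.0):.2f}")  # 0.89
    # Hypothetical weak-scaling run: per-step time unchanged at larger scale.
    print(f"{weak_scaling_efficiency(100.0, 100.0):.2f}")  # 1.00
```

Strong scaling asks how much faster a fixed job finishes when more GPUs are added, while weak scaling asks whether per-step time holds steady when the job grows in proportion to the GPU count; 100 percent weak scaling efficiency means no slowdown at all as the run is scaled up.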

However, the research paper did not provide any information about how long it took to train the models using this method.

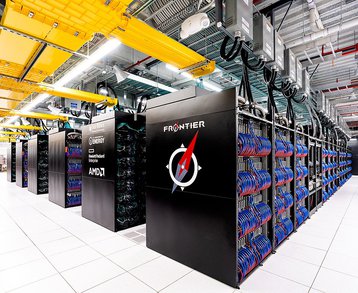

The Frontier supercomputer has an HPL (High-Performance Linpack) benchmark score of 1.194 exaflops, uses 64-core AMD Epyc processors clocked at 2GHz alongside AMD Instinct MI250X GPUs, and is based on the HPE Cray EX235a architecture. The system has a total of 8,699,904 combined GPU and CPU cores and uses HPE's Slingshot-11 interconnect for data transfer.

In November 2023, it retained the top spot in the Top500 list of the world's fastest supercomputers, a position it has held since June 2022.