British chip designer Graphcore has unveiled its second-generation Intelligence Processing Unit (IPU) platform for artificial intelligence workloads.

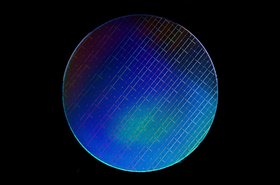

The IPU-Machine M2000 features four of the company's new 7nm Colossus Mk2 GC200 IPU processors, which Graphcore says deliver an eightfold performance jump over the Mk1. Each GC200 packs 59.4 billion transistors, more than the 54 billion in Nvidia's largest GPU, the A100.

Facing off against Nvidia

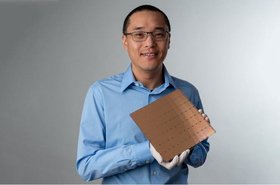

Each GC200 chip has 1,472 independent processor cores and 8,832 separate parallel threads, all supported by 900MB of in-processor RAM. Four of them together in a 1U M2000 deliver one petaflop of total AI compute, the company claims, at a price of $32,450.
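Dividing the quoted figures out per core and per chip makes them easier to compare. The sketch below uses only the numbers above; it assumes "MB" is decimal megabytes and treats the one-petaflop figure as Graphcore's own claim, without assuming which precision it refers to.

```python
# Figures quoted for one Colossus Mk2 GC200 and one IPU-Machine M2000
CORES_PER_CHIP = 1_472
THREADS_PER_CHIP = 8_832
SRAM_MB_PER_CHIP = 900          # in-processor RAM, assumed decimal MB
CHIPS_PER_M2000 = 4
M2000_PFLOPS = 1.0              # Graphcore's claimed total AI compute
M2000_PRICE_USD = 32_450

threads_per_core = THREADS_PER_CHIP // CORES_PER_CHIP          # hardware threads per core
sram_kb_per_core = SRAM_MB_PER_CHIP * 1_000 / CORES_PER_CHIP   # local memory per core, kB
tflops_per_chip = M2000_PFLOPS * 1_000 / CHIPS_PER_M2000       # claimed compute per chip
usd_per_tflops = M2000_PRICE_USD / (M2000_PFLOPS * 1_000)      # list price per claimed TFLOPS

print(threads_per_core)          # 6
print(round(sram_kb_per_core))   # 611
print(tflops_per_chip)           # 250.0
print(usd_per_tflops)            # 32.45
```

In other words, each core runs six threads against roughly 611kB of local memory, and each chip accounts for about 250 teraflops of the claimed total.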

Customers can start with one box connected to an existing CPU server and scale up to eight M2000s attached to that server. Separately, the company sells the IPU-POD64, which puts 16 M2000s in a 19-inch rack and can theoretically scale to 64,000 IPUs. All the chips are programmed through Graphcore's Poplar software stack.
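The scale-out tiers multiply out simply. This sketch uses only the counts quoted above (four IPUs per M2000, eight M2000s per server, 16 M2000s per POD64, a 64,000-IPU ceiling) and ignores any networking limits the article does not describe.

```python
IPUS_PER_M2000 = 4
M2000S_PER_SERVER = 8      # maximum attached to one CPU server, as quoted
M2000S_PER_POD64 = 16
MAX_IPUS = 64_000          # theoretical scale-out limit, as quoted

ipus_per_server = IPUS_PER_M2000 * M2000S_PER_SERVER   # largest single-server setup
ipus_per_pod = IPUS_PER_M2000 * M2000S_PER_POD64       # matches the "64" in the product name
pods_at_max = MAX_IPUS // ipus_per_pod                 # POD64 racks needed to reach the ceiling

print(ipus_per_server, ipus_per_pod, pods_at_max)      # 32 64 1000
```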

Graphcore has raised hundreds of millions of dollars from investors including Microsoft, BMW's i Ventures, Dell Technologies, Samsung Electronics, and Demis Hassabis, the co-founder of Google's DeepMind. Earlier this year, when it raised $150m, it was valued at $1.95 billion.

It's not clear how many actual customers the small company has, although Microsoft is its most high-profile: it was the first to offer IPUs on its cloud, in preview. Oxford Nanopore, EspresoMedia, the University of Oxford, Qwant, and Citadel all use the chips to some degree.

Citadel, a hedge fund, commissioned a detailed independent analysis of the Mk1 and M1000 machine, which has been published in full. "The IPU architecture and its compute paradigm were co-designed from the ground up specifically to tackle machine intelligence workloads," the researchers state. "For that reason, they incarnate certain design choices that depart radically from more common architectures like CPUs and GPUs, and might be less familiar to the reader."

Using their own benchmarks, they found that the IPU was "a clear winner in single precision against Nvidia’s V100 GPU (per-chip comparison)," but while it "delivers higher throughput, the GPU supports larger operands thanks to its higher device memory capacity. In our experiments, the largest square matrix operands fitting one IPU are 2,944×2,944, while on a 32-GB GPU they are roughly 50,000×50,000."

For mixed precision, "the comparison does not yield a clear winner and requires a more nuanced discussion... On both devices, specialized hardware (TensorCores and AMP units) supports matrix multiplication in mixed precision. Despite one IPU delivering roughly the same theoretical throughput as one GPU, in GEMM benchmarks the IPU yields lower performance than a V100 GPU: 58.9 TFlops/s vs. 90.0 TFlops/s, respectively. The IPU uses a lower fraction of its theoretical limit (47.3%) than the GPU (72.0%).
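The efficiency percentages imply each device's theoretical peak: dividing the measured GEMM rate by the fraction of peak achieved recovers it. The short sketch below applies that division to the quoted figures; the helper name is illustrative only.

```python
def implied_peak_tflops(measured_tflops: float, fraction_of_peak: float) -> float:
    """Back out a theoretical peak from a measured rate and its efficiency."""
    return measured_tflops / fraction_of_peak

ipu_peak = implied_peak_tflops(58.9, 0.473)   # IPU: 58.9 TFlops/s at 47.3%
gpu_peak = implied_peak_tflops(90.0, 0.720)   # V100: 90.0 TFlops/s at 72.0%

print(f"IPU ~{ipu_peak:.1f} TFLOPS, V100 ~{gpu_peak:.1f} TFLOPS")
# IPU ~124.5 TFLOPS, V100 ~125.0 TFLOPS
```

Both come out near 125 teraflops, consistent with the researchers' remark that the two chips offer roughly the same theoretical mixed-precision throughput; the gap lies in how much of it each achieves.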

"In mixed precision as well, the GPU supports larger operands. The largest square matrix operands fitting an IPU are 2,688×2,688, while on a 32-GB GPU they are roughly 72,000×72,000."
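A rough size check shows why the GPU limits land where they do. This sketch assumes a GEMM keeps three dense square matrices (two inputs and one output) in device memory, uses 4 bytes per element for single precision, and assumes 2 bytes per element for all three matrices in the mixed-precision case, which the quote does not specify. It ignores workspace and framework overhead, and uses decimal gigabytes.

```python
def gemm_footprint_gb(n: int, bytes_per_element: int, operands: int = 3) -> float:
    """Raw size of `operands` dense n x n matrices, in decimal gigabytes."""
    return operands * n * n * bytes_per_element / 1e9

fp32 = gemm_footprint_gb(50_000, 4)   # single precision: A, B, C in FP32
fp16 = gemm_footprint_gb(72_000, 2)   # mixed precision, assuming A, B, C all in FP16

print(f"FP32 50,000^2: {fp32:.1f} GB; FP16 72,000^2: {fp16:.1f} GB")
# FP32 50,000^2: 30.0 GB; FP16 72,000^2: 31.1 GB
```

Under these assumptions both cases sit just under the GPU's 32GB, which is why halving the element size lets the mixed-precision matrices grow by roughly a factor of the square root of two per side.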

For some workloads, such as pseudo-random number generation, the researchers found that the IPU had a significant throughput advantage, but that it produced lower-quality randomness. "The IPU’s performance advantage over the GPU doubles in a per-board comparison. We are not qualified to judge which platform the performance-quality trade-off favors."

J.P. Morgan is currently evaluating Graphcore’s chips for possible adoption.