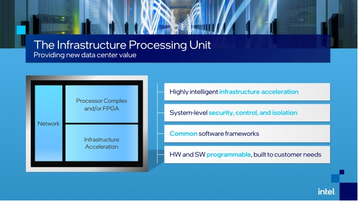

Intel has launched a new product line, the Infrastructure Processing Unit (IPU).

The IPU is the company's name for a Data Processing Unit (DPU), a programmable processor that offloads infrastructure-specific operations such as security, storage, and networking. Moving this work off the CPUs and GPUs in servers allows them to focus on core applications.

An FPGA-based IPU is already being deployed by "multiple cloud service providers," while an ASIC IPU is currently in internal testing. Future iterations of both IPUs are planned.

An even SmarterNIC

“The IPU is a new category of technologies and is one of the strategic pillars of our cloud strategy. It expands upon our SmartNIC capabilities and is designed to address the complexity and inefficiencies in the modern data center," Guido Appenzeller, CTO of the Intel Data Platforms Group, said.

"At Intel, we are dedicated to creating solutions and innovating alongside our customer and partners — the IPU exemplifies this collaboration.”

The company said that it developed the IPU in collaboration with hyperscale cloud partners.

“Since before 2015, Microsoft pioneered the use of reconfigurable SmartNICs across multiple Intel server generations to offload and accelerate networking and storage stacks through services like Azure Accelerated Networking," Andrew Putnam, principal Hardware Engineering manager at Microsoft, said.

"The SmartNIC enables us to free up processing cores, scale to much higher bandwidths and storage IOPS, add new capabilities after deployment, and provide predictable performance to our cloud customers. Intel has been our trusted partner since the beginning, and we are pleased to see Intel continue to promote a strong industry vision for the data center of the future with the infrastructure processing unit.”

Other IPU partners include Baidu, JD Cloud, and VMware. Amazon uses its own DPUs, 'Nitro,' which rely on multi-core Arm CPUs. Google is believed to be developing its own in-house SmartNIC.

The IPU, and the wider DPU market, come as the CPU loses its monopoly on data center workloads. GPUs, AI accelerators, and other processors are growing in popularity, as the server becomes filled with heterogeneous compute.

GPU giant Nvidia made its move into the DPU space last year, rebranding and upgrading Mellanox's SmartNIC line as DPUs, after acquiring the company for $6.9bn.

A study by Sandia National Lab and UC Santa Cruz found some value in deploying Nvidia BlueField-2 DPUs, but noted that there were still many limitations.

“While BlueField-2 provides a flexible means of processing data at the network’s edge, great care must be taken to not overwhelm the hardware,” the researchers said. “While the host can easily saturate the network link, the SmartNIC’s embedded processors may not have enough computing resources to sustain more than half the expected bandwidth when using kernel-space packet processing.”

The researchers concluded that, at least for their HPC needs, “the advantage of the BlueField-2 is small.” Instead, there could be value in using it for memory-intensive workloads, encryption/decryption, and compression/decompression.