Meta has released a report stating that during a 54-day training run of its 405 billion parameter Llama 3 model, more than half of the 419 unexpected interruptions recorded were caused by issues with GPUs or their onboard HBM3 memory.

For the model, which was trained on a cluster containing 16,384 Nvidia H100 80GB GPUs, this equates to an average of one failure every three hours.
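The three-hour figure follows directly from the two numbers in the report. As a rough check, and assuming the interruptions are simply averaged over the full 54 days of wall-clock time, the arithmetic can be sketched as follows (the function name is illustrative, not something from Meta's report):

```python
# Figures from the report as cited above: a 54-day run with
# 419 unexpected interruptions.
RUN_DAYS = 54
UNEXPECTED_INTERRUPTIONS = 419


def mean_hours_between_interruptions(run_days: float, interruptions: int) -> float:
    """Average wall-clock hours between interruptions over the whole run.

    Assumes interruptions are averaged evenly across the run, which is a
    simplification: real failures are unlikely to be evenly spaced.
    """
    if interruptions <= 0:
        raise ValueError("interruptions must be positive")
    return run_days * 24 / interruptions


hours = mean_hours_between_interruptions(RUN_DAYS, UNEXPECTED_INTERRUPTIONS)
print(f"{hours:.2f} hours between interruptions on average")  # prints 3.09
```

That works out to 1,296 hours divided by 419 interruptions, or just over three hours per interruption.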

According to a table included in the report, faulty GPUs caused 148 interruptions, accounting for 30.1 percent of all those recorded, while GPU HBM3 memory was responsible for 72 interruptions, or 17.2 percent.

GPU SRAM memory and GPU system processor issues accounted for 19 and 17 interruptions (4.5 and 4.1 percent), respectively, while network switch and cable problems caused 35, or 8.4 percent, of the recorded interruptions.

Only two CPU failures were recorded during the 54-day training period.

“The complexity and potential failure scenarios of 16K GPU training surpass those of much larger CPU clusters that we have operated. Moreover, the synchronous nature of training makes it less fault-tolerant – a single GPU failure may require a restart of the entire job,” Meta said in the report.

However, Meta said that despite these challenges, the Llama 3 team maintained more than 90 percent effective training time. It also noted that while the training run experienced a high number of failures, “significant manual intervention was only required three times during this period, with the rest of the issues handled by automation.”

Released in April, Llama 3 is an open source LLM that was pre-trained on more than 15 trillion tokens collected from publicly available sources.

Earlier this month, Meta launched Llama 3.1. This model family contains the new 405 billion parameter model to which the report relates, in addition to the 70 billion parameter and eight billion parameter variants that were made available with the original Llama 3 release.

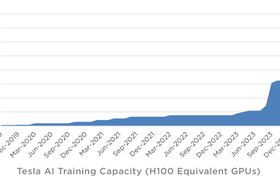

Currently, Meta has two data center-scale clusters, each with around 24,000 GPUs, that it has been using to train its Llama large language models.

By the end of 2024, the company said it is aiming to grow its infrastructure build-out to include 350,000 Nvidia H100s as part of a portfolio that will feature compute power equivalent to almost 600,000 H100s.