Amazon Web Services (AWS) has deployed its software on a satellite in orbit.

During its re:Invent 2022 conference this week, the company announced that it had successfully run a suite of AWS compute and machine learning (ML) software on an orbiting satellite.

AWS collaborated with D-Orbit and Unibap; D-Orbit was able to rapidly analyze large quantities of Earth Observation (EO) imagery data directly onboard its orbiting ION satellite using AWS software.

The company said the ten-month experiment, conducted in low Earth orbit (LEO), was designed to test faster and more efficient methods for customers to collect and analyze data directly on orbiting satellites using the cloud.

“Our customers want to securely process increasingly large amounts of satellite data with very low latency,” said Sergio Mucciarelli, vice president of commercial sales of D-Orbit. “This is something that is limited by using legacy methods, downlinking all data for processing on the ground. We believe in the drive towards edge computing, and that it can only be done with space-based infrastructure that is fit for purpose, giving customers a high degree of confidence that they can run their workloads and operations reliably in the harsh space operating environment.”

Max Peterson, AWS vice president, worldwide public sector, added: “Using AWS software to perform real-time data analysis onboard an orbiting satellite, and delivering that analysis directly to decision makers via the cloud, is a definite shift in existing approaches to space data management. It also helps push the boundaries of what we believe is possible for satellite operations.”

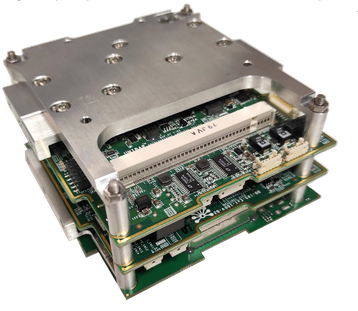

The team built a software prototype that included AWS machine learning models to analyze satellite imagery in real time, along with AWS IoT Greengrass, and integrated it into a processing payload built by Unibap. The Unibap processing payload was then integrated into D-Orbit’s ION SCV-4 satellite and launched into space.

On January 21, 2022, the team made its first successful contact with the payload and executed the first remote command from Earth to space. The team began running its experiments a few weeks later. Using AWS AI and ML services reportedly helped reduce the size of EO images by up to 42 percent, increasing processing speeds and enabling real-time inferences on-orbit.

“We want to help customers quickly turn raw satellite data into actionable information that can be used to disseminate alerts in seconds, enable onboard federated learning for autonomous information acquisition, and increase the value of data that is downlinked,” said Dr. Fredrik Bruhn, chief evangelist in digital transformation and co-founder of Unibap. “Providing users real-time access to AWS Edge services and capabilities on orbit will allow them to gain more timely insights and optimize how they use their satellite and ground resources.”

New EC2 instances with AWS’ Graviton3E and Intel’s Sapphire Rapids Xeon processors

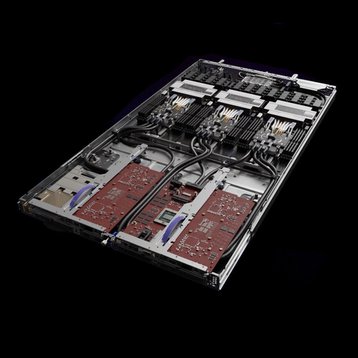

At re:Invent this week, AWS also announced a slew of new EC2 compute instances. These include new HPC and AI-tailored instances featuring AWS’ newest Inferentia2 and Graviton3E chips as well as Intel’s Sapphire Rapids Xeon processors. The company also announced its new Nitro 5 hardware hypervisor.

“Each generation of AWS-designed silicon—from Graviton to Trainium and Inferentia chips to Nitro Cards—offers increasing levels of performance, lower cost, and power efficiency for a diverse range of customer workloads,” said David Brown, vice president of Amazon EC2 at AWS. “That consistent delivery, combined with our customers’ abilities to achieve superior price performance using AWS silicon, drives our continued innovation. The Amazon EC2 instances we’re introducing today offer significant improvements for HPC, network-intensive, and ML inference workloads, giving customers even more instances to choose from to meet their specific needs.”

EC2 Hpc6id; new instances for high-performance computing, powered by 64 cores of Intel Xeon Scalable processors (Ice Lake) that run at frequencies up to 3.5 GHz, with 1,024 GiB of memory, 15.2 TB of local SSD storage, and 200 Gbps of Elastic Fabric Adapter (EFA) network bandwidth.

AWS said these instances are optimized to efficiently run memory bandwidth-bound, data-intensive high-performance computing (HPC) workloads, such as finite element analysis and seismic reservoir simulations.

“We heard feedback from customers asking us to deliver more options to support their most intensive workloads with higher per-vCPU compute performance as well as larger memory and local disk storage to reduce job completion time for data-intensive workloads like Finite Element Analysis (FEA) and seismic processing,” said Channy Yun, principal developer advocate for AWS. “Today, we announce the general availability of Amazon EC2 Hpc6id instances, a new instance type that is purpose-built for tightly coupled HPC workloads.”

EC2 Hpc7g; powered by AWS Graviton3E processors, which the company said offer up to 35 percent higher vector instruction processing performance than the Graviton3. Hpc7g instances will be available in multiple sizes with up to 64 vCPUs and 128 GiB of memory.

“They are designed to give you the best price/performance for tightly coupled compute-intensive HPC and distributed computing workloads, and deliver 200 Gbps of dedicated network bandwidth that is optimized for traffic between instances in the same VPC,” said AWS chief evangelist Jeff Barr.

EC2 Inf2; available in preview, these instances are powered by up to 12 of AWS’ own Inferentia2 chips, offering up to 2.3 petaflops of deep learning performance, up to 384 GB of accelerator memory with 9.8 TB/s bandwidth, and NeuronLink, an intra-instance ultra-high-speed, nonblocking interconnect.

AWS said these instances are tailored toward natural language understanding, translation, video and image generation, speech recognition, personalization, and more.

EC2 R7iz; available in preview, these instances are the first EC2 instances powered by 4th generation Intel Xeon Scalable processors (code-named Sapphire Rapids) with an all-core turbo frequency of up to 3.9 GHz. R7iz instances are available in various sizes, including two bare metal sizes, with up to 128 vCPUs and up to 1,024 GiB of memory.

AWS said these instances have the highest performance per vCPU among x86-based EC2 instances and can deliver up to 20 percent higher performance than z1d instances. These instances are reportedly ideal for front-end Electronic Design Automation (EDA), relational databases with high per-core licensing fees, financial, actuarial, data analytics simulations, and other workloads requiring a combination of high compute performance and high memory footprint.

EC2 C6in; powered by Intel Xeon Scalable processors with an all-core turbo frequency of up to 3.5 GHz, these x86-based Amazon EC2 compute-optimized instances offer up to 200 Gbps network bandwidth. This offering is available in nine different instance sizes with up to 128 vCPUs and 256 GiB of memory, and can deliver up to 80 Gbps of Amazon Elastic Block Store (Amazon EBS) bandwidth and up to 350K input/output operations per second (IOPS).

AWS said C6in can be used to scale the performance of applications such as network virtual appliances (firewalls, virtual routers, load balancers), Telco 5G User Plane Function (UPF), data analytics, high-performance computing (HPC), and CPU-based AI/ML workloads.

EC2 M6in, M6idn, R6in, and R6idn; now in general availability, these instances are powered by Intel Xeon Scalable processors with an all-core turbo frequency of up to 3.5 GHz. These x86-based general-purpose and memory-optimized instances offer up to 200 Gbps of network bandwidth.

Both the M6 and R6 instances are available in nine different instance sizes; M6 up to 128 vCPUs and 512 GiB of memory, and R6 up to 128 vCPUs and 1,024 GiB of memory. They also deliver up to 80 Gbps of Amazon Elastic Block Store (EBS) bandwidth and up to 350K IOPS. M6idn and R6idn instances are equipped with up to 7.6 TB of local NVMe-based solid state disk (SSD).

EC2 C7gn; available in preview, these instances are powered by the latest generation AWS Graviton3 processors. The C7gn instances will be available in multiple sizes with up to 64 vCPUs and 128 GiB of memory. C7gn instances offer up to 200 Gbps network bandwidth and up to 50 percent higher packet-processing performance compared to the previous generation’s Graviton2-based C6gn instances.

AWS said workload examples include network virtual appliances, data analytics, and CPU-based artificial intelligence and machine learning (AI/ML) inference.

Private 5G service expands; earlier this month, AWS said its AWS Private 5G service, which currently doesn’t support 5G, now includes support for multiple radio units.

When it entered general availability in August, the service did not support multiple small-cell radio units; each network was able to support one radio unit that could provide up to 150 Mbps of throughput spread across up to 100 SIMs. Now, the company said, customers can order additional small-cell radio units to extend coverage or additional SIMs to connect more devices.