Ok, I get it, AI is here. And it is everywhere. As we continue to see in every form of media, AI is here to stay. And grow. Whether for good or bad is not what this conversation is about – I will leave those discussions to the pundits, the technologists, the futurists, even the creators of all the ‘deepfake’ content.

This conversation is about bringing AI out of the virtual world and into the real world, and about the challenges we mere mortals will face as we figure out just how much we can do in this brave new world (with apologies to Aldous Huxley).

However, to reap all the benefits of AI, both real and potential, those of us in the trenches of the data center space are going to have to adapt to support the exponential increase in demand for space, power, and cooling.

That holds whether the workload is cryptocurrency trading, next-level CGI and cutting-edge graphics, or pushing the boundaries of science (such as the James Webb Space Telescope discovering the JADES-GS-z13-0 galaxy).

There continue to be plenty of articles, podcasts, presentations at seminars and conferences, research reports, and blogs all looking at the same thing: The growth of hyperscale and the future of AI. The numbers are there, the potential – enormous. Everyone is ready to unlock the vast possibilities AI seemingly offers. The future is now.

But…

All this stuff “lives” somewhere, and that somewhere is the IT space: The hyperscale data center, the plain old data center, the Edge data center, the micro data center, with the lowest common denominator – the data center. It does not matter what it is called; these are the spaces where the servers, switches, storage, cables, patch panels, etc. are installed, managed, and serviced. Aka, IT Infrastructure.

How IT Infrastructure must change will be critical. If we cannot change, we become the bottleneck on the AI information superhighway – a significantly bigger, faster, and more complex system than the plain old Internet we use today.

The three pillars of the hyperconnected planet

Space, power, and cooling – these are the core products provided by and in the IT space. Whatever you call the space, every installation must provide these three vital services.

Space:

This should be the easiest provision; just build more buildings. Until you cannot. We are beginning to see groundswell/grassroots opposition to building more data centers.

These are the same attitudes we already see against building more 500,000 sq ft warehouses in residential communities, in urban areas, and next to or even in historical sites. I see the signs on lawns, along the sides of roads, and in the media.

It will become more challenging to build the facilities we must have to support the demands of AI. But “build we must.” And as these new buildings are erected, how they support power and cooling will have to be completely re-evaluated and deployed. Which leads to:

Power:

Ok, we have the building, now all we have to do is get electricity. No problem. Except we are running out of power. Or running out of transmission lines to deliver the power to these new spaces. Or, as with Space, facing increasing local resistance to building new transmission lines, substations, wind farms, or solar arrays.

I certainly would not want high-tension lines running through my backyard, down my street, or through the local parks. I am not sure I want to see a 164-meter diameter turbine rotor out my front window.

I am not against renewable energy; I strongly support it, just not too close.

And then there is the “nuclear option.” The very last thing I want to hear about is using small nuclear reactors for data centers. Most people do not even want a cell tower in their backyard, next to their kids' schools. But a small nuke, yeah, that’s fine. I am sure everyone in Northern Virginia, Silicon Valley, even Secaucus, New Jersey will be fine with popping in a nuclear power plant.

But why the demand for all this power? Because AI installations are pushing power demand toward 100kW per enclosure footprint. Not too long ago, 50kW per footprint was considered ‘high density’. No more. These high-density systems are not coming. They are here. Which then leads to:

Cooling:

Well, not really cooling. Call it what it really is: Climate control and heat removal. That is what is being done – maintaining a stable climate in the IT space and sending all that heat out of the building.

This is the service that is the biggest concern. Regardless of the issues with space and power described above, somehow, we always manage to find both. And once found, the tremendous thermal loads generated by all the AI appliances and applications must be dealt with. Plain old ‘high density’ installations are passé, and supporting super, ultra, hyper (pick your superlative) densities is now the norm.

We know the systems: immersion, direct-to-chip, row-based water cooling, and fan walls. Yet, it is not as simple as installing direct liquid cooling (DLC) systems or throwing a high-capacity row-based system in between these high-density footprints. You need piping, manifolds, or similar distribution hardware plus chillers, cooling towers, etc.

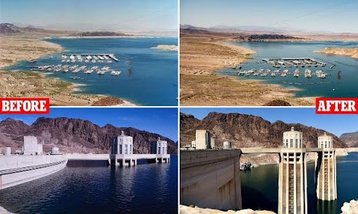

Oh, and water. Which, along with everything else, we are running out of (see Mexico City).

(Full disclosure – many North American lakes have seen significant increases in water levels in 2023-24, but still remain well below normal or historic levels.)

Yet, remove heat we must. 100kW (and perhaps higher) footprint loads are here, now, and will continue to be deployed. Lower-density deployments that support AI will also continue to grow. There is just going to be that much more heat to manage.
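To put a rough number on "that much more heat," here is a back-of-the-envelope sketch using the standard sensible-heat relation (heat = mass flow × specific heat × temperature rise). The fluid properties are textbook approximations, and the 10 K water rise and 12 K air rise are illustrative assumptions of mine, not design values for any particular product or site.

```python
# Rough estimate of the coolant flow needed to carry away a given heat load.
# Uses Q = m_dot * c_p * delta_T. All property values are approximations.

WATER_CP_J_PER_KG_K = 4186.0   # specific heat of water (approximate)
WATER_DENSITY_KG_M3 = 997.0    # density of water near room temperature
AIR_CP_J_PER_KG_K = 1005.0     # specific heat of air (approximate)
AIR_DENSITY_KG_M3 = 1.2        # density of air near sea level (approximate)


def volumetric_flow_m3_per_s(heat_kw, delta_t_k, cp_j_per_kg_k, density_kg_m3):
    """Volume flow (m^3/s) needed to absorb heat_kw with a delta_t_k rise."""
    mass_flow_kg_s = (heat_kw * 1000.0) / (cp_j_per_kg_k * delta_t_k)
    return mass_flow_kg_s / density_kg_m3


# Assumed scenario: one 100 kW footprint.
water_l_per_min = volumetric_flow_m3_per_s(
    100.0, 10.0, WATER_CP_J_PER_KG_K, WATER_DENSITY_KG_M3
) * 1000.0 * 60.0
air_m3_per_s = volumetric_flow_m3_per_s(
    100.0, 12.0, AIR_CP_J_PER_KG_K, AIR_DENSITY_KG_M3
)

print(f"Water: about {water_l_per_min:.0f} L/min")
print(f"Air:   about {air_m3_per_s:.1f} m^3/s")
```

Under these assumptions a single 100kW footprint needs on the order of 140 liters of water per minute, or several cubic meters of air per second. The gap between those two numbers is the simple reason liquid keeps coming up in these conversations.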

So, what can we do?

First and foremost, stop trying to pretend this will go away; it will not. The data center industry must accept the inevitable, and so must everyone else.

Plan, plan, plan. The demands AI will place on the data center are clear. More of everything will be needed. Plan for how a new build or retrofit/upgrade to an existing space can best be designed to support AI.

Renewables – wind, solar, geothermal, and tidal power sources must be considered. And not just because a company has a “Green Mission Statement” that promises to use energy from renewable sources and reduce carbon footprint by X amount in the future. Use them now and demand more from them. This is one area where the IT industry can really take the lead and show everyone else how to do it.

Throw out the old. We cannot use legacy designs. Enclosure dimensions must be re-evaluated, including depths beyond 48”/1200mm. Power delivery to each footprint must be rethought as well, since 100kW loads will need more than two PDUs per enclosure. So must the allocation of floor/white space: it will no longer be acceptable to simply “add a few more” racks or cabinets. And stop using cold air below 70°F/21°C; warm it up, save some energy, and expand heat removal capacity.
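For anyone who wants to see why two PDUs will not cut it, here is a minimal sketch of the arithmetic. The PDU rating is my own illustrative assumption (a 415V three-phase, 60A unit, derated to 80% for continuous load, with power factor taken as 1.0); substitute the figures for your own hardware and electrical code.

```python
import math


def pdu_capacity_kw(line_voltage_v, breaker_amps, derating=0.8, power_factor=1.0):
    """Usable power of one three-phase PDU in kW (sqrt(3) * V * I, derated)."""
    watts = math.sqrt(3) * line_voltage_v * breaker_amps * derating * power_factor
    return watts / 1000.0


def pdus_required(load_kw, capacity_kw, redundant_feeds=True):
    """PDUs needed to carry load_kw; doubled when A and B feeds must each carry it."""
    per_feed = math.ceil(load_kw / capacity_kw)
    return per_feed * 2 if redundant_feeds else per_feed


# Hypothetical PDU: 415 V three-phase, 60 A, 80% continuous derating.
capacity = pdu_capacity_kw(415, 60)

print(f"Usable capacity per PDU: {capacity:.1f} kW")
print("PDUs for 100 kW, single feed:", pdus_required(100, capacity, redundant_feeds=False))
print("PDUs for 100 kW, A/B feeds:  ", pdus_required(100, capacity))
```

With those assumed ratings each PDU delivers roughly 34.5kW, so a 100kW footprint needs three just to carry the load and six if both feeds must carry it on their own.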

The wrap-up

We have come so far in a very short time, and can certainly learn and build on all this experience. AI will present new infrastructure challenges (along with everything else). The industry not only must adapt, but it will adapt if we move beyond the hype and confront the issues directly. And go along for the ride.
