Data centers have always been highly stable, resolute environments. These facilities are made to brace against shifts that could disturb IT – but the rabid pursuit of AI creates a fundamental disconnect between what data centers have been and what they must become. Today’s AI applications and their dynamic requirements are forcibly reintroducing change into the data center environment.

Data centers – until now

Stable base loads have long been the cornerstone of data center operation. Even as more IT filters in, power loads creep up slowly and evenly, allowing relatively invariable and stable power, space, and cooling delivery 24/7/365.

It makes sense, then, that our power and cooling systems have been designed and configured to support continuous, even consumption.

By nature, AI is breaking the mold we’ve created. When jobs are launched, power levels change radically and spike sharply. A 600-700kW load can become over a megawatt in an instant, staying at that level of consumption for days before dropping back down just as suddenly.
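To put rough numbers on that jump, here is a minimal sketch of the arithmetic. It assumes a peak of exactly 1,000kW as a lower bound (the text only says "over a megawatt"), and the function name `step_ratio` is purely illustrative.

```python
def step_ratio(base_kw: float, peak_kw: float) -> float:
    """Return how many times larger the peak load is than the base load."""
    if base_kw <= 0:
        raise ValueError("base_kw must be positive")
    return peak_kw / base_kw


# Assumption: 1,000 kW stands in for "over a megawatt", so these are lower bounds.
PEAK_KW = 1000.0

for base_kw in (600.0, 700.0):
    ratio = step_ratio(base_kw, PEAK_KW)
    added_kw = PEAK_KW - base_kw
    print(f"{base_kw:.0f} kW -> {PEAK_KW:.0f} kW: x{ratio:.2f} (+{added_kw:.0f} kW)")
```

Even at this lower bound, the power and cooling chain has to absorb a step of several hundred kilowatts at once, then hold it for days.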

Of course, this shifts cooling and heat load, making AI a multi-faceted challenge. Goldman Sachs reports that 47GW of incremental power generation capacity will be required to support US data center power demand growth through 2030, and demands are only getting higher. From a basic framework perspective, this is likely the single biggest item on any AI data center’s docket.

However, power density is also a key AI challenge. AI customers already want to deploy initially at 50-60kW a cabinet – but within six months, they’ll want 100kW per rack. This rate of evolution pushes significantly against those stable data center environments we’ve long depended on. Building this level of density (and catering to the heat load) is in itself very tricky – but achieving the elasticity needed for AI is critical. Today’s data centers simply aren’t built for it.

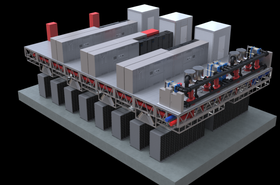

This is where we find ourselves today as an industry. In the face of these challenges, operators are scrambling to retrofit data halls (or establish new builds) to support the liquid cooling solutions and dense infrastructure needed today and tomorrow.

Parsing the problem

For the better part of a decade, we’ve all sat in the 5-10kW cabinet range. Some cabinets might have been labeled an ‘HPC cabinet’ and those might have drawn 50kW. Still, these instances would often be specialized and isolated from the larger environment so heat could be contained. These HPC deployments have, until recently, been the exception, not the rule.

Now, all of your data center racks will be at the level of those once-specialized HPC racks. Suddenly, the operations and plans supporting them are not so straightforward.
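A quick sketch of what that shift means for a fixed power budget. The 1,000kW hall budget is a hypothetical figure chosen for round numbers, and `cabinets_supported` is an illustrative helper, not an industry formula. The densities are the ones mentioned above.

```python
def cabinets_supported(power_budget_kw: int, kw_per_cabinet: int) -> int:
    """Return how many whole cabinets a fixed power budget can feed."""
    if kw_per_cabinet <= 0:
        raise ValueError("kw_per_cabinet must be positive")
    return power_budget_kw // kw_per_cabinet


# Hypothetical data hall power budget, chosen only for illustration.
HALL_BUDGET_KW = 1000

# 5-10 kW is the long-standing range; 50 kW is the once-specialized HPC level.
for density_kw in (5, 10, 50):
    count = cabinets_supported(HALL_BUDGET_KW, density_kw)
    print(f"{density_kw} kW per cabinet -> {count} cabinets")
```

Under these assumptions, the same power that once fed a hall of 100 to 200 cabinets is consumed by about 20, so the heat that used to be spread across a room is concentrated in a single row or two.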

As standard densities rise significantly, the main culprits either holding data centers back or propelling them toward AI-readiness are power chains and cooling system capacities. Not to mention, with AI deployments, every nanosecond matters for cluster connectivity.

Cabinets need to be densely packed to assist with compute performance in the data hall as well as within each cabinet. We no longer have the luxury of spreading the load or segmenting cabinets. It’s in these ways that creating true AI data centers isn’t just comprehensive – it’s complex.

On the cooling side, data centers have been traditionally designed assuming a maximum kW level per cabinet and volume of necessary air exchange that we have already outstripped. We’re not playing in the ‘huge data halls with sparse cabinetry’ field anymore, making traditional air cooling designs totally inadequate for AI’s dense, grouped clusters.

Of course, none of this is news to most operators. Many are already actively pursuing change. Unfortunately, the problem many are still not aware of (or maybe not accepting) is the level of truly radical change required to not only meet AI where it is now, but preempt where it needs to go.

Why even purpose-built solutions aren’t working

Some colocation providers assume they can reinvent themselves for AI by simply ordering a liquid cooling system and sticking it at the end of the row. Sadly, this is like a band-aid over a bullet hole.

There are a number of reasons why even new infrastructure investments, retrofits, and additions aren’t helping make the AI cut. First, there’s the challenge we know too well: Supply chain issues. The lead times on liquid cooling systems are so long that between ordering and implementation, data centers are even further behind the curve than when they started. AI growth is outpacing the speed of deployment.

Plus, AI demand for liquid cooling tech is sky-high: Vertiv reported organic orders up 60 percent in Q1 2024 compared to the first quarter of 2023. If a data center provider has to bring liquid to the rack, they’re going to be losing ground every day, waiting in line behind hyperscalers.

Deploying with enough redundancy and resilience is the other side of this coin. Once you have the systems needed, they can’t just be plunked in anywhere. Some operators’ first reaction is to place an in-row chiller with a water distribution loop. But what happens if a component of the loop goes down? Suddenly, a data center has an unacceptable single point of failure. Delivering concurrent maintainability and resilience even if a component or pipe breaks is paramount, but redundancy is more complicated now than ever.

Data centers must now contend with pressurized water flowing all around IT equipment. If water is going to the rack, operators must have flawless leak prevention and emergency planning. What does the recovery process look like, and what will the downstream impact be? Even in these initial questions, we see that being prepared for AI is a lot more complicated, holistic, and forward-thinking than just getting systems into place.

At AI’s necessary speed of transformation, the opportunity cost of being conscientious can push many operators to skip steps. But this doesn’t make an AI data center; it creates a liability.

Fortunately, the market for AI data center solutions is maturing. For instance, data hall water cooling systems that run under vacuum help on the resilience and recovery side, preventing shutdowns if problems occur. However, many operators aren’t even thinking about AI preparedness at this level of necessary detail and foresight – and they aren’t challenging the long-standing assumptions they should be.

Changing our paradigm

Underneath these challenges, AI’s highly dynamic workloads and increasing densities are only making the race faster and the pressure more substantial. In truth, AI is forcing the industry to build not only on reality but on speculation.

From a capital investment perspective, today’s data centers must be viable for the next couple of decades. So, how scalable should (or can) they be? How many nodes, packed into how many clusters, grouped into how dense an area, can we achieve within today’s market and technological thresholds? Ultimately, ongoing retrofits have to be made easy to leave room for dynamic change – and that’s still not a comfortable reality for data centers.

Operational aspects like disaster recovery only compound the strain presented by real and forecasted requirements. With all these considerations at hand, operators are forced to make quantum leaps forward without missing any detail. It’s like trying to hit a bullseye while driving by the target at Indy 500 speeds.

In this challenge, modularity has become an asset – but it’s not enough. Beyond an operational or even physical change, we need a philosophical change in how we view the problem and solution.

Until today, we’ve operated from the perspective of having X amount of fixed space into which we can bring Y amount of cooling and power capacity. AI requires us to turn this problem on its head.

We can agree it’s much better to waste space than to strand power or cooling, so our long-standing build philosophy is backward. Let’s flip the equation: If an operator needs to make sure a design can handle five times their current power or cooling density, how does the initial deployment space need to suit that? Furthermore, how can the space allow equipment to be easily pulled and replaced without sacrificing the building’s integrity in the process?
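The flipped equation can be sketched as simple arithmetic. This is only an illustration under stated assumptions: the starting point of 20 cabinets at 50kW is hypothetical, and `design_envelope` is an invented helper name. Only the five-times factor comes from the text.

```python
def design_envelope(cabinets: int, kw_per_cabinet: float, growth_factor: float = 5.0):
    """Return (day-one load, design load, headroom) in kW for one deployment space.

    The space is sized around the future design load, not the day-one load.
    """
    if cabinets <= 0 or kw_per_cabinet <= 0 or growth_factor < 1:
        raise ValueError("inputs must be positive and growth_factor >= 1")
    day_one_kw = cabinets * kw_per_cabinet
    design_kw = day_one_kw * growth_factor
    return day_one_kw, design_kw, design_kw - day_one_kw


# Hypothetical deployment: 20 cabinets at 50 kW each, designed for 5x density.
day_one_kw, design_kw, headroom_kw = design_envelope(20, 50.0)
print(f"Day one: {day_one_kw:.0f} kW")
print(f"Design target: {design_kw:.0f} kW")
print(f"Headroom the space must leave room for: {headroom_kw:.0f} kW")
```

In this framing, the headroom figure drives the questions about floor area, pipe routes, and access paths for swapping equipment, rather than the day-one load.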

Now, we’re beginning to see how we can move away from the idea of a fixed, sealed building while making IT operations even more advanced. This allows data centers to preserve initial capital investments and make capital turnover much more viable and incremental to support profitability in the face of change.

Don’t worry – it’s not just you

Failing to recognize the sheer scope of AI’s disruption is holding many operators back. But as an industry, we have to share the blame: We’re still not designing full-system solutions that help operators solve these problems.

From OEMs to semiconductor companies and beyond, maintaining profitability by preserving product commonality is a hurdle we all must agree to overcome – but that’s a discussion for another day.

Ultimately, the right solutions and partners can make a world of difference in navigating a complex or stubborn market. When looking for the liquid cooling systems and holistic solutions necessary for AI, focus on readily expandable systems. When sourcing solutions, look for modularity, resilience, flexibility, and scalability – and don’t trust providers who claim to know the future completely.

The ability to comprehensively adapt while keeping IT operationally safe and stable will be any data center’s bread and butter for the foreseeable future. This is the point from which complex AI negotiations in the data center environment should begin.
