In the 1880s, Thomas Edison and George Westinghouse engaged in the 'War of the Currents' - one of the fiercest industrial battles in history - pitting AC against DC. This year, I’ve been looking at how a version of that war could play out in data centers.

Back at the dawn of electricity, Edison backed direct current (DC) distribution while Westinghouse championed alternating current (AC), and both sides used patent suits and chicanery.

Edison said alternating current was too dangerous to be used in distribution. He said it was so deadly, it should be used as the power source of choice for executions by the then-new electric chair.

Faced with this, Westinghouse used any means to avoid the prospect of his technology being used to kill someone in a very public way. He funded legal appeals against the sentence of the first person due to face the electric chair.

When linemen died while working on live overhead wires, Edison quipped that, though he didn’t himself agree with the death penalty, the law could save the expense of executions, and just sentence convicted felons to work on Westinghouse’s infrastructure.

As we know, Westinghouse won, and our grids are predominantly run on alternating current. In 1892, Edison's interests merged with Thomson-Houston to form General Electric. The new company had a strong AC business, and Edison's own control was diluted.

But DC lingered on. Consolidated Edison switched off its last DC customer in 2007.

DC wars in the racks

But that wasn’t the end. During the 20th century, the telecoms sector had grown up using DC. Central offices (aka telephone exchanges) were built using 48V DC connections point-to-point, and the lines out to phones in homes and offices were driven by 24V or 48V DC power.

Many of those have been moving to AC this century. But in the 2000s data centers emerged as a new sector, and in that sector, the current wars returned.

Fundamentally, electronics use DC power. The chips and circuit boards are all powered by direct current, and every computer or other piece of IT equipment that is plugged into the AC mains has to have a “power supply unit” (PSU), also known as a rectifier or switched mode power supply (SMPS) inside the box, turning the power from AC to DC.

Old-school data centers use mains-oriented IT equipment, and route the mains power into the racks and right to the servers. That results in complex cabling, in which all the kit in one rack is driven from giant power strips, sometimes called power distribution units (PDUs).

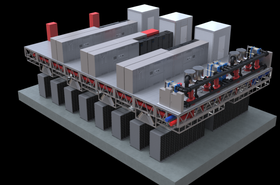

Starting around 2011, hyperscalers argued for a simplification, taking the rectifiers out of the servers, and delivering DC power directly to them via a bus bar at the back of the rack. No more SMPS units and all the power can be rectified in one power shelf.

The Open Compute Project (OCP) emerged from Facebook with a scheme for Open Racks driven by bus bars. Open19 (now the SSIA) came up with an alternative idea, and each had to support both 12V and 48V variants.
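One reason the bus bar voltage matters is simple arithmetic: for the same power, a lower-voltage bar has to carry proportionally more current, and resistive loss grows with the square of that current. Here is a minimal sketch, assuming a hypothetical 12 kW rack and a hypothetical bus bar resistance of 0.1 milliohm. Neither figure comes from OCP or Open19, and the function names are made up for illustration.

```python
def busbar_current_a(power_w: float, voltage_v: float) -> float:
    """Current in amps needed to deliver power_w at voltage_v (I = P / V)."""
    return power_w / voltage_v


def resistive_loss_w(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Power in watts dissipated in the bus bar itself (P_loss = I^2 * R)."""
    current = busbar_current_a(power_w, voltage_v)
    return current ** 2 * resistance_ohm


if __name__ == "__main__":
    RACK_POWER_W = 12_000          # assumed rack load, for illustration only
    BUSBAR_RESISTANCE_OHM = 1e-4   # assumed bus bar resistance, for illustration only

    for volts in (12, 48):
        amps = busbar_current_a(RACK_POWER_W, volts)
        loss = resistive_loss_w(RACK_POWER_W, volts, BUSBAR_RESISTANCE_OHM)
        print(f"{volts}V bus bar: {amps:.0f} A, about {loss:.2f} W lost as heat")
```

With these assumed figures, the 12V bar carries 1,000 A against 250 A at 48V, and the resistive loss differs by a factor of 16, whatever resistance you plug in.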

DC racks are now a major feature in the data center world, and we’ve spoken to some of the proponents in our latest power supplement.

Going full DC

But there’s more. Even before the hyperscalers installed their bus bars, other people had been proposing a more radical shift to use DC across the whole facility.

The reasoning was about simplicity.

Data centers have an Uninterruptible Power Supply (UPS) designed to power the facility for long enough for generators to fire up. The UPS has to have a large store of batteries, and batteries store and deliver DC.

So power enters the data center as AC, is converted to DC to charge the batteries, and then back to AC for distribution to the racks.

In the 2000s, a number of companies proposed to take the AC link out of the equation. Convert to DC, charge the batteries, and distribute DC to the racks. ABB was convinced enough to buy the leading DC proponent Validus back in 2011.
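To see why that looked attractive, it helps to multiply out the losses along each path. The sketch below is a rough illustration, not a model of any real facility: the per-stage efficiency figures are assumptions picked for the example, and the stage names are hypothetical labels.

```python
from math import prod

# Assumed, illustrative per-stage efficiencies (not measured values).
AC_PATH = {
    "ups_rectifier_ac_to_dc": 0.97,   # mains AC converted to DC to charge batteries
    "ups_inverter_dc_to_ac": 0.97,    # back to AC for distribution to the racks
    "server_psu_ac_to_dc": 0.94,      # PSU in each server converts to DC again
}
DC_PATH = {
    "facility_rectifier_ac_to_dc": 0.97,  # one conversion, batteries sit on the DC bus
    "rack_dc_to_dc": 0.97,                # step down to the voltage the servers use
}


def chain_efficiency(stages: dict[str, float]) -> float:
    """End-to-end efficiency is the product of the stage efficiencies."""
    return prod(stages.values())


def input_power_kw(it_load_kw: float, stages: dict[str, float]) -> float:
    """Power that must enter the chain to deliver it_load_kw at the far end."""
    return it_load_kw / chain_efficiency(stages)


if __name__ == "__main__":
    IT_LOAD_KW = 1000.0  # assumed IT load, for illustration only
    for name, stages in (("AC distribution", AC_PATH), ("DC distribution", DC_PATH)):
        eff = chain_efficiency(stages)
        print(f"{name}: {eff:.1%} efficient, "
              f"{input_power_kw(IT_LOAD_KW, stages):.0f} kW in for {IT_LOAD_KW:.0f} kW of IT load")
```

With these assumed numbers, the AC path comes out at roughly 88 percent and the DC path at roughly 94 percent. Real equipment varies widely, so the point is the shape of the calculation, not the specific result: every extra conversion stage multiplies in another loss.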

But the idea didn’t catch on. In this iteration of the war of the currents, DC was widely seen as unsafe. For that reason, and maybe the innate conservatism of the sector, it withered away.

But things can always come back.

Data centers are now looking at using microgrids for power. That means drawing on-site energy directly from sources such as fuel cells and solar panels. As it turns out, those sources often conveniently produce direct current.

A data center could be isolated from the AC grid, and live on its own microgrid. On that grid, DC power sources would charge batteries and feed electronics that fundamentally run on DC.

In that situation, the idea of switching to AC for a short loop around the facility begins to look, well, odd.

For our latest power supplement, DCD spoke to one of the pioneers bringing back full-site DC distribution.

More invention

But when things are opened up for innovation, other things can change too. We could see modern-day Edisons coming up with completely new ideas.

Those central battery rooms are only one place to store the backup. There have been multiple schemes proposing batteries within the actual data center racks, where they can provide that failover time to each server individually, instead of through a centralized system.

And beyond that, why not re-examine the fundamentals of electrical conduction?

A couple of decades after the War of the Currents, in 1911, the phenomenon of superconductivity was discovered. Materials exist which, at low temperatures, have no resistance at all.

In theory, superconducting circuits could carry very large currents without any resistive loss, up to a critical current that depends on the material.

The drawback is the need for low temperatures. Scientists have searched for high-temperature superconductors, and produced some which operate at -130°C, warm enough to be cooled with liquid nitrogen.

Superconducting bus bars, sheathed in a cryogenic circuit, have been proposed that could ship very large amounts of power around a data center without resistive loss.

Real data centers are going to take a lot of convincing to adopt anything that exotic.

But by comparison, maybe DC distribution doesn’t look so unusual after all?