For the latest issue of the DCD Magazine, we looked at delay-tolerant networking, a protocol designed for fragile and latency-heavy networks.

DTN has been used by the military and by zoologists, and is now being proposed as the backbone of the Interplanetary Internet.

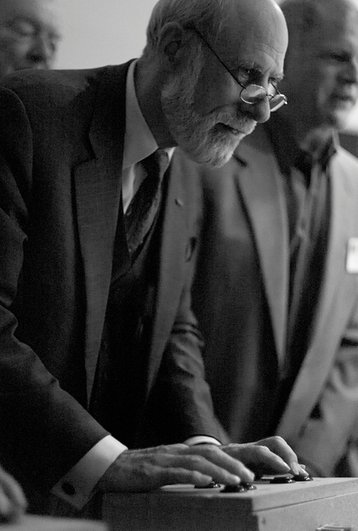

For a fuller explanation of the technology and the efforts to improve adoption, we recommend reading the full feature. However, as part of our research for the piece, we had the pleasure of interviewing TCP/IP co-creator Vint Cerf, often referred to as 'the father of the Internet,' and packet switching pioneer Leonard Kleinrock.

This week, we're publishing both interviews in full, as they covered more ground than could fit into the magazine feature. Below is our interview with Vint Cerf, and we will publish our Kleinrock interview later this week.

Sebastian Moss: So to kick off, I want to understand this long journey you've been on to get DTN into space. You've been working on a version of DTN, the Bundle Protocol, since 1998?

Vint Cerf: All of the tasks that I seem to choose have really long timelines. So as an example, when Bob Kahn and I started the design of the Internet it was 1973. And it didn't get turned on really until 1983. We'd done a bunch of tests, but we turned it on in January of '83. And the Interplanetary work started in 1998. And here we are, almost 25 years later.

We did get an opportunity to test the prototype software in 2004. When the rovers Spirit and Opportunity landed, we had to revise the method by which the data was returned to Earth.

And that involved uploading new software to them, store-and-forward processes, as opposed to point-to-point links direct from the surface of Mars back to the Deep Space Network. And since 2004, there's been continued evolution and standardization.

So it has taken a long time, and we're still not entirely there yet. But we have made an enormous amount of demonstrable progress. Right now, the Interplanetary Network Special Interest Group and the Internet Society are starting to do some demonstrations of the bundle protocols in terrestrial applications, partly in order to scale up and demonstrate that the systems, network management tools, and other things like that actually work at scale. Not that we're expecting billions of nodes or anything like that, but something more than three or four, which is roughly where we are today in terms of demonstrable capability in live situations: small numbers of devices. We'd like to show that the system is scalable.

So the other two big landmarks were the EPOXI spacecraft in 2008 and the ISS in 2016?

VC: Well, with the ISS in 2016 we uploaded the software to some laptops that were onboard the ISS. And we were not in the path of command and control of the spacecraft, we were over on the astronaut usage side, or on the experiment side, we had onboard experiments going on, and we were able to use the Interplanetary protocol to move data back and forth, both commands up to the experiments, and getting data back down again.

EPOXI was sort of a one-shot: we had the opportunity to upload the protocols, and our purpose there was to demonstrate utility at some distance. In this case, it was 81 light seconds. The older, even more primitive software that has been used on Mars since 2004 was not the Bundle Protocol. It was a predecessor store-and-forward system called CFDP, the CCSDS File Delivery Protocol, which we have since revised so that it runs as an application layer, a file transfer layer, with the Bundle Protocol below it and the required transport protocol below that.

So that's what we're capable of demonstrating today. It's what we propose to use for the Artemis missions, the US return to the Moon, and the Gateway program.

And as you might know from your reading, we're also working closely with ESA in Europe, JAXA in Japan, and the Korean space agency. And of course, with the UN Consultative Committee for Space Data Systems, and also the Internet Engineering Task Force, which has standardized it within the Internet community.

Although the Bundle Protocol is not literally an extension of the TCP/IP protocol -- it's a brand new development -- it does run on top of TCP. It will run on top of IP, it'll run on top of LTP, it will run on top of almost anything, in the same sense that the Internet Protocol will run on top of almost anything.
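To make the "runs on top of almost anything" layering concrete, here is a toy Python sketch. It assumes a made-up bundle encoding (a JSON header line plus payload; the real BPv7 format in RFC 9171 uses CBOR) and hypothetical adapter classes standing in for convergence layers. It only shows that one bundle can be handed to whichever underlying transport is available; nothing here opens a real TCP or LTP session.

```python
import json
from abc import ABC, abstractmethod


def make_bundle(dest: str, payload: bytes, lifetime_s: int) -> bytes:
    """Toy bundle: one JSON header line followed by the payload (not the real BPv7 CBOR format)."""
    header = json.dumps({"dest": dest, "lifetime_s": lifetime_s}).encode()
    return header + b"\n" + payload


class ConvergenceLayer(ABC):
    """Hypothetical adapter interface: wraps a bundle for one particular underlying transport."""

    @abstractmethod
    def frame(self, bundle: bytes) -> bytes: ...


class TcpLikeCL(ConvergenceLayer):
    def frame(self, bundle: bytes) -> bytes:
        # Length-prefixed framing, as a byte-stream transport would need.
        return b"TCPCL" + len(bundle).to_bytes(4, "big") + bundle


class LtpLikeCL(ConvergenceLayer):
    def frame(self, bundle: bytes) -> bytes:
        # Stand-in for handing the bundle to an LTP engine as one block.
        return b"LTP" + len(bundle).to_bytes(4, "big") + bundle


class BundleAgent:
    """Keeps the bundle layer independent of whatever carries the bytes underneath."""

    def __init__(self) -> None:
        self.adapters: dict[str, ConvergenceLayer] = {}

    def register(self, name: str, adapter: ConvergenceLayer) -> None:
        self.adapters[name] = adapter

    def send(self, bundle: bytes, via: str) -> bytes:
        # The bundle bytes go out unchanged; only the outer framing differs per transport.
        return self.adapters[via].frame(bundle)
```

The destination string `ipn:2.1` used in a call such as `agent.send(make_bundle("ipn:2.1", b"hello", 3600), "ltp")` is just an illustrative endpoint ID; the point of the design is that swapping "tcp" for "ltp" changes nothing above the adapter.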

The core elements of the Bundle Protocol are not unlike the Internet Protocol in terms of functionality, it's just that it's faced with a much higher probability of disruption, and an absolute guarantee of variable delay because the planets are in motion and their distances apart change with time.

Also, because of the variable and potentially very large delay, the Domain Name System (DNS) doesn't work for this kind of operation. So we've ended up with a two-step resolution for identifiers: first you have to figure out which planet you are going to. Then, after you figure that out, you can do the mapping from the identifier to an address at that locale, where you can actually send the data.

Whereas in the Internet Protocols, you do a one-step lookup: You take the domain name, you do a lookup in the DNS, you get an IP address back and then you open a TCP connection to that target. Here, we do two steps before we can figure out where the actual target is.
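A toy Python sketch of the two-step idea follows. It assumes a made-up `region/name` identifier format and hard-coded per-region directories, neither of which is the real DTN endpoint naming scheme. Step 1 only has to identify the region, which is enough to start forwarding toward the right planet; step 2 is meant to run at a resolver inside that region, so nobody needs a DNS-style round trip across the solar system.

```python
# Hypothetical per-region directories. In this sketch each one would live in its own
# region, so step 2 never needs a round trip across interplanetary distances.
LOCAL_DIRECTORIES = {
    "earth": {"lab-3": "203.0.113.5"},
    "mars": {"rover-1": "mars-node-7"},
}


def region_of(identifier: str) -> str:
    """Step 1: decide which planet/region the identifier belongs to (assumed 'region/name' form)."""
    region, _, _ = identifier.partition("/")
    return region


def resolve_locally(region: str, identifier: str) -> str:
    """Step 2: map the identifier to an address, done by a resolver at the destination locale."""
    _, _, local_name = identifier.partition("/")
    return LOCAL_DIRECTORIES[region][local_name]


def two_step_resolve(identifier: str) -> tuple[str, str]:
    region = region_of(identifier)  # enough to start forwarding toward the right planet
    return region, resolve_locally(region, identifier)
```

For example, `two_step_resolve("mars/rover-1")` yields the region `"mars"` first and only then the local address `"mars-node-7"`.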

When you mention all of the different bodies you're working with, it always makes me curious: which is the greater challenge, the actual technical side or the 'herding 500 cats across the world to all agree to something' side?

VC: I think getting people to agree and to implement is the hard part. That was true for the Internet as well. It was certainly not an easy process. Turning it on in 1983 was just barely the beginning, because we turned it on under the auspices of the US Department of Defense.

And all the networks involved in that initial ignition were DoD-managed. It was only in the mid-1980s that we started to see significant uptake from the Department of Energy with ESnet, the NSF with NSFNET, and NASA with the NASA Science Internet. And from the mid-80s to the late-80s, there were some implementations of TCP/IP in Europe, especially among academics.

So the same argument is true for the interplanetary work. We are still not at the point where the spacecraft makers, like Ball Aerospace, Lockheed Martin, Boeing, and so on, have fully adopted these things. And they probably won't until NASA asserts that you have to have that capability available in order to meet the requirements for spacecraft construction.

And I think that's where the Artemis mission may be the critical turning point for the Interplanetary System, because I believe that will end up being a requirement in order to successfully prosecute that mission.

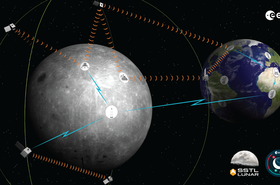

That's why I got interested in LunaNet [see our exclusive deep dive], because that seems to be the first kind of at least somewhat concrete roadmap to getting something off the ground, so to speak.

VC: The interesting issue here is to distinguish between local lunar surface communication and communication back to Earth. If the installations that need to communicate back to Earth are on the face of the Moon that is facing us all the time, you almost don't need a relay capability, except locally. You might need some local relays followed by something transmitting directly back to Earth to an antenna.

On the other hand, if it's on the other side, that's a whole other story. And now we need orbiting spacecraft of some kind in order to pick up a signal and hang on to it and then transmit when it gets back to the other side where it can see the Earth. And that's how the Mars system works.

The orbiters are, in fact, repurposed spacecraft that were used originally to map the surface of Mars. And then because they were still around when the rovers landed, we could repurpose them to be relays in the system.
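The "pick up a signal, hang on to it, transmit later" behavior of those relays can be sketched in a few lines of Python. This is a hypothetical model for illustration, not flight software: a relay node accepts bundles whenever they arrive and only drains its queue when a contact with the next hop is open.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Bundle:
    dest: str
    payload: bytes


@dataclass
class RelayNode:
    """Hypothetical store-and-forward relay, e.g. an orbiter serving a lander out of Earth's view."""
    name: str
    queue: deque = field(default_factory=deque)

    def receive(self, bundle: Bundle) -> None:
        # Store: accept the bundle even though no onward link exists right now.
        self.queue.append(bundle)

    def on_contact(self, link_up: bool, send: Callable[[Bundle], None]) -> int:
        # Forward: drain the queue in arrival order, but only while the next hop is in view.
        if not link_up:
            return 0
        sent = 0
        while self.queue:
            send(self.queue.popleft())
            sent += 1
        return sent
```

Calling `on_contact(False, ...)` while Earth is out of view sends nothing and keeps the data; the first call with `link_up=True` delivers everything held, in order.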

And so the two things that drive my interest in LunaNet are, first, configurations where we end up with something on the far side where we can't use direct communication to Earth. And the other, of course, is local communication, where the radio signals are obscured.

So if you're on one side of a crater and the thing you need to talk to is on the other side, you need something to relay the signal. And you may not be able to do ground-based relays; nobody is going around putting towers all over the Moon, at least not in the near term. And so an orbiter could be very powerful. It introduces delay, obviously, but at the very least, you have the ability to relay, either from one part of the surface of the Moon to another or back to Earth if necessary.

The Chinese have a relay satellite for their far-side Moon landing.

VC: That is correct. They put one in a halo orbit, which gave them the ability to communicate back from the Chang'e 4 spacecraft.

Are they using DTN?

VC: I do not think that they are, but I could be wrong. We have not had engagement with the Chinese space agency.

The only ones that we've worked with closely are ESA, JAXA, and the Korean space agency. [China] are, I believe, participants in CCSDS - I honestly have not gone to look carefully and see if they're on the roster. They certainly have the credibility to be on.

And as a member of the UN, they certainly would be welcome. I don't know if they've been active, but they certainly have access to everything we've done because we made it all public. The software is available on GitHub and the documentation is public. The work that's gone on in the IETF is public. It's all easily discovered. And so we made no attempt to inhibit access to any of that information.

Your early work was military-based. Now it's NASA-based, which obviously has military ties, but it's a more science-led organization. Has that changed how openly you can work?

VC: No, it doesn't. In fact, the irony of it all is that when Bob [Kahn] and I started to work on the Internet, we published our documentation in 1974.

Right in the middle of the Cold War, we laid out how it all works and how it would work. It wasn't detailed, it wasn't an implementation, it was a conceptual description. The paper was published in May of '74. In January '74, we started the detailed specification, and that was published openly in December '74 as an RFC, in the series now associated with the IETF.

And then all of the subsequent work, of course, was done in the open as well. And that was based on the belief that, in the end, if the Defense Department actually wanted to use this technology, it would need to have its allies use it as well, otherwise you wouldn't have interoperability for this command and control infrastructure.

We also figured, first, that if this was successful, it was going to be in use for a long time (p.s. it has been), and second, that we wouldn't know 25 years from then who our allies would be.

And so we concluded that we should just release it publicly because we wanted any allies present and future to have access to the technology so that we would achieve interoperability.

Of course, as things unfolded, I also came to the conclusion that the general public should have access to this. And so we opened it up in 1989, when the first commercial services started.

The same argument can be made for the Bundle Protocol. The demands for terrestrial communication are somewhat less stringent than they are in a deep space case. But anyone who's ever used a mobile phone and discovered that they didn't have very good access could recognize the problem of disruption, or weak signals, and the need for alternatives or relay capability.

We even have some fun applications. There's one in the northern part of Sweden, where an engineering group at Luleå University of Technology, working with Sami reindeer herders, has been testing the use of interplanetary protocols for monitoring reindeer herds. In fact, the person who's handling that operation is going to send in a report in January that we will share through the IPN Special Interest Group of the Internet Society.

So that's a very unusual application. But you can see how that could turn out to be a challenge, because the reindeer are wandering around and the topography is all variable. Nobody has a whole bunch of cell phone towers up in the northern parts of Sweden. So we have to do things that are more resilient.

Another terrestrial use case for DTN is Loon [now canceled]. You detailed that in a research paper in 2018. At the time, you said of this temporal-spatial SDN, 'we're open to [others] using it, reach out to us; NASA, if you want to use it, come to us...' Where's that at now?

VC: Well, so the part of the Loon work that is particularly relevant is the Minkowski routing system - his name is associated with the American nuclear program, the Manhattan Project.

Anyway, the Minkowski routing system is really a very powerful, very clever piece of work, because they were trying to deal with balloons that were free-flying. These are not tethered balloons; these are not balloons that you can guarantee are going in any particular direction. So they had to build an extremely flexible protocol that could figure out which balloons it could communicate with, and in which direction.

How do I aim a laser, for example, at another balloon and have it pick this up? Because remember, laser beams are very tight.

So the Minkowski capability, which is, in many ways, quite separable from the balloon project, it's a piece of network technology, got me very excited because we're still exploring, frankly, the routing protocols that make sense in an interplanetary context.

It will not be a highly dense system; the number of spacecraft available, certainly over the course of the next decade or three, is modest. And so we won't have the richness of adaptive alternate routing that we have in the Internet right now, which is increasingly dense. And of course, with the Starlink effort, OneWeb, and others, the density of connectivity will go up. So that makes it both easier and harder. It's easier because there's a path anywhere you look. And it's harder because there's a path anywhere you look - you have to pick one.

So that particular piece of development and design really captured my attention. And I hope that we can find a way to try that out among the other various routing protocols that we've experimented with.

Just to be didactic about it, they all end up populating what's called a contact graph. In the interplanetary networking space, the contact graph is the equivalent of what in the Internet we would call the forwarding information base, which basically says where this thing is supposed to go: here's an address, you look that up in the forwarding table, and it says send it out that way.

So the FIB, the forwarding information base, is generated by the Border Gateway Protocol, or it's generated by interior gateway protocols like IS-IS, OSPF, and so on... don't you love all these acronyms?
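For readers who haven't met a FIB, the core lookup is a longest-prefix match. A minimal sketch using Python's standard `ipaddress` module, with hypothetical prefixes taken from the documentation address ranges and made-up interface names:

```python
import ipaddress

# Hypothetical forwarding table: destination prefix -> outgoing interface.
FIB = {
    ipaddress.ip_network("0.0.0.0/0"): "uplink",  # default route
    ipaddress.ip_network("198.51.100.0/24"): "eth1",
    ipaddress.ip_network("198.51.100.128/25"): "eth2",
}


def next_hop_interface(dest: str) -> str:
    addr = ipaddress.ip_address(dest)
    # Longest-prefix match: of all prefixes containing the address, the most specific wins.
    # The default route guarantees at least one match.
    best = max((net for net in FIB if addr in net), key=lambda net: net.prefixlen)
    return FIB[best]
```

Real routers use tries or specialized hardware rather than a linear scan; the scan just keeps the rule visible. Here `198.51.100.200` goes out `eth2`, `198.51.100.5` out `eth1`, and anything else falls through to `uplink`.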

And the contact graph is generated by some routing method. There could be any number of them, and some could be very manual, which is pretty much what we do today with the Deep Space Network. A bunch of people will say, 'Okay, so where are the spacecraft? When do they need to be serviced? When can we see them with our big 70-meter antennas? Is there a conflict for time? And how do we resolve that?' And a lot of that is done by a bunch of people arguing with each other over who gets time.
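Once a contact graph exists, routing over it can be framed as an earliest-arrival search. The Python below is a simplified sketch in the spirit of contact graph routing, not the algorithm as implemented in any flight or reference code. Each hypothetical contact is a window during which one node can transmit to another; a bundle may wait at a node until a window opens; link capacity and queueing are ignored. Because waiting can never make a bundle arrive earlier, a Dijkstra-style search over arrival times is valid.

```python
import heapq
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Contact:
    """One scheduled window in which `frm` can transmit to `to` (all times in seconds)."""
    frm: str
    to: str
    start: float
    end: float
    owlt: float  # one-way light time across this hop


def earliest_arrival(contacts: list[Contact], source: str, dest: str, t0: float = 0.0) -> Optional[float]:
    best = {source: t0}  # earliest known arrival time at each node
    heap = [(t0, source)]
    while heap:
        t, node = heapq.heappop(heap)
        if node == dest:
            return t
        if t > best.get(node, float("inf")):
            continue  # stale entry: a faster way to this node was already found
        for c in contacts:
            if c.frm != node or c.end < t:
                continue  # contact starts elsewhere, or has already closed
            depart = max(t, c.start)  # store the bundle until the window opens
            arrive = depart + c.owlt
            if arrive < best.get(c.to, float("inf")):
                best[c.to] = arrive
                heapq.heappush(heap, (arrive, c.to))
    return None  # no sequence of contacts reaches dest
```

With a rover that can reach an orbiter immediately, an orbiter that sees Earth an hour later, and a direct rover-to-Earth window two hours out, the search picks the relay path because it delivers first.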

So we're hoping to find various routing algorithms that we can try out, either in simulation or perhaps in a real live test.

I guess one of the concerns with DTN would be that one of the nodes gets congested.

VC: Yeah, congestion and flow control are really tough problems, especially when you consider that with these really big time delays, you can't collect all the information you would like about the state of the network in a way that convinces you the data is fresh. Because of the latencies involved, some of the data is always stale, which means you have an imperfect view of the state of the network.

And that forces us into a kind of local decision making. The good news is that a lot of the connectivity is predictable, thanks to celestial dynamics.

You can calculate orbits and figure out at what point you should be able to transmit something. The weird thing, of course, is that when two things are far enough apart and in motion, it's like shooting at a moving target: you have to aim ahead of where the target is now, because the signal has to arrive where the spacecraft will be when the signal gets there.
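The "aim ahead" problem can be sketched as a light-time iteration: guess the travel time, see where the target will be by then, recompute the travel time to that point, and repeat until it settles. This Python sketch assumes the target moves in a straight line at constant velocity relative to a stationary transmitter at the origin, and ignores relativistic and atmospheric corrections; real pointing uses precise ephemerides for both ends.

```python
import math

C_KM_S = 299_792.458  # speed of light in km/s


def aim_point(p0, v, tol=1e-12, max_iter=100):
    """
    p0: target position (km) at transmit time, relative to a transmitter fixed at the origin.
    v:  target velocity (km/s), assumed constant over the signal's flight time.
    Returns (light_time_s, aim_km): how long the signal travels and where to point it.
    """
    tau = math.hypot(*p0) / C_KM_S  # first guess: time to reach where the target is now
    for _ in range(max_iter):
        aim = [p + vi * tau for p, vi in zip(p0, v)]  # where the target will be after tau
        new_tau = math.hypot(*aim) / C_KM_S  # time to reach that point instead
        if abs(new_tau - tau) < tol:
            break
        tau = new_tau
    return new_tau, aim
```

For a target at roughly the Moon's mean distance (384,400 km) moving sideways at 1 km/s, the signal takes about 1.28 s, so the beam has to lead the target by about 1.28 km. That is trivial for a broad radio beam but not for a tight laser.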

And it's a little hard to visualize that because this sort of ethereal radio signal gets launched, you can't see it. And the waves are heading out in this direction, and there's the spacecraft moving along, and you sort of have to hit the spacecraft. And this is particularly hard if it's a laser beam, as opposed to a radio wave which has more beam spread.

The notion of 'now' is very broken in these kinds of large delay environments. Because anything you do now can't affect anything anywhere else until it gets there.

There's Einsteinian delay, unless he's wrong. I mean, so far as we know, he was right that the speed of light is 300,000 kilometers a second and there's a limit. Things can't propagate faster than that. So if you transmit a radio wave, it's going to take whatever the distance is divided by 300,000 kilometers per second to get there.
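The rule of thumb here is simply delay = distance / c. A tiny Python helper, using the exact value c = 299,792.458 km/s rather than the rounded 300,000, shows the scale: the Moon at its mean distance of roughly 384,400 km is about 1.28 light-seconds away, and the 81 light-seconds of the EPOXI test corresponds to roughly 24.3 million km.

```python
C_KM_S = 299_792.458  # exact speed of light in km/s (rounded to 300,000 in conversation)


def one_way_light_time_s(distance_km: float) -> float:
    """Minimum delay for a signal to cover distance_km: distance divided by c."""
    return distance_km / C_KM_S


def round_trip_time_s(distance_km: float) -> float:
    """Signal out and reply back, ignoring any processing time at the far end."""
    return 2 * one_way_light_time_s(distance_km)


def distance_for_light_time_km(seconds: float) -> float:
    """Inverse: how far a signal travels in the given number of seconds."""
    return seconds * C_KM_S
```

The round-trip figure is what matters for anything that waits for an acknowledgement, which is why chatty protocols built around quick round trips struggle at these distances.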

So whatever your 'now' is, it's going to be delayed by the speed of light. Of course, if we figure out a way to exceed the speed of light for communication, that would be very cool. But don't let anybody sell you on the idea that somehow quantum entanglement beats the speed of light. It's too bad. I mean, something's going on; there is correlation, which appears to be demonstrable in spite of the speed of light delay.

And, you know, we can test this with Bell's inequality. If you can do an experiment in which it's not possible for the action you took here to affect the measurement you took at the distant point, because the speed of light delay is longer than the measurement experiment, then you have to assume that something else is going on that leads to the correlation.

But it's not the same as signaling.

VC: Exactly. Of course, this continues to be a conundrum for physicists, because the question then is, well, why is it correlated? What is it that enforces the correlation? And we don't have any good answers to that. Einstein didn't like it. It was 'spooky action at a distance,' and he favored hidden variables, and nobody liked that. And so it's an unresolved problem.

So for store and forward to work, what level of storage does each node need relative to the speed of the network?

VC: That's such a good question. Guess what, I had the same question. And I said, 'Okay, where do I go to get an answer to that?' That is the capacity question: what is the capacity of this DTN network? I know where the nodes are, I know what the physics are, and I know what the data rates could be. I have a traffic matrix. Do I have a network that is capable of supporting the demand?

That's the formulation of the question you're asking. So I went to the best possible source for this question, Leonard Kleinrock at UCLA. He is the father of the use of queueing theory to analyze store-and-forward networks, way back in 1961/62, doing his dissertation at MIT on this topic, not in aid of the interplanetary network, but on the more general question of store-and-forward networking.

And so he did some fantastic work; he broke the back of the problem with what was called the independence principle. He left MIT and came to UCLA in the '60s, where he was on my thesis committee. And he's still very, very active. He's 87, still blasting on.
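For context, the classic result usually associated with Kleinrock's independence assumption gives the average end-to-end message delay in a conventional store-and-forward network where each of $M$ links is modeled as an independent M/M/1 queue, with propagation and processing delays omitted. With $\gamma$ the total external message arrival rate, $\lambda_i$ the message rate on link $i$, $C_i$ its capacity in bits per second, and $1/\mu$ the mean message length in bits:

$$
T = \sum_{i=1}^{M} \frac{\lambda_i}{\gamma} \cdot \frac{1}{\mu C_i - \lambda_i}, \qquad \text{valid only while } \lambda_i < \mu C_i \text{ for every link } i.
$$

It assumes links that are always available, which a DTN by definition does not have; extending this kind of analysis to intermittent, scheduled contacts is the open part of the capacity question.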

So I sent him a note saying, look, here's the problem. I've got this collection of nodes, I've got a traffic matrix, and I have this DTN environment. How do I calculate the capacity of the system so I know I'm not gonna overwhelm it?

And, you know, I figured it would take him a while and that he might find a graduate student. Two days later, I get back two pages of dense math saying, okay, here's how you formulate this problem. Now, I didn't get all of the answer. I still don't have all of the answer. But I know I have one of the best minds in the business looking at the problem.

So it's a very interesting computation, but it's not an impossible one. If we know, for example, which scientific instruments are deployed, and we know something about the demand for the data they will produce, and if we know something about which nodes are present and what their contact graph looks like (which is derived from some routing algorithm), then we should be able to compute the capacity of that system. And we can certainly calculate statistically whether or not it will be overwhelmed.

Now, there are some little details, like what if there's radio interference or radio noise, and we lose data and have to retransmit? That will increase the total demand on the network because of the retransmission. So if we've got a really noisy environment, our calculations may have to take that into account. So this is not a solved problem. Your question is spot on. But we're on it.
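A back-of-the-envelope version of that check can be written down even without the full theory. The Python sketch below is not Kleinrock's formulation; it is a simple average-rate test under stated assumptions: each traffic-matrix entry follows a fixed, already-chosen route; each link's usable capacity is its data rate times the fraction of time its contact is up; and losses are independent with resend-until-delivered, so each bit costs 1/(1 - p) transmissions on average. A utilization at or above 1 means the link cannot keep up on average, however much buffering it has.

```python
from collections import defaultdict


def link_utilization(demand_bps, routes, rate_bps, duty_cycle, loss_prob):
    """
    demand_bps: {(src, dst): offered traffic in bits/s}, i.e. the traffic matrix
    routes:     {(src, dst): [node, node, ...]}, a fixed path per pair (assumed given)
    rate_bps:   {(u, v): data rate while the contact is up}
    duty_cycle: {(u, v): fraction of time the contact is up, 0..1}
    loss_prob:  {(u, v): chance a transmission is lost and must be resent}
    Returns {(u, v): average utilization}; values >= 1 mean the link cannot keep up.
    """
    load = defaultdict(float)
    for pair, bps in demand_bps.items():
        path = routes[pair]
        for hop in zip(path, path[1:]):
            # Independent losses with resend-until-delivered: 1/(1 - p) sends per bit on average.
            load[hop] += bps / (1.0 - loss_prob.get(hop, 0.0))
    return {hop: load[hop] / (rate_bps[hop] * duty_cycle[hop]) for hop in load}
```

With hypothetical numbers (100 kbit/s from a rover relayed via an orbiter, an orbiter-to-Earth link of 1 Mbit/s available 10% of the time, and 20% loss on that hop), the raw demand exactly matches the link's average capacity, and retransmissions push its utilization to 1.25: overloaded.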

I guess in the meantime, would it make sense to have some nodes with more storage than necessary, at least at bottleneck areas?

VC: Today you can get terabytes of memory for almost nothing.

Now, there is another issue here: we have spacecraft that are outside of the Earth's atmosphere and outside of its magnetic field. And that's a problem, because we're protected from a lot of radiation by the Earth's magnetic field. When you get out into space, you don't have that protection. So that's another big issue.

The design of anything involving semiconductors is at risk in a high-radiation environment. So we'll have to use radiation-hardened memory, and that's more expensive, especially in large amounts. But honest to god, I think having terabytes of memory available will not be impossible. And given the slow expansion of spacecraft demand, which is a function of how many missions we can launch, I think that we're probably in fairly good shape in terms of absolute raw memory available.
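A rough answer to the storage question is that a node needs enough memory to hold everything that arrives between chances to send it onward. A minimal Python simulation with hypothetical numbers: data arrives at a steady rate, and the node can only transmit during scheduled contact windows. It ignores bundle expiry, priorities, and the overhead of radiation-hardened storage.

```python
def peak_backlog_bits(inbound_bps, outbound_windows, outbound_bps, horizon_s, step_s=1.0):
    """
    Simulate a relay that receives data at a steady rate but can only transmit
    during the given (start_s, end_s) contact windows. Returns the largest amount
    of data (in bits) the node ever has to hold, i.e. the storage it would need.
    """
    backlog = 0.0
    peak = 0.0
    t = 0.0
    while t < horizon_s:
        backlog += inbound_bps * step_s  # data keeps arriving regardless of contacts
        if any(start <= t < end for start, end in outbound_windows):
            # Drain at the link rate while the next hop is reachable.
            backlog = max(0.0, backlog - outbound_bps * step_s)
        peak = max(peak, backlog)
        t += step_s
    return peak
```

For 1 kbit/s of inbound data and a single 10-minute, 10 kbit/s window opening after one hour, `peak_backlog_bits(1_000, [(3600, 4200)], 10_000, 7200)` gives 3.6 Mbit (450 kB): the inbound rate times the longest gap without an outbound contact, which is the quantity that sizes the buffer.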

Is that the biggest bottleneck? The space companies like Ball aren't on board yet - is that the biggest roadblock to retroactively bringing DTN to these kinds of systems?

VC: The biggest roadblock in my view is not technical, right? It's really a question of getting implementations available off the shelf, so that if NASA wants to encourage the design of a mission that takes advantage of those capabilities, the spacecraft builders can say, 'Oh, yeah, we know how to do that, you know, pull this off the shelf and put it here.'

This is why, for example, companies like Cisco that got started early in the Internet story were so helpful: you could pull a router off the shelf instead of finding a computer and a graduate student to wrap around the computer to turn it into a router. You could just buy one from Cisco.

So commercialization in that sense, not necessarily commercial use in applications by the general public, but simply commercialization and availability for the spacefaring nations is vital to the success of DTN. And that's, again, a trend we're already seeing. If you look at SpaceX, for example, it is the incarnation of the commercialization of space, at least in terms of getting into space. And so the same argument could be made for DTN.

We're running out of time. So to shift to a more philosophical question: why is this a big deal? Why would this be good for humans and science?

VC: Well, to the degree we're interested in exploring the solar system and understanding the physics of the world we live in, space exploration is certainly increasingly considered a valuable enterprise from the scientific point of view.

And in order to effectively support manned and robotic space exploration, you need communications, both for command of the spacecraft and to get the data back. And if you can't get the data back, why the hell are we going out there?

So my view has always been: let's build up a richer capability for communication than point-to-point radio links and/or bent-pipe relays. That's what's driven me since 1998, and now, you know, we can smell success; we can see how we can make it work. We are overcoming various and sundry barriers, and the biggest one right now, in my view, is just getting commercial implementations in place, so that there are off-the-shelf implementations available to anyone who wants to design and build a spacecraft.

I could talk to you for hours, but I appreciate your time is valuable. So is there anything you think I might have missed?

VC: None at the moment, I think we covered quite a bit of territory. And of course, I threw a lot of technical stuff at you.

I got 95 percent of it, the rest I will Google.

VC: [Laughs]

[With the call happening soon after the January 6 insurrection, talk segued into a discussion of the far right]

VC: It's very unsettling. I live in Northern Virginia, not very far from DC. On the far right, there's a lot of language about armed demonstrations and things like that, not only here in DC, but in capitals around the country. This is truly scary.

Yeah, stay safe, and best of luck. At least it's a little better here in the UK...

VC: Yeah, democracy should not depend on luck, and yet it does. If you look back at the history of the American War of Independence (which I know you don't call that in the UK), we barely made it. The winter of 1776 could have completely killed that revolution. It didn't, but just barely.

On that cheery low -

VC: - but certainly, the interplanetary stuff is far more positive, far more exciting. It's genuinely... is our species worth saving? Right now the answer is not clear.

The counterargument is that even if we're not, us providing data on how we messed up could be useful to other species.

VC: You may be right; you should talk to David Brin about that. If you're a science fiction reader, there is a series of books called the Uplift series, which is all about advanced species helping less advanced species achieve sentience and the capacity for space travel. You might want to have a look at that.