Water, water everywhere – but how do you get it cooling your precious data center technology? Do you even need to? More importantly, do you need to build a whole new data center to do it? DCD sat down with Matt Archibald, a 20-year veteran of data center cooling, and director of technical architecture at nVent, for a masterclass in liquid cooling.

Archibald begins by articulating a universal pinch point. Liquid cooling goes against everything we’ve ever been taught about mixing water with electricity.

“Historically, there are a lot of customers who, when moving liquid closer to the IT, become hydrophobic. Systems that contain high-performance chips that need to be liquid-cooled are not on the lower cost spectrum; a typical rack could be anywhere from a million to eight million dollars. That's $8 million, and liquid doesn't play well with electronics, so there has been resistance.”

In reality, liquid cooling is a safe solution, with the liquid and technology kept apart by rugged and robust safeguards. It is also now seen as the way forward for high-performance loads whose needs, by their very nature, extend beyond the limits of traditional air cooling, and it should be part of the roadmap both for designers of new facilities and for those retrofitting existing ones.

While data center managers may be concerned about having to rebuild their infrastructure to support liquid cooling, data center technology has evolved to include options for localized liquid loops, consisting of a coil filled with liquid, that can operate within existing air-cooled infrastructure. This makes adopting liquid cooling less invasive and faster, and creates a far more practical way to retrofit an existing facility than completely remodeling.

The chips are down

Says Archibald, “There is a lot of attractiveness for customers with existing legacy data centers because while retrofit for full liquid cooling may be in the cards down the line, it's not here now – but these chips are here now and there are solutions that can assist with rapidly deploying liquid-cooled IT equipment that leverages the existing data center infrastructure.”

Because demand for liquid cooling is so new and the technology it supports is still emerging, many operators don’t know what their liquid footprint will be. Not all IT loads need to be liquid cooled – and indeed, in some cases, air cooling is perfectly sufficient. Then there’s the biggest unknown quantity – the rise of AI. Archibald tells us:

“AI is not replacing anything in the data center, everything we had 17 months ago will still be there – AI is just the pat of butter on top of the pancake stack. I don't know how prevalent it will be in the data center from a customer perspective, but none of the traditional compute, the traditional services, enterprise workloads, and stuff like that, has gone away and we don't know how much of that will be replaced with AI technologies in the future. The roadmap of what a data center is going to look like in five years now has a whole bunch of non-committal statements because customers don't know how AI will impact their current IT footprint.”

Cure your hydrophobia

“AI is interesting, you've got some of those one to three million plus dollar racks, which have liquid-only chips – whereas other loads can be air-cooled. The other side of that is not everything is going to be liquid-cooled. Traditional workloads are going to be higher performance than they were in the past, so they're going to use more power than they have in the past. But you can still get away with air cooling.”

Indeed, a large data center runs the gamut from 10-15kW racks that will only ever need air cooling, to 20-30kW racks, where customers might consider air or liquid cooling if they are future-proofing, to the 60-100+kW range for high-performance computing (HPC) and AI workloads, where liquid cooling is required now. Archibald addresses those borderline mid-range workloads. When is it worth considering liquid for those?

“Some customers might choose to go liquid because they know they’re going to have to do it at greater scale at some point – so let's get our feet wet with it. Liquid cooling was always there in the HPC (high-performance computing) space, scientific academia, and compute communities, if there was a large research and supercomputer project. We solved that with a rear door heat exchanger form of liquid cooling, next to the IT. Wherever any customer is in the journey, you need to be able to adopt the hybrid approach. There’s no one solution, so cooling companies need to be able to put multiple cooling solutions in to give the customer the most flexibility and modularity to achieve what they need.”
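To make the density bands above concrete, here is a minimal Python sketch that maps a rack's power draw to the cooling approach the article describes. The function name `cooling_tier` is hypothetical, and the article only names three bands (10-15kW, 20-30kW, and 60-100+kW); treating everything between 15kW and 60kW as borderline, and everything below 10kW as air-cooled, is an assumption made here for illustration, not guidance from Archibald or nVent.

```python
def cooling_tier(rack_kw: float) -> str:
    """Map rack power (kW) to a cooling approach.

    Bands named in the article: 10-15 kW (air), 20-30 kW (air or liquid),
    60-100+ kW (liquid). The gaps between those bands are not covered by
    the article; this sketch ASSUMES they count as borderline.
    """
    if rack_kw < 0:
        raise ValueError("rack power must be non-negative")
    if rack_kw <= 15:
        # Low-density racks: air cooling is sufficient.
        return "air"
    if rack_kw < 60:
        # Mid-range (assumed to include the unlisted 15-20 and 30-60 kW gaps):
        # a judgment call, often driven by future-proofing.
        return "air or liquid"
    # HPC and AI densities: liquid cooling is required now.
    return "liquid"


if __name__ == "__main__":
    for kw in (12, 25, 80):
        print(f"{kw} kW rack -> {cooling_tier(kw)}")
```

In practice the cut-offs would be set per facility, which is consistent with Archibald's point that there is no single solution.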

The solution is listening

Archibald believes that the secret to finding the right solution is listening to the customer and, with it, asking the right questions. He explains:

“I can throw out all the different ‘Do this, do that’ but take a step back. What are they honestly trying to achieve? I'm not questioning anything, what I'm trying to get out of them is what problem we are ultimately trying to solve. This could be things like whether they want to achieve a more efficient facility by running the chiller less, supporting higher-power IT, or redistributing the power balance between the facility and IT equipment. These sorts of things factor into what solution I recommend.”

This speaks to the fundamental point – liquid cooling, especially when it comes to retrofitting, is hard. But it’s possible. The key is focusing on a clear and defined end objective.

“Deploying liquid in any format fundamentally changes how the data center operates. But we don’t want to do that. We want the data center to operate as it historically has, and deploy these new technologies in the least invasive way possible. There are a range of cooling technologies, both within the nVent portfolio and outside that can help customers get down this path. We want to ensure we're knocking out as many objectives as possible, knowing that not everybody is an expert in liquid cooling.”

The future is hybrid

It’s worth restating the point that, like most experts, Archibald doesn’t believe in an “all-liquid” future, and with good reason. He tells us:

“There is always going to be a place for air cooling, you can't liquid cool everything. [Liquid cooling] is going to become more dominant but it's not going to be the dominant force. Air can still be efficient when done right. You still have data centers with very low PUEs, so it might be that two to five percent of the data center might be some form of liquid-cooled in the future, and that might grow to 20 to 40, but it'll keep progressing as the needs and the technology demand.”

Part of the solution to getting the right setup is education. We’ve already talked about the “hydrophobia” factor associated with liquid cooling, but there are also valid concerns about creating clean rooms, dedicated maintenance teams, holes being drilled through walls, and so on. Archibald points to solutions such as rear door heat exchangers (RDHX) and in-row cooling that don’t require such dramatic installation methods or upkeep.

“Modularity, flexibility, and cost are key to achieving objectives, but we can’t forget about simplicity. It’s not as simple as only deploying liquid-cooled CPUs or deploying rear door heat exchangers. Customers have to work around these liquid cooling solutions, so we as vendors have to make it easy to work around. Air is this kind of cool thing, it is just sort of everywhere. You can have more of it than you need, you can have less of it than you need, and you can deal with those imbalances rather easily. From a space reasoning perspective, we only want the liquid to be where it needs to be. The more points we want direct liquid cooling to touch, the more the solution becomes both complex and costly. Having a blend of liquid cooling technologies that achieve the desired objectives improves efficiency and controls costs, while still giving customers the flexibility to address changing needs in the future.”

Regulating the temperature

Then there’s the question of how to regulate the temperature in a hall containing mixed loads, cooled by a variety of methods. For this, Archibald talks of two main considerations – control, and modeling.

“I need that solution to work locally, as well as globally with my data center without tearing everything up. By modeling, such as with a digital twin, I can see how dropping in a new system affects the local environment. From a control perspective, I want controls to integrate locally and manage the new system locally to ensure the environmental requirements are met, but I also want to ensure these controls integrate upstream. It becomes more than just collecting telemetry, it’s to make sure that putting this new system in this specific place isn’t going to starve other locations of needed power and cooling or generate too much heat that negatively affects other IT equipment.”
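The placement concern Archibald describes can be illustrated with a very rough headroom check. The Python sketch below is a toy, not a digital twin and not an nVent tool: the `Zone` class, the `can_place` function, the 10 percent safety margin, and the simplification that all IT power becomes heat to be removed within the same zone are all assumptions introduced here for illustration.

```python
from dataclasses import dataclass


@dataclass
class Zone:
    """A hypothetical slice of the data hall with shared power and cooling."""
    name: str
    power_capacity_kw: float    # electrical capacity available to the zone
    cooling_capacity_kw: float  # heat the zone's cooling can remove
    it_load_kw: float           # current IT load (assumed equal to heat output)


def can_place(zone: Zone, new_load_kw: float, margin: float = 0.1) -> bool:
    """Return True if adding new_load_kw keeps the zone within both its
    power and cooling capacity, after reserving a safety margin (assumed 10%)."""
    projected = zone.it_load_kw + new_load_kw
    power_limit = zone.power_capacity_kw * (1 - margin)
    cooling_limit = zone.cooling_capacity_kw * (1 - margin)
    return projected <= power_limit and projected <= cooling_limit


if __name__ == "__main__":
    row_a = Zone("row-a", power_capacity_kw=200, cooling_capacity_kw=180, it_load_kw=100)
    for extra in (60, 70):
        verdict = "fits" if can_place(row_a, extra) else "would starve the zone"
        print(f"Adding {extra} kW to {row_a.name}: {verdict}")
```

A real model would also account for airflow, neighboring zones, and upstream plant limits, which is where the modeling and integrated controls he mentions come in.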

The last five years have been tumultuous in all forms of industry, and for data centers, the combination of massive growth through the rise of new workloads including but not limited to AI, and the supply chain issues caused by global events, has been a double whammy. Archibald zooms out to look at the challenges in the industry today.

“There are a lot of challenges that we've never faced before. Take a pump vendor, they have their business, which suits multiple different industries and verticals. Now you've got this historically niche space overnight becoming very high demand. How does their business maintain that supply chain?”

A good question. He continues:

“We've seen a few component manufacturers within our supply chain that don't necessarily want to shelve their existing business but have a whole new set of volumes, so now they're scaling up to support it. That’s how we cope with the demand across this breadth of products – making sure the supply chain is resilient. During Covid, it was impossible to get a power supply or a fan. Lead times have come down, but they're not at pre-Covid levels. It's not just COVID-19 though, it's increased demand. Supply chains have recovered to pre-Covid levels, but all of a sudden, there is this whole new set of demands from increasing digital services, along with AI as this whole new big thing on top.”

Supply chain letters

Another big consideration is how suppliers source components in the first place. When liquid cooling was a niche pursuit, a small-scale order with a ‘mom and pop’ manufacturer was sufficient. Small-scale manufacturing can’t always promise to keep up with demand as it skyrockets, so internal production of parts becomes a more viable option for larger companies like nVent. It’s not just about the size of the order, as Archibald says:

“There are also a bunch of different challenges surrounding manufacturing quality, it becomes a very precise process. How can you scale your manufacturing to keep up with demand, with that level of accuracy? This comes down to an at-scale manufacturing capability with a focus on both manufacturing quality as well as knowledge of the application. Extending quality processes beyond manufacturing ensures success from small-scale applications to mass production. nVent quality engineers are involved from the day-one concept, through the product engineering phase, and even out through solution deployment. This ensures that our products are designed from day one for manufacturing scale and provides a direct feedback loop for continuous improvement. The main thing though, the whole way through the supply chain as well as our end-of-line manufacturing, just comes down to maintaining quality at scale.”

Learn from the experts

We finished the masterclass by asking Archibald for his advice to someone who knows that liquid cooling needs to be a part of their future strategy but doesn’t know where to begin. We have to say – we like the way he thinks on this:

“There are a lot of articles like this one to see what the trends, guidelines, and recommendations are. There is a lot of chatter. You need to be able to trust your source. Read, read, read – online because it's faster digestion of information. A lot of the names of experts and authors come up again and again. It's not because they want to monopolize the conversation. These are the people who know that liquid cooling isn't new, it's just been over in its side corner for years.”

“I'm not saying blindly trust them, but track some of these author and contributor names – people who know what they're talking about, they have lived it, they have breathed it. The caveat is that every single customer's installation will be different. There are so many nuances to liquid cooling because it's married to both the facility and the IT equipment, it's an intimate relationship – infrastructure and support have to be there. So, have multiple conversations, and get multiple perspectives, because that's going to help formulate your strategy.”


More from nVent

-

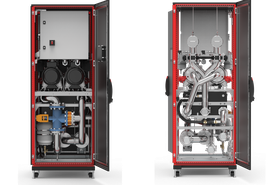

New rear door coolers from nVent to offer a scalable solution for high-density racks

Providing a scalable, pay-as-you-go, room-neutral solution for cooling high-density racks up to 78kW

-

Sponsored Unpacking CDU motors: It’s not just about redundancy

Liquid cooling is here – next-generation chips need more efficient cooling methods to keep them from overheating

-

Sponsored Your golden ticket to liquid cooling – enter through the rear door

Could rear door heat exchangers be the missing link for retrofit?