A research newsletter from Goldman Sachs has warned that large tech companies have ramped up capital expenditure to fuel generative AI development, but have yet to show sustainable business models.

The investment banking firm estimates that around $1 trillion will be spent over the next few years on data centers, semiconductors, grid upgrades, and other AI infrastructure.

The report argues that even if a so-called “killer application” were to emerge, it’s unclear whether generative AI will deliver the financial returns investors have been banking on.

Meanwhile, countries that have led the technology’s charge, such as the US, are now grappling with hardware shortages and, even more worryingly, power constraints that are likely to require their grids to be overhauled.

"So, the crucial question is: What $1tn problem will AI solve? Replacing low-wage jobs with tremendously costly technology is basically the polar opposite of the prior technology transitions I’ve witnessed in my thirty years of closely following the tech industry," Goldman Sachs' head of global equity research, Jim Covello, said.

"Many people attempt to compare AI today to the early days of the Internet. But even in its infancy, the Internet was a low-cost technology solution that enabled e-commerce to replace costly incumbent solutions."

Given the complexity of building AI chips, alongside Nvidia's market dominance, Covello said that there is no guarantee that costs will naturally fall. "The market is too complacent about the certainty of cost declines," he said.

MIT economist Daron Acemoglu said that “only a quarter of AI-exposed tasks will be cost-effective to automate within the next 10 years, implying that AI will impact less than five percent of all tasks.”

He also argued that there is no guarantee AI models will become less expensive as they improve over time. He further estimated that, over the next 10 years, AI will improve US productivity by only 0.5 percent and increase GDP growth by 0.9 percent.

"How long investors will remain satisfied with the mantra that 'if you build it, they will come' remains an open question," Goldman’s Covello continued. "The more time that passes without significant AI applications, the more challenging the AI story will become.

"And my guess is that if important use cases don’t start to become more apparent in the next 12-18 months, investor enthusiasm may begin to fade. But the more important area to watch is corporate profitability. Sustained corporate profitability will allow sustained experimentation with negative ROI projects. As long as corporate profits remain robust, these experiments will keep running. So, I don’t expect companies to scale back spending on AI infrastructure and strategies until we enter a tougher part of the economic cycle, which we don’t expect anytime soon."

Senior equity research analyst Eric Sheridan was more positive about the current moment: "Those who argue that this is a phase of irrational exuberance focus on the large amounts of dollars being spent today relative to two previous large capex cycles—the late 1990s/early 2000s long-haul capacity infrastructure buildout that enabled the development of Web 1.0, or desktop computing, as well as the 2006-2012 Web 2.0 cycle involving elements of spectrum, 5G networking equipment, and smartphone adoption.

"But such an apples-to-apples comparison is misleading; the more relevant metric is dollars spent vs company revenues. Cloud computing companies are currently spending over 30 percent of their cloud revenues on capex, with the vast majority of incremental dollar growth aimed at AI initiatives. For the overall technology industry, these levels are not materially different than those of prior investment cycles that spurred shifts in enterprise and consumer computing habits."

Colleague Kash Rangan added: "Every computing cycle follows a progression known as IPA - infrastructure first, platforms next, and applications last. The AI cycle is still very much in the infrastructure buildout phase, so finding the killer application will take more time, but I believe we’ll get there."

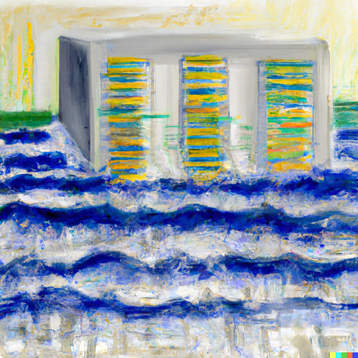

Irrespective of the long-term success or failure of the investment cycle, the AI data center buildout will have a near-term impact on the grid. Carly Davenport, senior US utilities equity research analyst, said: "After stagnating over the last decade, we expect US electricity demand to rise at a 2.4 percent compound annual growth rate (CAGR) from 2022-2030, with data centers accounting for roughly 90bp of that growth.

"Indeed, amid AI growth, a broader rise in data demand, and a material slowdown in power efficiency gains, data centers will likely more than double their electricity use by 2030. This implies that the share of total US power demand accounted for by data centers will increase from around three percent currently to eight percent by 2030, translating into a 15 percent CAGR in data center power demand from 2023-2030."
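As a rough check on the growth figures quoted above, compound annual growth can be converted into a total multiple. The sketch below is illustrative only and is not taken from the report: the function names are hypothetical, and it assumes 2023-2030 means seven compounding years and 2022-2030 means eight.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Total growth factor after compounding `cagr` for `years` years."""
    return (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


# Rates are the ones quoted in the article; the year counts are an
# assumption of this sketch (2023-2030 = 7 years, 2022-2030 = 8 years).
data_center_multiple = growth_multiple(0.15, 7)
total_demand_multiple = growth_multiple(0.024, 8)

print(f"Data center demand multiple, 2023-2030: {data_center_multiple:.2f}x")
print(f"Total US demand multiple, 2022-2030: {total_demand_multiple:.2f}x")
```

Under those assumptions, a 15 percent CAGR compounds to roughly 2.66 times the starting level, which is consistent with data centers "more than doubling" their electricity use, while 2.4 percent a year lifts total demand by about a fifth.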

She added: "We estimate around 47GW of incremental capacity is needed to serve data center-driven load growth in the US through 2030."

Hongcen Wei, commodities strategist, focused on data center hotspot Virginia, noting: "Data centers boosted Virginia power consumption by 2.2GW in 2023, accounting for 15 percent of the total power consumption in the state that year, compared to virtually zero percent in 2016 and roughly three percent in 2019.

"While the evidence suggests that AI and data centers are boosting US power demand, the overall magnitude of the boost remains modest compared to both the current level of total US power demand, as well as the level of data center power demand expected later this decade. We estimate the 2.2GW of Virginia data center power demand in 2023 makes up only 0.5 percent of the 470GW of total US power demand and 7 percent of the roughly 30GW increase in overall data center demand our equity analysts expect by 2030."
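The two percentages in that estimate follow from simple division of the quoted gigawatt figures. A minimal sketch, assuming only the numbers given in the quote (the helper name `share_percent` is hypothetical):

```python
def share_percent(part_gw: float, whole_gw: float) -> float:
    """Return `part_gw` as a percentage of `whole_gw`."""
    return part_gw / whole_gw * 100.0


# Figures as quoted in the article, all in gigawatts.
virginia_data_center_gw = 2.2
total_us_demand_gw = 470.0
expected_data_center_increase_gw = 30.0

share_of_us_demand = share_percent(virginia_data_center_gw, total_us_demand_gw)
share_of_expected_increase = share_percent(
    virginia_data_center_gw, expected_data_center_increase_gw
)

print(f"Share of total US power demand: {share_of_us_demand:.2f}%")
print(f"Share of expected data center increase: {share_of_expected_increase:.1f}%")
```

The results, about 0.47 percent and 7.3 percent, round to the 0.5 percent and 7 percent cited.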

Wei continued: "The magnitude of the recent increase in data center power demand in Virginia provides a glimpse of the large boost in US power demand likely ahead."

This growth will put an unprecedented strain on the grid, former Microsoft VP of energy Brian Janous said: “Utilities have not experienced a period of load growth in almost two decades and are not prepared for, or even capable of matching, the speed at which AI technology is developing.

“Only six months elapsed between the release of ChatGPT 3.5 and ChatGPT 4.0, which featured a massive improvement in capabilities. But the amount of time required to build the power infrastructure to support such improvements is measured in years.”

Despite the concerns and constraints raised in the report, Goldman Sachs editor Allison Nathan concluded: "We still see room for the AI theme to run, either because AI starts to deliver on its promise, or because bubbles take a long time to burst."