A £42 million ($53.5m) research project aiming to ‘redefine the compute paradigm’ and cut the cost of AI infrastructure by 1,000 times has been launched by the UK’s Advanced Research and Innovation Agency (ARIA).

The 'Scaling Compute: AI at 1/1000th the cost' project is seeking to “create new technological options for the infrastructure supporting AI systems, enabling AI processors with performance, efficiency, and ease of manufacturing well beyond what is deemed possible today,” according to its website.

ARIA, a UK government agency set up to back novel technology research projects, is inviting anyone with ideas on how this goal can be achieved to submit concept papers. The project will run for four years.

A new approach to AI compute power

Suraj Bramhavar, ARIA's program director for Scaling Compute, said that for more than 60 years, society has “benefited from exponentially more computing power at lower cost.” But, he said, “this fact is no longer true and has coincided with an explosion of demand for more compute power driven by AI.”

Writing on LinkedIn, Bramhavar said: “The narrow mechanisms used to train these AI systems come at incredible cost (the world’s leading AI models cost upwards of £100m to train) and this combination of technological significance and scarcity have far-reaching economic, geopolitical, and societal implications.

“Nature provides us with at least one such existence proof that it is fundamentally possible to accomplish sophisticated information processing much more efficiently.”

Bramhavar said the program will seek to demonstrate that it is possible to “significantly drop the hardware costs required to train large AI models,” and to do this “without primarily relying on leading-edge fabrication facilities.”

The cost of the AI revolution

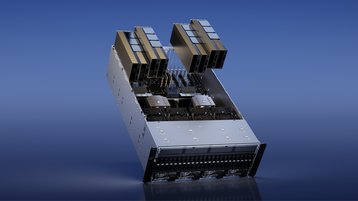

Building and running AI systems is an expensive business requiring thousands of chips. GPU maker Nvidia’s top-of-the-range AI chip, the H100, sells for up to $40,000 per unit, while the company’s older A100 GPU, which has been used to train popular models such as OpenAI’s GPT-4, costs about $10,000.

OpenAI CEO Sam Altman said last year that GPT-4 had cost his company more than $100 million to train, and that building the model required 25,000 A100s.

ARIA was announced by the UK government in 2021 and officially launched last year. Its goal is to fund ‘moonshot’ technology projects in a bid to mimic the success of the US government research agency DARPA, which is credited with a key role in the development of foundational technologies including the Internet, GPS, and the personal computer.

The Scaling Compute initiative falls within ARIA’s Nature Computes Better workstream, which is looking to “redefine the way computers process information by exploiting principles found ubiquitously in nature.”