If you’ve been around the data center industry for any length of time, you’ve probably heard that, sometime in the future, we’ll be enjoying all the advantages of dense data center designs.

We know that being able to cool 20, 30, even 50 kW of hardware in a rack unlocks all sorts of possibilities, from smaller data center footprints to support for bleeding edge hardware for generative AI.

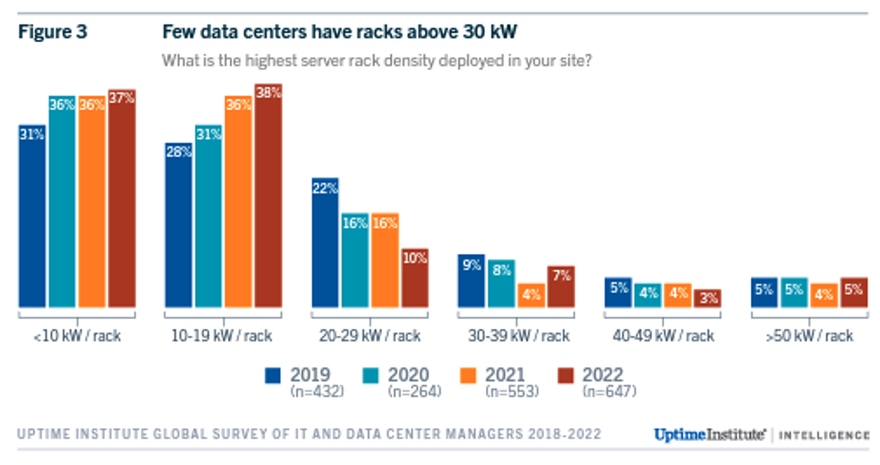

So let’s ask a blunt question: where are all the high density racks? The latest Uptime Institute Global Data Center Survey gives us a good sense of the situation.

To put it simply, the vast majority of organizations don't support densities above 20 kW, and the numbers have actually been on the decline for the past few years. And, as the report notes, if we took hyperscalers out of the analysis, and added in all the small server closets and server rooms, the density situation would actually be worse than these results suggest.

Why is that?

There are four intersecting factors affecting data center operators, keeping them from achieving greater densities.

1. Poor server utilization. We know from research that server utilizations, outside hyperscalers, are woefully low – IBM reports that the average server utilization is 12-18 percent. Despite 20 years of server virtualization and several years of containers, many organizations still run a single workload on a server. This blocks server consolidation, forcing providers to rack more hardware than they’d like.

2. The comfort zone. Increasing density isn’t a straightforward task. It takes different thinking, different technologies, and different budgets. Many providers are happy sticking to their status quo because it feels safer. Greater density essentially requires liquid cooling, and understanding its technical risks isn’t easy, especially when you’ve never run 20-30 kW densities at scale and you’re afraid of liquid in your data center.

3. The need to retrofit data centers to achieve greater densities. Doubling or tripling both power distribution and cooling isn't an easy task. Doing it for a few racks has its complexities; doing it across the data center might be impossible. Whether or not data center operators like it, a retrofit comes with a substantial set of hard and soft costs, and in a world of limited budgets, those costs can put a density retrofit out of reach.

4. Making a density move comes with added business risk. Operators essentially have two options:

a. They can build a data center that supports 30, 40, even 50 kW per rack, and then they run the risk of not having the demand for that density. If that situation extends for years, they've spent substantial money on an underutilized or unutilized resource, which they would then be forced to write off.

b. They can build a data center that offers today's density levels, supporting today's workloads and supporting today's servers. In the past, they could probably get a decade out of that approach. But if they have a workload or a customer who demands more density, they have built a design that can't serve emerging needs.

So the path past these challenges isn't obvious. However, increased density is going to be a key consideration going forward, because, to put it simply, the future demands it.

New servers are hotter than ever

Today's servers can consume a kilowatt or more, and according to this road map, in two years we will see servers consuming as much as two kilowatts. If your racks can only provide 10 kilowatts of cooling, then you're deploying five to 10 servers per rack, and investing more money in blanking panels. That is terrible from a space utilization standpoint, and if you're a colocation provider, it's also terrible from a business standpoint.
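
The arithmetic behind that range can be sketched in a few lines of Python. This is an illustrative calculation only: the helper name `servers_per_rack` is made up for this example, and it assumes the rack's cooling budget is the only limit, using the 1 kW and 2 kW per-server figures mentioned above.

```python
def servers_per_rack(rack_cooling_kw: float, server_kw: float) -> int:
    """Whole servers that fit within a rack's cooling budget (hypothetical helper)."""
    if rack_cooling_kw < 0 or server_kw <= 0:
        raise ValueError("rack_cooling_kw must be >= 0 and server_kw must be > 0")
    # Assumption: cooling is the only constraint; power feeds and rack units are ignored.
    return int(rack_cooling_kw // server_kw)


# Compare today's ~1 kW servers with the ~2 kW servers on the road map.
for server_kw in (1.0, 2.0):
    for rack_kw in (10.0, 50.0):
        count = servers_per_rack(rack_kw, server_kw)
        print(f"{rack_kw:.0f} kW rack, {server_kw:.0f} kW servers: {count} per rack")
```

Under these assumptions, a 10 kW rack holds ten 1 kW servers or five 2 kW servers, while a 50 kW rack would hold 25 of the 2 kW servers.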

Conventional cooling

Trying to conventionally cool hotter servers is a recipe for losing ground on PUE. Research shows that data center PUE has remained stubbornly flat. With these hotter servers emerging, conventional cooling will leave us backsliding on PUE unless we come up with a more efficient way to cool servers.

Doubling server power consumption, and then doubling CRAC power consumption to compensate for the added heat is not the path toward greater data center efficiency, lower PUE, and long-term industry sustainability.
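
A rough worked example makes the point concrete. PUE is total facility power divided by IT equipment power; the kilowatt figures below are hypothetical, chosen only to keep the arithmetic easy to follow, and the sketch simplifies by treating cooling as the only overhead unless told otherwise.

```python
def pue(it_kw: float, cooling_kw: float, other_overhead_kw: float = 0.0) -> float:
    """Power usage effectiveness: total facility power / IT equipment power."""
    if it_kw <= 0:
        raise ValueError("it_kw must be positive")
    return (it_kw + cooling_kw + other_overhead_kw) / it_kw


# Hypothetical loads (assumptions, not measurements from any real facility).
baseline = pue(it_kw=1000, cooling_kw=500)
# Server power doubles, and cooling power doubles to compensate.
doubled = pue(it_kw=2000, cooling_kw=1000)

print(f"baseline PUE: {baseline:.2f}")
print(f"doubled PUE:  {doubled:.2f}")
```

In this simplified case the ratio does not improve at all, while the absolute power spent on cooling goes from 500 kW to 1,000 kW. Lowering PUE requires cooling power to grow more slowly than IT power.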

New workloads demand new densities

Whether or not generative AI becomes a widely deployed service in enterprises, it's becoming clear that more and more organizations intend to incorporate AI-powered capabilities in the software they sell, the products they build, the services they offer, and even in their internal operations.

AI workloads demand new power and cooling thinking, because they run on intensely hot, dense infrastructure. AI is the reason that Meta paused their data center construction last year and has announced the need to go all-in toward liquid-cooled racks. They understand, better than anyone, that the densities achieved by conventional air cooling simply are not a fit for these emerging workloads.

Distributed cloud demands denser infrastructure

Again and again, we see latency-sensitive workloads that can't be run at scale on centralized cloud infrastructure. Instead, these workloads are being deployed on a wide and diverse range of edge infrastructure platforms, many of which must be slotted into space-confined environments, like telco sheds or spare space in offices.

These distributed infrastructure designs are more efficient, effective, and impactful if they are dense, simply because they can provide more compute and storage capacity per square foot.

What should data center providers and operators do to cope with these challenges and difficulties?

It's time to understand that supporting greater densities positions you for greater success. Being able to support emerging designs, emerging workloads, and emerging data center site selection possibilities with cooling that supports radically hotter power envelopes gives you added flexibility and freedom.

It's time to evaluate technologies. It's a bit of a non-standardized wild west out there, with many intersecting, overlapping, and competing providers. The smart data center providers and operators know that every new cooling technology needs to be on the table to find the best options for their unique needs.

Whether you are evaluating rear door, DLC, or immersion, you're likely to find situations in which one technology offers advantages over another. Liquid cooling technologies are mature and proven in every industry other than the data center industry.

It's time to evaluate different data center designs. This is of course what Meta has done, recognizing that their 2022 data center designs weren't suitable for 2023. Most organizations aren't under the pressure that Meta experiences, but it's clear that those pressures will continue to become more widespread. Many data center design and construction companies are working feverishly to deliver new, modular designs that can support 50 kW and beyond.

Density doesn’t have to be an all-or-nothing initiative. It’s likely that existing providers will start by retrofitting individual racks to address individual requirements. That incremental approach gives providers an opportunity to trial different technologies and tailor designs to distinct requirements.

It's also clear that thinking about density has to be a holistic process, not just a process about technology. Everyone from real estate and finance to infrastructure operations and IT stack administrators will find themselves in the middle of conversations about density. Density unlocks a range of possibilities, from smaller data center footprints to greater energy efficiency, and it affects both hard and soft costs.

Finally, the industry is going to reach a density inflection point. When that happens, greater density will become the new differentiator and the new selling point, and anyone who isn’t able to keep up will be left out.

This factor perhaps doesn’t matter as much if you’re not selling data center capacity. However, if you are selling capacity, and your competitor can put a customer’s entire infrastructure in a single rack while you require five racks, you’ll be out of the running.
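
The one-rack-versus-five comparison is simple to reproduce. In this sketch the 50 kW customer load, the two rack densities, and the helper name `racks_needed` are all illustrative assumptions rather than figures from any real deployment.

```python
import math


def racks_needed(customer_load_kw: float, rack_density_kw: float) -> int:
    """Racks required to host a load at a given supported density (hypothetical helper)."""
    if customer_load_kw < 0 or rack_density_kw <= 0:
        raise ValueError("load must be >= 0 and rack density must be > 0")
    # Round up: a partly filled rack still occupies a full rack position.
    return math.ceil(customer_load_kw / rack_density_kw)


customer_load_kw = 50.0  # assumed total load for one customer

print("50 kW per rack:", racks_needed(customer_load_kw, 50.0), "rack(s)")
print("10 kW per rack:", racks_needed(customer_load_kw, 10.0), "rack(s)")
```

With these assumed numbers, the provider supporting 50 kW per rack needs one rack, and the provider limited to 10 kW per rack needs five for the same customer.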

Of course at Nautilus, since we specialize in highly dense designs, we're in the middle of the challenges, complexities and possibilities of density. It's clear that there will be winners and losers as data center density considerations come to the forefront. Some organizations will be on the leading edge, and other organizations will keep their heads in the sand as long as possible.

Fortunately, at Nautilus, our solution suits the early adopter, the mainstream provider, and even the laggard. Whether your aim is simply to incrementally improve your densities, or to drive a radical shift toward tripling or quadrupling your density to 50 kW and beyond, Nautilus is here for you with distinctive designs and partnerships, rooted in real world data centers proven in production, to help you succeed faster than ever before.

To learn more about Nautilus, visit us at https://nautilusdt.com/
