The fire that destroyed a data center (and damaged others) at the OVHcloud facility in Strasbourg, France, on March 10-11, 2021, has raised a multitude of questions from concerned data center operators and customers around the world. Chief among these is, “What was the main cause, and could it have been prevented?”

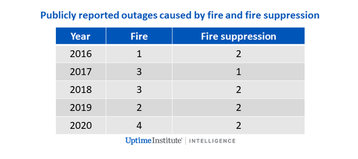

Fires at data centers are rare but do occur: Uptime Institute Intelligence has some details of 25 data center fires (14 publicly recorded, 11 in its member incident database; see Figure), collected over many years. Most of these are quickly isolated and extinguished; it is extremely uncommon for a fire to rage out of control, especially at larger data centers, where strict fire prevention and containment protocols are usually followed. Unfortunately for OVHcloud, the fire occurred just two days after the owners announced plans for a public listing on the Paris Stock Exchange in 2022.

While this Note will address some of the known facts and provide some context, more complete and informed answers will have to wait for the full analysis by OVHcloud, the fire services and other parties. OVHcloud has access to a lot of closed-circuit television and some thermal camera images that will help in the investigation.

OVHcloud

OVHcloud is a high-profile European data center operator and one of the largest hosting companies globally. Founded in 1999 by Octave Klaba, OVHcloud is based in France but has expanded rapidly, with facilities in several countries offering a range of hosting, colocation and cloud services. It has been championed as a European alternative to the giant US cloud operators and is a key participant in the European Union’s Gaia-X cloud project. It has partnerships with big IT services operators, such as Deutsche Telekom, Atos and Capgemini.

Among OVHcloud's customers are tens of thousands of small businesses running millions of websites. But it also has many major enterprise, government and commercial customers, including various departments of the French government, the UK’s Driver and Vehicle Licensing Agency, and the European Space Agency. Many have been affected by the fire.

OVHcloud is hailed as a bold innovator, offering a range of cloud services and using advanced low-energy, free-air cooling designs and, unusually for commercial operators, direct liquid cooling. But it has also suffered some significant outages, most notably two serious incidents in 2017. After that, then-Chief Executive Officer and chairman Octave Klaba spoke of the need for OVHcloud to be “even more paranoid than it is already.” Some critics at the time believed these outages were due to poor design and operational practices, coupled with a high emphasis on innovation. The need to compete on cost with large-scale competitors (Amazon Web Services, Microsoft and others) is an ever-present factor.

The campus at Strasbourg (SBG) is based on a site acquired from ArcelorMittal, a steel and mining company. It houses four data centers, serving customers internationally. The oldest and smallest two, SBG1 and SBG4, were originally based on prefab containers. SBG2, destroyed by the fire, was a 2 MW facility capable of housing 30,000 servers. It used an innovative free air cooling system. SBG3, a newer 4 MW facility that was partially damaged, uses a newer design that may have proved more resilient.

Chronology

The fire in SBG2 started after midnight and was picked up by sensors and alarms. Black smoke prevented staff from effectively intervening. The fire spread within minutes, destroying the entire data center. Using thermal cameras, firefighters identified that two uninterruptible power supplies (UPSs) were at the heart of the blaze, one of which had been extensively worked on the morning before the fire.

All of the data centers have been out of action in the days immediately following the fire, although SBG3 and SBG4 are due to come back online shortly. SBG1 suffered significant damage to some rooms, and OVHcloud has decided it will not be restarted; surviving servers are being moved to other facilities. Many customers were advised to invoke disaster recovery plans, but OVHcloud has spare capacity in other data centers and has been working to get customers up and running.

Causes, design and operation

Only a thorough root-cause analysis will reveal exactly what happened and whether this fire was preventable. However, OVHcloud's many customers and ecosystem partners have highlighted some design and operational issues:

- UPS and electrical fires.

Early indicators point to the failure of a UPS, causing a fire that spread quickly. However, there may be other reasons why a fire started at or near that location. At least one of the UPSs had been extensively worked on in the hours before the fire, suggesting a maintenance issue may have been a main contributor. Although it is not best practice, battery cabinets (when using valve-regulated lead-acid, or VRLA, batteries) are often installed next to the UPS units themselves. This may not have been the case at SBG2, but in this type of configuration a UPS fire can heat the batteries until they start to burn, causing the fire to spread rapidly.

- Tower design.

SBG2 was built in 2011 using a tower design that has convection-cooling based “auto-ventilation.” Cool air enters, passes through a heat exchanger for the (direct liquid) cooling system, and warm air rises through the tower in the center of the building. OVHcloud has four other data centers using the same principle. OVHcloud says this is an environmentally sound, energy-efficient design, but since the fire, concerns have been raised that it can act rather like a chimney. Vents that allow external air to enter would need to be shut immediately in the event of a potential fire (the nearby, newer SBG3 data center, which uses an updated design, suffered less damage).

- VESDA and fire suppression.

It is being reported that SBG2 had neither a VESDA (very early smoke detection apparatus) system nor a water or gas fire suppression system. Rather, staff relied on smoke detectors and fire extinguishers. It is not known if these reports are accurate. Most data centers do have early detection and fire suppression systems, and OVHcloud does deploy these at other data centers.

- Backup and cloud services.

Cloud (and many hosting) companies cite high availability figures and extremely low figures for data loss. But full storage management and recovery across multiple sites costs extra, especially for hosted services. Many customers, especially smaller ones, pay for basic backup only. Statements from OVHcloud since the fire suggest that some customers will have lost data: some backups were in the same data center, or on the same campus, and not all data was replicated elsewhere.

Fire and resiliency certification

Fire prevention and building regulations are mostly the responsibility of local planning authorities (authorities having jurisdiction, or AHJs). Their requirements vary widely across geographies.

Would any data center certification have surfaced the risks, helping to prevent the fire? The answer is probably not. Uptime Institute Tier certification and others tend to avoid duplicating, and possibly contradicting, local fire regulations. Specific data center risk assessments, however, might have identified obvious risks and anomalies, or any concerns with fire suppression.

In recent years, accidental discharge of fire suppression systems, especially high pressure clean agent gas systems, has actually caused more serious disruption than fires (see Figure) with some banking and financial trading data centers affected by this issue. Fires near a data center, or preventative measures taken to reduce the likelihood of forest fires, have also led to some data center disruption (not included in the numbers reported above).

A version of this article first appeared in the Uptime Institute Journal.