AI-powered applications are rapidly becoming essential to key business functions, such as quality control and customer communications. And the arrival of generative AI algorithms like ChatGPT indicates we’re only beginning to see how truly pervasive this technology will be in our daily lives.

It is not difficult to get started with AI. Accessible frameworks and pre-trained models provide foundations that developers can easily build on. Many developers run their AI experiments on their own hardware or in the public cloud. But things get more complicated once a project moves past the early stages.

Hidden challenges of scaling AI

As AI and machine learning projects evolve and scale over time, the infrastructure requirements for their workloads change as well.

Early experiments can use small data sets to create a working model, but as an application grows, it must access and process real business data for each use case. This can prove problematic if data is stored in the public cloud, as shared resources can be slowed by the demands of AI’s iterative development processes.

The increased compute requirements of real-time inference can lead to poor performance if an organization is not running on dedicated infrastructure. As a project scales, compute hardware and data storage may need to be kept close to each other to keep latency low and performance high.

Another challenge is that once an organization successfully implements AI within a key business function, it evolves from being an experiment to being mission-critical – with no room to fail. The infrastructure supporting it must be fast and completely reliable, and disaster recovery becomes much more important. If running in the public cloud, constant availability requirements will often demand multiple instances across regions and cloud providers.

Governance and compliance also become increasingly difficult due to the spread of shadow IT, where teams in different departments start spinning up their own AI solutions, often without IT’s awareness. Projects can end up running on multiple infrastructure providers, potentially causing security and compliance issues.

Finding the right infrastructure solution

When considering where to host your AI workloads to support growing projects, there are four main options:

- Public cloud: This is flexible and scalable. The “as a service” consumption model makes it easy to get started without a huge upfront investment, and you don’t have to worry about hardware maintenance and management. On the flip side, shared cloud resources might not be able to deliver the required performance, causing issues with application access and reliability. In addition, fees for storing, downloading, and processing data can add up fast, and implementing disaster recovery best practices can incur costly data egress fees.

- On-premises: Provides complete control over your data. Dedicated hardware is likely to meet performance requirements, and costs tend to be more predictable. However, getting started can require significant upfront capital expenditure, and setting up a GPU cluster takes time and requires a specialized skill set. There are significant maintenance/management requirements, and capacity planning can be very challenging.

- Traditional colocation: Solves the maintenance and management challenges of on-premises, while still providing total control over your data and equipment. This model also provides more compute and storage at a lower cost than public cloud. On the other hand, dedicated hardware can require a significant capital expenditure and, depending on availability, could require months of lead time. Making infrastructure changes quickly can also be a challenge.

- AI/ML infrastructure-as-a-service (IaaS): This can provide the best of both worlds – the flexibility of public cloud with the high performance needed for AI workloads. A growing ecosystem of AI cloud providers is giving companies immediate access to highly scalable AI computing power in a subscription model. In addition, some data center companies, like Cyxtera, are offering IaaS specifically for AI workloads, enabling organizations to provision dedicated hardware on demand. This approach is well-suited for AI projects as they scale, offering significant compute power without major upfront costs or wait times. You can quickly provision new resources as your capacity requirements increase, helping lower total cost of ownership and accelerate time-to-market, without needing to worry about hardware maintenance and management.

As your organization’s AI projects become more complex and business-critical, do you have the right infrastructure strategy in place? If not, you will eventually run into issues with cost, performance, or resources – any of which could cause an AI project to falter.

It’s not necessary to be “all-in” on any one infrastructure solution. When evaluating options, you should:

- Look at the attributes of your AI applications and data and take the time to understand the specific technology requirements for each workload in your environment;

- Review the desired business outcomes for each workload and map them to infrastructure requirements;

- Create an infrastructure strategy that allows you to match the right resources to each workload at each stage of your AI development journey.

More on DCD

-

Cyxtera to use CareAR for augmented reality data center support

Managing data centers with augmented reality

-

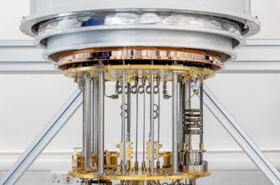

Sponsored Accelerating the accessibility of quantum computing via colocation data centers

Quantum computing promise to solve insoluble technical challenges exponentially faster than conventional computers. But acquiring, installing and maintaining a quantum computer is expensive. Could quantum computing-as-a-service be the answer?

-

Oxford Quantum Circuits to deploy quantum computer in Cyxtera facility in UK

Cyxtera’s LHR3 facility in Reading first colo data center to house quantum system