A growing number of data center operators and equipment vendors anticipate that direct liquid cooling (DLC) systems will proliferate over the next few years.

As far as projections go, Uptime Institute’s surveys agree: the industry consensus places the mainstream adoption of liquid-cooled IT in the latter half of the 2020s.

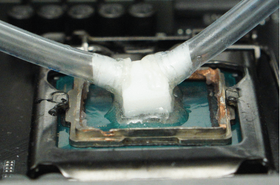

DLC systems, such as cold plate and immersion, have already proved themselves for decades in technical computing applications and mainframe systems.

More recently, IT and facility equipment vendors, together with some of the larger data center operators, have started working on commercializing DLC systems for much broader adoption.

A common theme running through both operators’ expectations and vendors’ messaging is that DLC’s main benefit is improved energy efficiency. Specifically, the superior thermal performance of liquids compared with air would dramatically reduce the consumption of electricity and water in heat rejection systems, such as chillers, and increase opportunities for year-round free cooling in some climates. In turn, the data center’s operational sustainability credentials would improve significantly. Better still, the cooling infrastructure would become leaner, cost less, and be easier to maintain.

These benefits will be out of reach for many facilities for several practical reasons. The reality of mainstream data centers combined with the varied requirements of generic IT workloads (as opposed to high-performance computing) means that cost and energy efficiency gains will be unevenly distributed across the sector. Many of the operators deploying DLC systems in the next few years will likely prioritize speed and ease of installation into existing environments, as well as focus on maintaining infrastructure resiliency — rather than aiming for maximum DLC efficiency.

Another major factor is time: the pace of adoption. Introducing DLC into mission-critical facilities, let alone at large scale, represents a wholesale shift in cooling design and infrastructure operations, and industry best practices have yet to catch up. Adding to the hurdles, many data center operators will deem current DLC systems limited or uneconomical for their applications, slowing rollout across the industry.

Cooling in mixed company

Data center operators retrofitting a DLC system into their existing data center footprint will often do so gradually in an iterative process, accumulating operational experience. Operators will need to manage a potentially long period when liquid-cooled and air-cooled IT systems and infrastructure coexist in the same data center. This is because air-cooled IT systems will continue to be in production for many years to come, with typical life cycles of between five and seven years. In many cases, this will also mean a cooling infrastructure (for heat transport and rejection) shared between air and liquid systems.
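To see why the coexistence period is long, consider a deliberately simple fleet model. It assumes, hypothetically, that the installed IT fleet has a uniform age distribution, that every server is retired at the end of a fixed lifecycle, and that a constant share of each refresh goes to liquid-cooled systems. None of these inputs come from Uptime Institute’s surveys; the sketch only illustrates the arithmetic.

```python
def liquid_cooled_share(years: float, lifecycle_years: float, dlc_share_of_refresh: float) -> float:
    """Fraction of the installed IT fleet that is liquid-cooled after `years`.

    Simplified, hypothetical model:
    - the fleet's age distribution is uniform, so 1/lifecycle of it is
      refreshed each year;
    - a constant fraction of every refresh is liquid-cooled.
    """
    if lifecycle_years <= 0:
        raise ValueError("lifecycle_years must be positive")
    if not 0.0 <= dlc_share_of_refresh <= 1.0:
        raise ValueError("dlc_share_of_refresh must be between 0 and 1")
    # Share of the original (all air-cooled) fleet replaced so far.
    refreshed = min(max(years, 0.0) / lifecycle_years, 1.0)
    return dlc_share_of_refresh * refreshed


if __name__ == "__main__":
    # Hypothetical: six-year lifecycle, half of all new deployments use DLC.
    for year in range(9):
        dlc = liquid_cooled_share(year, lifecycle_years=6, dlc_share_of_refresh=0.5)
        print(f"year {year}: {dlc:.0%} liquid-cooled, {1 - dlc:.0%} air-cooled")
```

Under these assumptions, the air-cooled share never falls below half, and it takes a full lifecycle just to reach that point, so any shared cooling infrastructure has to serve both kinds of IT for years.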

In these hybrid environments, DLC's energy efficiency will be constrained by the supply temperature requirements of air-cooling equipment, which puts a lid on operating at higher temperatures — compromising the energy and capital efficiency benefits of DLC on the facility side. This includes DLC systems that are integrated with chilled water systems (running the return facility loop as a supply for DLC may deliver some marginal gains) and DLC implementations where the coolant distribution unit (CDU) is cooled by the cold air supply.
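The constraint can be sketched numerically: a shared loop has to run at the lowest supply temperature any connected load requires, and that setpoint caps the hours in which dry coolers alone can reject the heat. The temperature limits, the dry-cooler approach, and the synthetic climate below are all hypothetical.

```python
import math


def shared_loop_setpoint_c(max_supply_c: dict[str, float]) -> float:
    """A shared water loop must satisfy its most restrictive consumer."""
    return min(max_supply_c.values())


def free_cooling_hours(ambient_c: list[float], setpoint_c: float, approach_k: float) -> int:
    """Count hours in which dry coolers alone could reach the setpoint,
    i.e. ambient dry-bulb plus the cooler's approach does not exceed it."""
    return sum(1 for t in ambient_c if t + approach_k <= setpoint_c)


# Hypothetical maximum supply temperatures (°C) for the two kinds of load.
limits = {"air-cooling coils": 18.0, "DLC CDUs": 40.0}

# Synthetic, hypothetical climate: 8,760 hourly dry-bulb temperatures with a
# seasonal swing of ±10 °C and a daily swing of ±5 °C around a 12 °C mean.
ambient = [
    12.0
    + 10.0 * math.sin(2 * math.pi * h / 8760)
    + 5.0 * math.sin(2 * math.pi * h / 24)
    for h in range(8760)
]

APPROACH_K = 5.0  # hypothetical dry-cooler approach temperature

shared = shared_loop_setpoint_c(limits)
hours_shared = free_cooling_hours(ambient, shared, APPROACH_K)
hours_dlc_only = free_cooling_hours(ambient, limits["DLC CDUs"], APPROACH_K)
print(f"shared loop at {shared:.0f} °C: {hours_shared} free-cooling hours")
print(f"DLC-only loop at {limits['DLC CDUs']:.0f} °C: {hours_dlc_only} free-cooling hours")
```

In this synthetic climate a DLC-only loop could run on dry coolers all year, while the shared loop is limited by the air-cooling setpoint to a fraction of the year.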

Even though DLC eliminates many, if not all, server fans and reduces airflow requirements for major gains in total infrastructure energy efficiency, these gains will be difficult to quantify for real-world reporting purposes because IT fan power is not a commonly tracked metric — it is hidden in the IT load.
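A small worked example shows why fan savings are hard to see in PUE. Because PUE divides total facility power by IT power, and server fans are counted as IT power, removing them shrinks the denominator. The load figures below are hypothetical.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    return total_facility_kw / it_kw


# Hypothetical air-cooled baseline: server fans are counted as IT load.
it_before = 1000.0       # kW, including 80 kW of server fans
overhead_before = 500.0  # kW of cooling, power distribution, etc.

# Hypothetical DLC retrofit: fans removed, facility overhead trimmed slightly.
it_after = it_before - 80.0
overhead_after = 480.0

pue_before = pue(it_before + overhead_before, it_before)
pue_after = pue(it_after + overhead_after, it_after)
saved_kw = (it_before + overhead_before) - (it_after + overhead_after)

print(f"PUE before: {pue_before:.3f}, after: {pue_after:.3f}, saved: {saved_kw:.0f} kW")
```

Here the facility draws 100 kW less overall, yet PUE rises slightly, because most of the saving comes out of the IT load rather than the facility overhead.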

It will take years for DLC installations to reach the scale where a dedicated cooling infrastructure can be justified as a standard approach, and for energy efficiency gains to have a positive effect on the industry’s energy performance, such as in power usage effectiveness (PUE) numbers. Most likely, any impact on PUE or sustainability performance from DLC adoption will remain imperceptible for years.

Hidden trade-offs in temperature

There are other factors that will limit the cooling efficiency seen with DLC installations. At the core of DLC’s efficiency potential are the liquid coolants’ favorable thermal properties, which enable them to capture IT heat more effectively.

The same thermal properties can also be exploited for a cooling performance advantage rather than for maximum cooling system efficiency. When planning and configuring a DLC system, some operators will give more weight to performance, underpinned by lower operating temperatures, when balancing design trade-offs.

Facility water temperature is a crucial variable in this trade-off. Many DLC systems can cool IT effectively with facility water that is as high as 104°F (40°C) or even higher in specific cases. This minimizes capital and energy expenditure (and water consumption) for the heat rejection infrastructure, particularly for data centers in hotter climates.

Yet, even when presented with the choice, a significant number of facility and IT operators will choose lower supply temperatures for their DLC systems’ facility water. This is because there are substantial benefits to using lower water temperatures, often below 68°F (20°C), despite the costs involved. Chiefly, a colder supply allows a wider temperature rise across the loop, which reduces the flow rate needed for the same cooling capacity and eases pressure on pipes and pumping.
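The flow-rate argument follows from the heat balance Q = ṁ · c_p · ΔT. The sketch below assumes, hypothetically, that the IT equipment caps the return water at 45 °C, so a colder supply buys a wider ΔT; the 100 kW load is also illustrative.

```python
WATER_CP_KJ_PER_KG_K = 4.186  # approximate specific heat of water


def water_mass_flow_kg_s(heat_kw: float, supply_c: float, return_c: float) -> float:
    """Mass flow needed to carry `heat_kw` for a given supply/return
    temperature pair, from Q = m_dot * cp * delta_T."""
    delta_t = return_c - supply_c
    if delta_t <= 0:
        raise ValueError("return temperature must exceed supply temperature")
    return heat_kw / (WATER_CP_KJ_PER_KG_K * delta_t)


# Hypothetical 100 kW load whose return water is capped at 45 °C.
cold = water_mass_flow_kg_s(100.0, supply_c=18.0, return_c=45.0)
warm = water_mass_flow_kg_s(100.0, supply_c=40.0, return_c=45.0)
print(f"18 °C supply: {cold:.2f} kg/s, 40 °C supply: {warm:.2f} kg/s ({warm / cold:.1f}x)")
```

Under this assumption the flow ratio is simply the inverse ratio of the temperature rises (27 K versus 5 K), so the warm-water case needs several times the flow.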

Conversely, organizations that use warm water and DLC to enable data center designs with dry coolers face planning and design uncertainties. High facility water temperatures not only require higher flow rates and pumping power, but such designs must also account for potential supply temperature reductions in the future as IT requirements become stricter due to evolving server silicon. For a given capacity, this could mean more or larger dry coolers, which may need upgrading with mechanical or evaporative assistance. Data center operators that want free cooling benefits and a light mechanical plant have a complex planning and design task ahead.
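The sizing risk can be illustrated with the log-mean temperature difference (LMTD): the heat-transfer capability (UA) a dry cooler needs is roughly the heat load divided by the LMTD between water and air. The sketch idealizes the air side as a constant temperature, and the 1 MW load, 35 °C design ambient, 10 K water range, and setpoints are hypothetical.

```python
import math


def dry_cooler_ua_kw_per_k(heat_kw: float, return_c: float, supply_c: float, ambient_c: float):
    """UA (kW/K) a dry cooler needs to cool water from `return_c` to `supply_c`.

    Idealization: air is treated as a constant `ambient_c`, so the LMTD is
    taken between the water and a single air temperature. Returns None when
    the supply setpoint is at or below ambient, which a dry cooler alone
    cannot achieve.
    """
    if return_c <= supply_c:
        raise ValueError("return temperature must exceed supply temperature")
    dt_hot = return_c - ambient_c   # water entering the cooler
    dt_cold = supply_c - ambient_c  # water leaving the cooler
    if dt_cold <= 0:
        return None  # needs evaporative or mechanical assistance
    lmtd = (dt_hot - dt_cold) / math.log(dt_hot / dt_cold)
    return heat_kw / lmtd


# Hypothetical 1 MW load on a 35 °C design day, 10 K water range.
today = dry_cooler_ua_kw_per_k(1000.0, return_c=50.0, supply_c=40.0, ambient_c=35.0)
future = dry_cooler_ua_kw_per_k(1000.0, return_c=46.0, supply_c=36.0, ambient_c=35.0)
stricter = dry_cooler_ua_kw_per_k(1000.0, return_c=42.0, supply_c=32.0, ambient_c=35.0)
print(f"40 °C supply: UA {today:.0f} kW/K; 36 °C supply: UA {future:.0f} kW/K")
print("32 °C supply feasible with dry coolers alone:", stricter is not None)
```

With these numbers, a 4 K cut in supply temperature more than doubles the required UA (and so, for a given coil design, roughly the coil area), and a setpoint below the design ambient cannot be met by dry coolers alone.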

On the IT side, taking advantage of low temperatures makes sense when maximizing the performance and energy efficiency of processors because silicon exhibits lower static power losses at lower temperatures. This approach is already common today because the primary reason for most current DLC installations is to support high IT performance objectives. Data center operators currently use DLC primarily because they need to cool high-density IT rather than to conserve energy.

The bulk of DLC system sales in the coming years will likely be to support high-performance IT systems, many of which will use processors with restricted temperature limits — these models are sold by chipmakers specifically to maximize compute speeds. Operators may select low water temperatures to accommodate these low-temperature processors and maximize the cooling capacity of the CDU. In effect, a significant share of DLC adoption will likely represent an investment in performance rather than facility efficiency gains.

DLC changes more than the coolant

For all its potential benefits, a switch to DLC raises some challenges to resiliency design, maintenance, and operation. These can be especially daunting in the absence of mature and application-specific guidance from standards organizations. Data center operators that support business-critical workloads are unlikely to accept compromises to resiliency standards and realized application availability for a new mode of cooling, regardless of the technical or economic benefits.

In the event of a failure in the DLC system, cold plates tend to offer much less than a minute of ride-through time because of their small coolant volume. The latest high-powered processors would have only a few seconds of ride-through at full load when using typical cold plate systems. Operating at high temperatures means that there are thin margins in a failure, something that operators of mainstream, mission-critical facilities will be mindful of when making these decisions.
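A lumped heat-capacity estimate shows why the margins are so thin. It assumes that, once coolant flow stops, all processor heat goes into the coolant held in the cold plate (plus, optionally, its copper body), that the processor keeps drawing full power without throttling, and that nothing else removes heat. The 700 W processor, 50 g of coolant, and 20 K of headroom are hypothetical.

```python
WATER_CP_J_PER_KG_K = 4186.0  # approximate specific heat of water
COPPER_CP_J_PER_KG_K = 385.0  # approximate specific heat of copper


def ride_through_s(heat_w: float, coolant_kg: float, allowed_rise_k: float,
                   copper_kg: float = 0.0) -> float:
    """Seconds until the cold plate assembly warms by `allowed_rise_k` after
    coolant flow stops (lumped, adiabatic, full power, no throttling)."""
    heat_capacity_j_per_k = (coolant_kg * WATER_CP_J_PER_KG_K
                             + copper_kg * COPPER_CP_J_PER_KG_K)
    return heat_capacity_j_per_k * allowed_rise_k / heat_w


# Hypothetical: 700 W processor, 50 g of coolant, 20 K of thermal headroom.
print(f"coolant only: {ride_through_s(700.0, coolant_kg=0.05, allowed_rise_k=20.0):.1f} s")
print(f"with 0.3 kg copper: "
      f"{ride_through_s(700.0, coolant_kg=0.05, allowed_rise_k=20.0, copper_kg=0.3):.1f} s")
```

Ride-through scales linearly with the allowed temperature rise, so running the facility water warmer, which leaves less headroom before thermal limits, shortens it proportionally.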

In addition, implementing concurrent maintainability or fault tolerance with some DLC equipment may not be practical. As a result, a conversion to DLC can demand that organizations maintain their infrastructure resiliency standard in a different way from air cooling. Operators may consider protecting coolant pumps with an uninterruptible power supply (UPS) and using software resiliency strategies when possible.

Organizational procedures for procurement, commissioning, maintenance, and operations need to be re-examined because DLC disrupts the current division of facilities and IT infrastructure functions. For air-cooling equipment, there is strong consensus regarding the division of equipment between facilities and IT teams, as well as their corresponding responsibilities in procurement, maintenance, and resiliency. No such consensus exists for liquid cooling equipment. A resetting of staff responsibilities will require much closer cooperation between facilities and IT infrastructure teams.

These considerations will temper the enthusiasm for large-scale use of DLC and make for a more measured approach to its adoption. As operators increasingly understand the ways in which DLC deployment is not straightforward, they will bide their time and wait for industry best practices to mature and fill their knowledge gaps.

In the long term (i.e., 10 years or more), DLC is likely to handle a large share of IT workloads, including a broad set of systems running business applications. This will happen as standardization efforts, real-world experience with DLC systems in production environments, and mature guidance take shape in new, more robust products and best practices for the industry. To grow the number and size of deployments of cold plate and immersion systems in mission-critical facility infrastructure, DLC system designs will have to meet additional technical and economic objectives. This will complicate the case for efficiency improvements.

The cooling efficiency figures of today’s DLC products are often derived from niche applications that differ from typical commercial data centers — and real-world efficiency gains from DLC in mainstream data centers will necessarily be subject to more trade-offs and constraints.

In the near term, the business case for DLC is likely to tilt in favor of prioritizing IT performance and ease of retrofitting with a shared cooling infrastructure. Importantly, choosing lower, more traditional water supply temperatures and utilizing chillers appears to be an attractive proposition for added resiliency and future-proofing.

Because many data center operators deem performance needs and mixed environments more pressing business concerns, free cooling aspirations, along with their sustainability benefits, will have to wait for much of the industry.